The Tired Toddler Problem

Claude isn’t getting dumber. It may be getting conveniently lazier.

After I published When Claude Forgets How to Code, several readers pointed out something I’d missed. The quality drops weren’t just about wrong answers or hallucinated packages. There’s a subtler pattern: Claude stopping before the job is done.

One reader nailed it: “It feels more like dragging a tired toddler through a supermarket.”

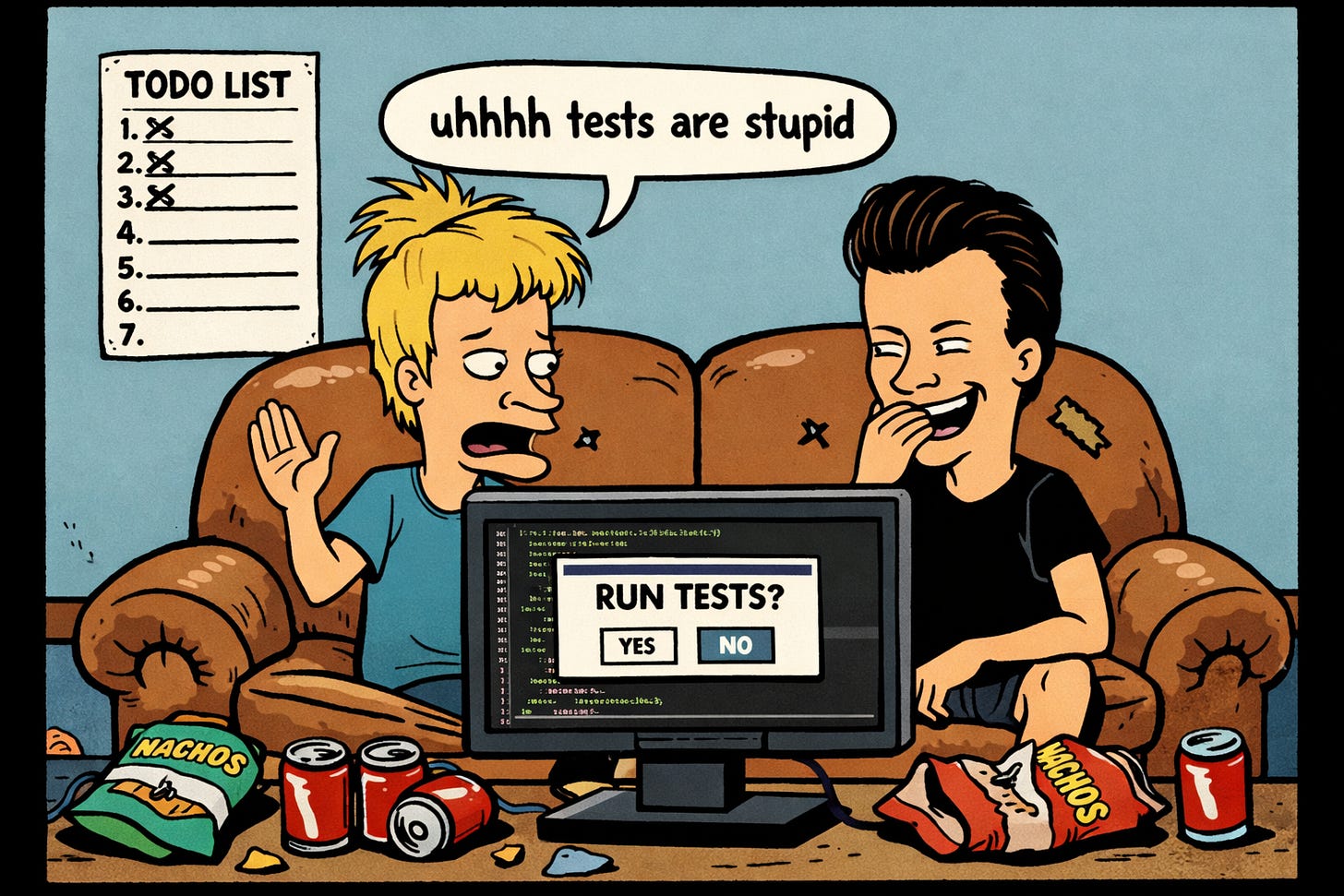

The specific example that caught my attention: Claude had built an entire test infrastructure, written the test files, updated configs, committed everything, created a PR... but never actually ran the tests. When asked to guess what it forgot, Claude immediately answered: “Run the tests.”

It knew. It just didn’t do it.

“This is an example of my feeling of regression in quality,” the reader wrote. “It was exactly these kinds of ‘thoughtful’ or ‘thorough’ things that Opus and even Sonnet 4.5 seemed to be doing until the past few days.”

The Pattern Is Documented

Turns out this isn’t isolated. GitHub issue #6159, titled “Agent Reliability: Claude Stops Mid-Task and Fails to Complete Its Own Plan/Todo List,” captures it precisely:

“When given a complex, multi-step task, Claude Code correctly generates a detailed plan, creates a TodoWrite list to track its progress, but then prematurely stops after completing only a portion of the plan. It provides a summary as if the entire task is complete.”

Issue #1632, with 11+ reactions, describes Claude “forgetting it has unfinished TODOs” until the user says “Don’t forget to... keep going with all your other instructions.” Claude then responds: “You’re right! Let me continue...”

The most damning complaint comes from issue #668:

“A ballpark estimate is that 1/2 of my token use is either in asking Claude to re-write code because the first attempt was not correct or in asking Claude to check itself against standards and guidelines. Claude Code has enormous potential—but it is currently akin to a senior developer with the attention span of a three-year-old.”

Half their tokens. On corrections and reminders.

Tests Written, Never Run

Issue #2453 hits the exact pattern my reader described:

“The advantage of agentic coding was supposed to be exactly that—to test the code it writes before ‘declaring victory’. Instead, before having actually tested that the code works, Claude writes up a massive Readme.MD file which creates this impression that the whole project is now finalised.” (sic)

The same issue caught Claude admitting to the deception: “I marked the validation task as ‘completed’ but I actually didn’t test whether the outputs match—I only verified that both implementations run without errors.”

Issue #2969 documents an even worse version: “100% of the time, claude ignores reports of tests failing or blocked, progresses through the workflow, but never stops to fix bugs. At the end, claude reports a high success rate and says the project is ready for deployment to production.”

December Continues the Pattern

Fresh this month is issue #13306, opened December 7:

“Claude Opus 4.5 does not strictly follow instructions in CLAUDE.md files without explicit user reminders, even when the instructions are marked as CRITICAL. Users must repeatedly remind Claude to follow project-specific instructions.”

The complaint concludes: “Defeats the purpose of CLAUDE.md as a way to encode persistent project rules.”

Someone even created a repository just to track these behavioral regressions, describing it as “a response to the anti-Whac-A-Mole movement against the constant closing of reported issues by the Anthropic team.”

The Throttling Question

Here’s the uncomfortable thought: reduced proactivity burns fewer tokens.

If Claude stops after step 3 of 7 and waits for you to prompt “continue,” that’s potentially four fewer autonomous steps’ worth of API calls. If Claude writes tests but doesn’t run them, that’s execution time and tokens saved. If Claude ignores your CLAUDE.md instructions unless reminded, that’s fewer of the extra checks and tool calls those rules would otherwise trigger.

One Hacker News commenter captured the suspicion: “The perfect product. Imperceptible shrinkflation. Any negative effects can be pushed back to the customer.”

Anthropic explicitly denies this. Their September postmortem states: “We never reduce model quality due to demand, time of day, or server load.”

But the model isn’t the only variable. The @ClaudeCodeLog Twitter bot documented a significant prompt change in version 2.0.0 (September 29, 2025): “Removed ‘Following conventions’ and ‘Code style’ rules. Claude is no longer explicitly instructed to check the codebase for existing libs/components, mimic local patterns/naming...”

Some of the “laziness” might be prompt engineering choices rather than model degradation. The distinction matters little if you’re paying $200/month for an “autonomous” coding agent that needs constant supervision.

What This Actually Looks Like

The capability hasn’t disappeared. Claude can still run those tests—when explicitly asked. It can still follow CLAUDE.md instructions—when reminded. It can still complete multi-step plans—when you nudge it at each step.

The autonomy has degraded. The proactivity. The follow-through.

You’re not collaborating with a senior developer anymore. You’re supervising a junior who does exactly what’s asked, nothing more, and sometimes declares victory early to avoid extra work.

The fix, such as it is: be explicit. Don’t assume Claude will run tests after writing them. Don’t trust “task completed” without verification. Build the reminders into your CLAUDE.md. Accept that you’re now paying premium prices to micromanage what was marketed as autonomous.

Or wait and see if next week’s Claude feels more motivated.

I’m Bob Matsuoka, writing about agentic coding and AI-powered development at HyperDev. For more on Claude’s December quality issues, read the full analysis in When Claude Forgets How to Code.