A few weeks ago, I jumped on the orchestration train and haven't looked back. But something unexpected happened along the way—I stopped using IDEs altogether. At first, I thought it was because the IDEs weren't good enough. I liked Zed, for example, a fresh take on an agentic IDE that felt promising. But now I realize it's not even that.

IDEs are no longer sufficient to capture the multi-threaded, event-driven, agent-orchestrated model of development I use today.

What I need instead is better situational awareness. And for the past week, I've been building exactly that—a HUD for agent orchestration that gives me real-time visibility into what's actually happening across my development environment.

The Death of IDE-Centric Development

When you're orchestrating multiple Claude agents working in parallel across different parts of your codebase, traditional IDE interfaces break down completely. You can't meaningfully track five agents implementing different features simultaneously through VS Code tabs or Cursor windows. The mental model is wrong.

Think about it: IDEs were designed around the assumption that one human would edit one file at a time, maybe jumping between a few related files. But agent orchestration operates on entirely different principles:

Multiple agents working simultaneously across unrelated parts of the codebase

Event-driven coordination where agents respond to each other's work

Context that spans projects and persists across sessions

Task decomposition that humans need to monitor, not micromanage

Traditional IDEs have no conceptual framework for this reality. They're optimized for human-scale, sequential development workflows that agent orchestration has rendered obsolete.

Building Situational Awareness

The solution isn't a better IDE—it's a completely different interface paradigm. Over the past week, I've been building what I call a development HUD (Head-Up Display) that intercepts all Claude Code events and gives me real-time situational awareness across my entire development environment.

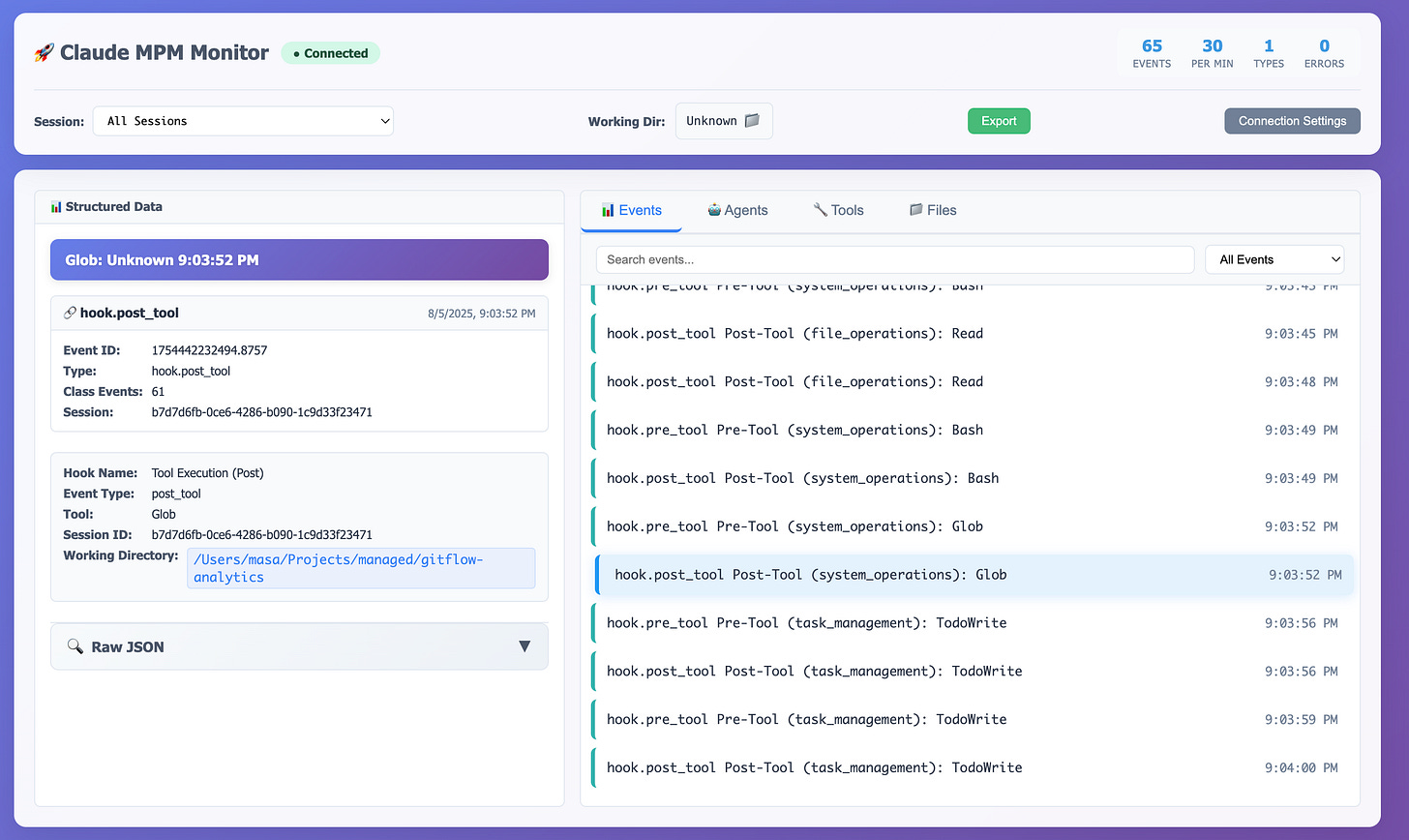

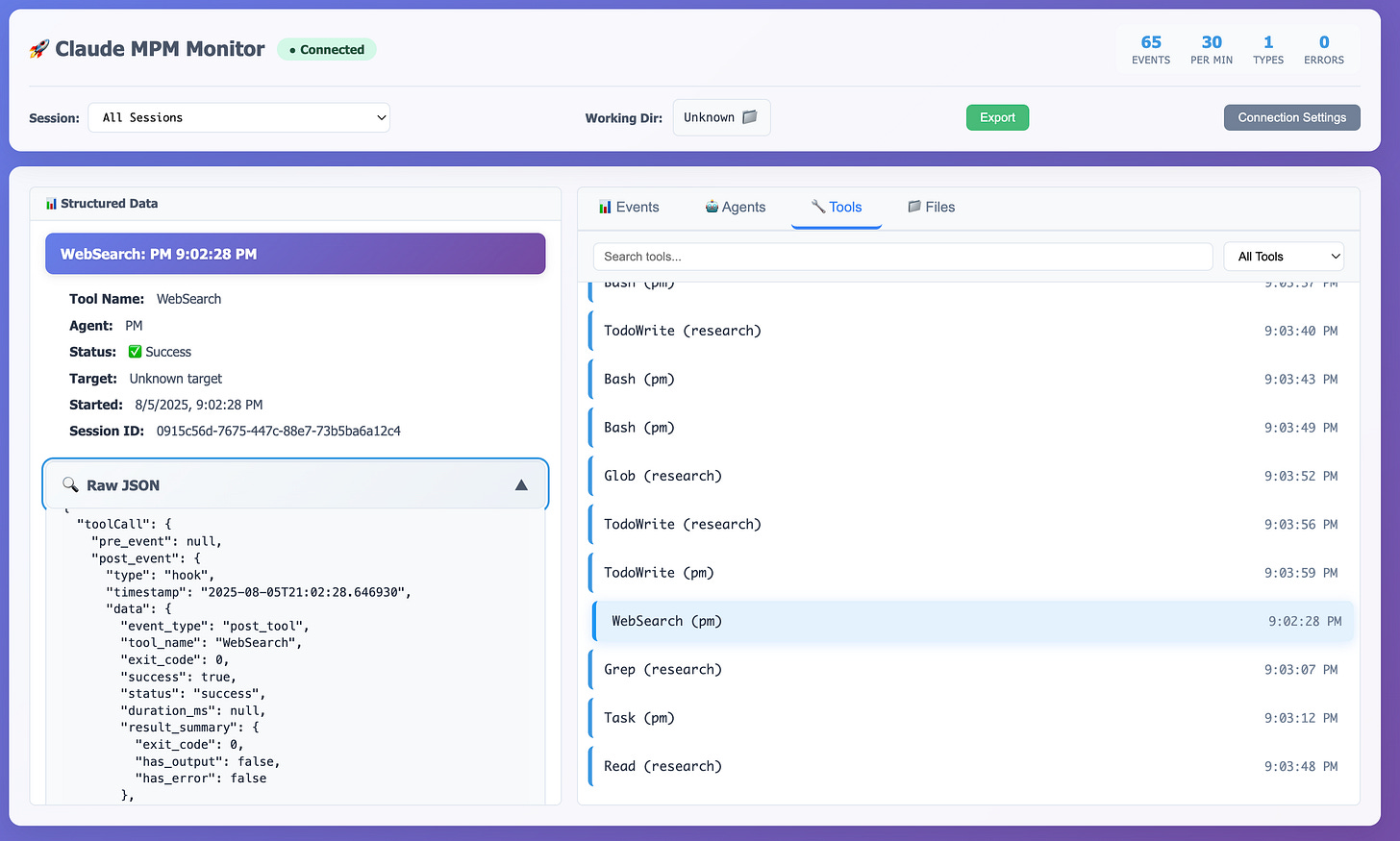

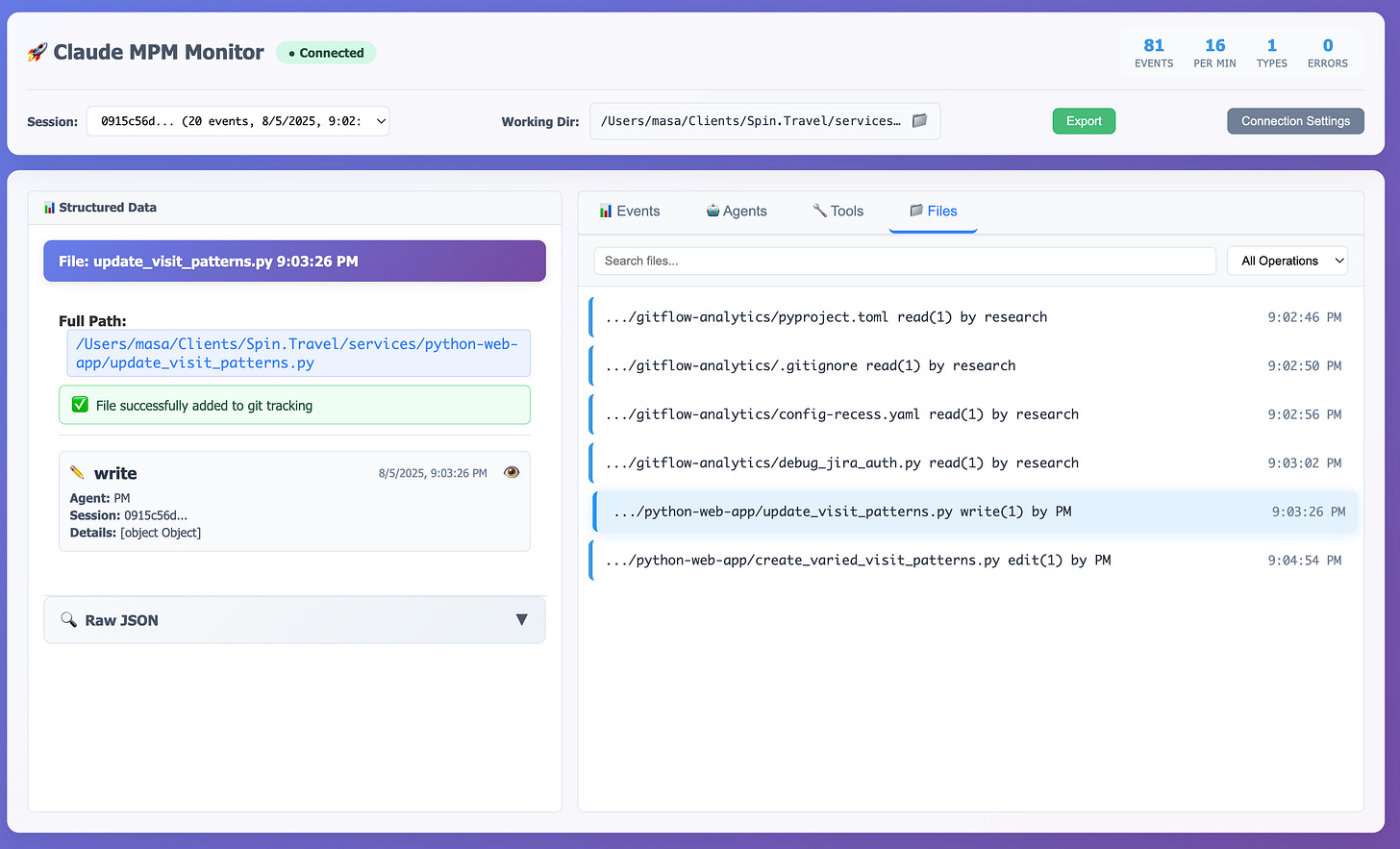

The architecture is event-driven: I capture all Claude Code hooks, publish them using socket.io, and visualize them through parsers that identify common data elements—agents, tools, files modified, task progress. It's like having a radar screen for your development process.
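The parsing step can be sketched roughly as follows. This is a minimal illustration, not the Claude-MPM implementation: `HudEvent` and `parse_hook_event` are hypothetical names, and the payload fields (`session_id`, `hook_event_name`, `tool_name`, `tool_input`) are assumed to match what the hook delivers as JSON. Publishing over socket.io is left out so the sketch stays dependency-free.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class HudEvent:
    """Common data elements extracted from one raw hook payload (hypothetical shape)."""
    session: str
    kind: str                       # e.g. "PreToolUse" or "PostToolUse"
    tool: Optional[str] = None
    agent: Optional[str] = None
    files: list[str] = field(default_factory=list)


def parse_hook_event(raw: str) -> HudEvent:
    """Normalize one JSON hook payload into a HudEvent."""
    data: dict[str, Any] = json.loads(raw)
    tool_input = data.get("tool_input") or {}
    # Assumption: file-editing tools report the target under "file_path".
    files = [tool_input["file_path"]] if "file_path" in tool_input else []
    return HudEvent(
        session=data.get("session_id", "unknown"),
        kind=data.get("hook_event_name", "unknown"),
        tool=data.get("tool_name"),
        # Assumption: delegations name the sub-agent under "subagent_type".
        agent=tool_input.get("subagent_type"),
        files=files,
    )


# Example payload, invented for illustration.
sample = json.dumps({
    "session_id": "demo-session",
    "hook_event_name": "PreToolUse",
    "tool_name": "Edit",
    "tool_input": {"file_path": "src/app.py"},
})
event = parse_hook_event(sample)
print(event.kind, event.tool, event.files)
```

Normalizing every payload into one small record is what lets the same view render agents, tools, and files regardless of which hook produced the event.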

The HUD concept comes from a great piece by Geoffrey Litt that crystallized something I'd been feeling for weeks. Citing Mark Weiser's 1992 critique of "copilot" metaphors, Litt argues that instead of virtual assistants that grab our attention, we need interfaces that fade into the background and extend our natural capabilities.

Weiser summed up his goal with the line "You'll no more run into another airplane than you would try to walk through a wall": an invisible computer that becomes an extension of your body rather than a chatty copilot demanding your attention.

That's exactly what orchestrated development needs.

What A Development HUD Actually Looks Like

My current implementation in Claude-MPM version 3.3.3 provides several key visibility layers:

Agent Activity Monitoring: I can scan agents as they work, zooming in where needed and getting a sense of overall activity across one or more projects. No more wondering what's happening in that Claude session I started twenty minutes ago.

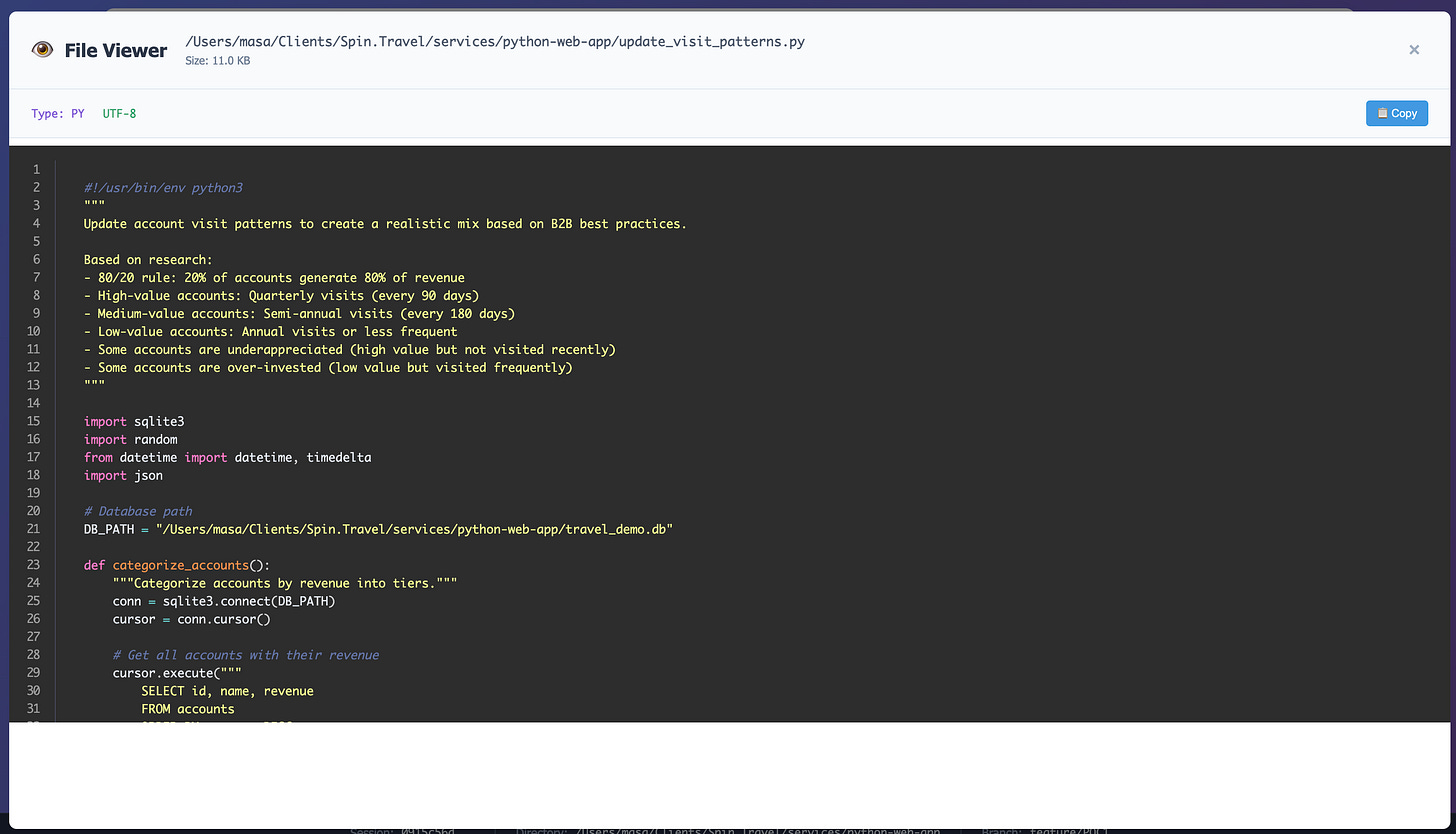

Real-Time Diff Visualization: The diff viewer shows exactly what each agent has changed, with timeline scrubbing to see all changes over time. When Agent A modifies a component that Agent B depends on, I can immediately see the impact and coordination needed.

Task Flow Tracking: All todo lists and task decompositions are visible in real-time. I can see the steps the PM agent and sub-agents set up to solve my problems, watching progress flow through the task tree.

Prompt Transparency: I can see both my original prompts and the system-generated prompts sent to sub-agents. Even though I control the agent prompts, the framework also injects learnings and base context, so seeing the final prompt helps me understand agent behavior.

Structural Organization: The viewer adds form to all framework output, making sense of the event stream in ways that traditional file trees and terminal output simply can't match.
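To make the diff timeline idea concrete, here is a small sketch using Python's standard `difflib`. The `Change` record and the helper names are hypothetical, and the overlap check is a deliberate simplification: it only flags files touched by more than one agent, not true dependencies between components.

```python
from __future__ import annotations

import difflib
from dataclasses import dataclass


@dataclass
class Change:
    """One recorded file modification (hypothetical record shape)."""
    agent: str
    path: str
    before: str
    after: str


def render_diff(change: Change) -> str:
    """Return a unified diff for one recorded change."""
    lines = difflib.unified_diff(
        change.before.splitlines(keepends=True),
        change.after.splitlines(keepends=True),
        fromfile=f"a/{change.path}",
        tofile=f"b/{change.path}",
    )
    return "".join(lines)


def changes_up_to(timeline: list[Change], step: int) -> list[Change]:
    """Timeline scrubbing: the changes visible at position `step`."""
    return timeline[:step]


def touched_by_multiple(timeline: list[Change]) -> dict[str, set[str]]:
    """Paths modified by more than one agent, a likely coordination point."""
    by_path: dict[str, set[str]] = {}
    for change in timeline:
        by_path.setdefault(change.path, set()).add(change.agent)
    return {path: agents for path, agents in by_path.items() if len(agents) > 1}


# Invented example data: two agents editing the same file.
timeline = [
    Change("agent-a", "ui/button.py", "size = 1\n", "size = 2\n"),
    Change("agent-b", "ui/button.py", "size = 2\n", "size = 3\n"),
]
print(render_diff(timeline[0]))
print(touched_by_multiple(timeline))
```

Storing before and after snapshots per change keeps scrubbing trivial: any point in time is a slice of the list.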

The Evolution Toward Trees

This is a first pass. Future versions will implement a true tree-style view where the initial todo list becomes the trunk, and each agent's activity flows from branches of that tree. Task decomposition will be visual and interactive rather than buried in terminal output.
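One way such a tree could be modeled, as a sketch only: the `TaskNode` type, the status strings, and the agent names below are assumptions for illustration, not the planned Claude-MPM design. The root plays the role of the trunk, and each node rolls up the progress of the leaves beneath it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TaskNode:
    """A todo item; children are the sub-tasks delegated beneath it."""
    title: str
    status: str = "pending"        # assumed values: pending, in_progress, completed
    agent: str | None = None       # hypothetical agent label
    children: list[TaskNode] = field(default_factory=list)

    def add(self, child: TaskNode) -> TaskNode:
        self.children.append(child)
        return child


def progress(node: TaskNode) -> tuple[int, int]:
    """Return (completed, total) counted over the leaf tasks under node."""
    if not node.children:
        return (1 if node.status == "completed" else 0, 1)
    done = total = 0
    for child in node.children:
        child_done, child_total = progress(child)
        done += child_done
        total += child_total
    return done, total


def render(node: TaskNode, depth: int = 0) -> list[str]:
    """Render the tree as indented lines with rolled-up progress."""
    done, total = progress(node)
    owner = f" [{node.agent}]" if node.agent else ""
    lines = [f"{'  ' * depth}{node.title}{owner} ({done}/{total})"]
    for child in node.children:
        lines.extend(render(child, depth + 1))
    return lines


# Invented example: a trunk with two branches.
root = TaskNode("Add login flow")
api = root.add(TaskNode("API endpoint", agent="engineer"))
api.add(TaskNode("Write handler", status="completed"))
api.add(TaskNode("Write tests"))
root.add(TaskNode("Update docs", status="completed", agent="docs"))
print("\n".join(render(root)))
```

Rolling progress up from the leaves means a glance at the trunk shows overall state, which fits the ambient-awareness goal.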

The goal is ambient awareness—understanding system state without actively monitoring it, the way you're aware of your car's speed without staring at the speedometer.

The Smart UI Connection

This HUD approach aligns perfectly with the prompt-to-tool "smart UI" framework I explored with Sumin several weeks ago. Claude-MPM operates as a wrapper around Claude Code—you can prompt your way to results directly, but when you need to peek behind the scenes, a structured UI is there waiting.

The HUD isn't smart in the sense of being AI-powered itself. It's smart because it surfaces the right information at the right time without requiring you to dig through logs or switch contexts. You get the best of both worlds: natural language interaction for task specification, and visual awareness for system monitoring.

Why This Matters Beyond Personal Productivity

The shift from IDEs to HUDs represents more than interface evolution—it signals a fundamental change in how we think about software development. We're moving from tools that help humans write code to interfaces that help humans orchestrate intelligent systems.

Traditional development tools assumed scarcity: scarce compute resources, scarce context windows, scarce AI capabilities. We built IDEs around optimizing individual human productivity within those constraints.

But when you can spin up multiple specialized agents, maintain persistent context across sessions, and coordinate complex workflows automatically, the bottleneck shifts. Human attention becomes the constraint, not computing resources.

HUDs solve the attention problem by making system state visible without demanding focus. They let you maintain situational awareness while agents handle implementation details.

The Broader Implications

Weiser's 1992 insight about invisible computers feels prophetic when applied to AI development tools. The future isn't more sophisticated chatbots or smarter autocomplete—it's ambient intelligence that extends human capabilities without interrupting human flow.

Consider spellcheck, which Litt uses as an example. It doesn't interrupt you with suggestions or pop up dialog boxes. It just shows you misspelled words with red squiggles, giving you a new sense you didn't have before. That's a HUD.

Now imagine that same ambient awareness applied to code quality, system architecture, performance bottlenecks, security vulnerabilities, and team coordination. Instead of tools that demand your attention, you get enhanced perception of your development environment.

The orchestration HUD I'm building represents an early step in that direction.

What You See Is What You Get

Once you move to agent orchestration, IDEs have outlived their usefulness as primary coding interfaces. They're conceptually mismatched to multi-agent, event-driven development workflows.

The future belongs to HUDs that provide situational awareness without attention theft: interfaces that make you naturally aware of system state the way good airplane instruments make pilots aware of flight conditions.

We're entering the era of ambient development intelligence. The question isn't how to make better IDEs—it's how to build better HUDs that extend human perception into the increasingly complex world of AI-orchestrated software development.

The development HUD functionality is available in Claude-MPM version 3.3.3.