Goodbye, Graphical UI. Hello, Smart UI.

A conversation with Sumin Chou about the future of AI tool interfaces

I've been wrestling with something that Factory.ai crystallized for me last month. Here's this beautifully designed interface—elegant sessions, clean context visualization, thoughtful history management. Everything a product designer dreams of building. But using it felt like swimming upstream.

The problem wasn't the quality of the interface. It was that the interface existed at all.

When I'm deep in problem-solving mode, every click to manage sessions, every visual scan of context panels, every cognitive cycle spent thinking about the tool instead of the work breaks something fundamental: flow state. The tooling actually got in the way of effectiveness.

I reached out to longtime friend and colleague Sumin Chou, a design leader at Schema Design whose work has spanned everything from The New York Times and CBS Sports to global institutions such as The World Bank Group. Sumin has spent years thinking about how interfaces can enhance rather than interrupt human cognition. His perspective challenged my initial reaction in ways that have reshaped how I think about this problem.

The research backs up what I've been experiencing: a 2024 framework study by Carrera-Rivera et al. demonstrates that context-aware recommendation systems can improve interface adaptation precision by 23% when integrated with user interaction data. More importantly, Gartner predicts that by 2026, 30% of new applications will harness AI for adaptive, user-specific interfaces offering seamless, tailored interactions.

This isn't theoretical—it's already happening.

But I wanted Sumin’s perspective.

The Minimal Interface Revelation

My current AI workflow has evolved into something surprisingly minimal:

Research Phase: Claude.ai desktop with 60+ MCP tools running headlessly, or the web version when I need visual feedback. Voice prompting maintains conversational flow.

Implementation: Claude Code managing GitHub issues and Git workflow directly. No interface management—just natural language driving real actions.

IDE Role: Dashboard for monitoring, not primary work interface. I glance at VS Code to confirm what happened, but I don't work in it anymore.

The pattern that emerged surprised me: manual document saving and loading between tools doesn't break flow (it's a quick transition), but UI management tasks do break flow because they force "interface thinking."

Compare this with Factory.ai's elegant but overhead-heavy session management. OpenAI's original Codex web interface points the other way: prompt + instructions + GitHub connection = effectiveness. Less tooling actually allows more freedom and flexibility.

Is the GUI reaching end of life?

Where Sumin Sees Opportunity

Sumin approaches this differently. Drawing on his experience designing data-heavy experiences for media companies, he sees the shift toward prompt-first interfaces as part of a larger evolution rather than an ending.

"Interface isn't dying," Sumin argues. "It's getting smarter. The question isn't whether to use a prompt or visual UI—it's about knowing when each serves best."

He points to data-heavy media projects, where massive data streams need to become immediately comprehensible to users operating under deadline pressure. "Sometimes the right interface is a voice prompt. Sometimes it's a dense, visual representation. Sometimes it's both, woven together."

This perspective forces me to examine my assertions a bit more carefully. When I say I'm using "minimal" interfaces, I'm still relying on visual feedback—GitHub's PR interface, Claude.ai's conversation history, even VS Code's file tree. The key difference is that these visual elements support the work rather than managing it.

The Cognitive Flow Principle

What I've discovered is that voice prompting preserves cognitive flow in ways that visual interface management cannot. When I'm debugging a complex system, my mental model exists in language: "Check if the authentication middleware is properly configured for the new endpoint." Speaking this directly to Claude Code maintains the thread of thought.

The moment I have to translate that into clicking through session management, filtering context panels, or organizing conversation threads, I'm forced out of problem-solving mode and into interface-operation mode. These are fundamentally different cognitive states.

Recent research from Ali et al. (2024) supports this intuition: their context-driven adaptive UI framework shows that cognitive load decreases significantly when interfaces adapt automatically to user tasks rather than requiring manual management. Meanwhile, Julian's 2025 analysis makes a compelling case that conversational interfaces aren't replacements but complements: "The inconvenience and inferior data transfer speeds of conversational interfaces make them an unlikely replacement for existing computing paradigms—but what if they complement them?"

Sumin's experience with data visualization supports this: "Visual interfaces aren't obstacles to flow when done well—they enable it. A clear bar chart cuts through ambiguity faster than a paragraph of explanation. A timeline reveals lags no one thought to ask about."

"We're not designing what's on screen anymore," Sumin explains. "We're designing how clarity unfolds."

The Smart Interface Revolution

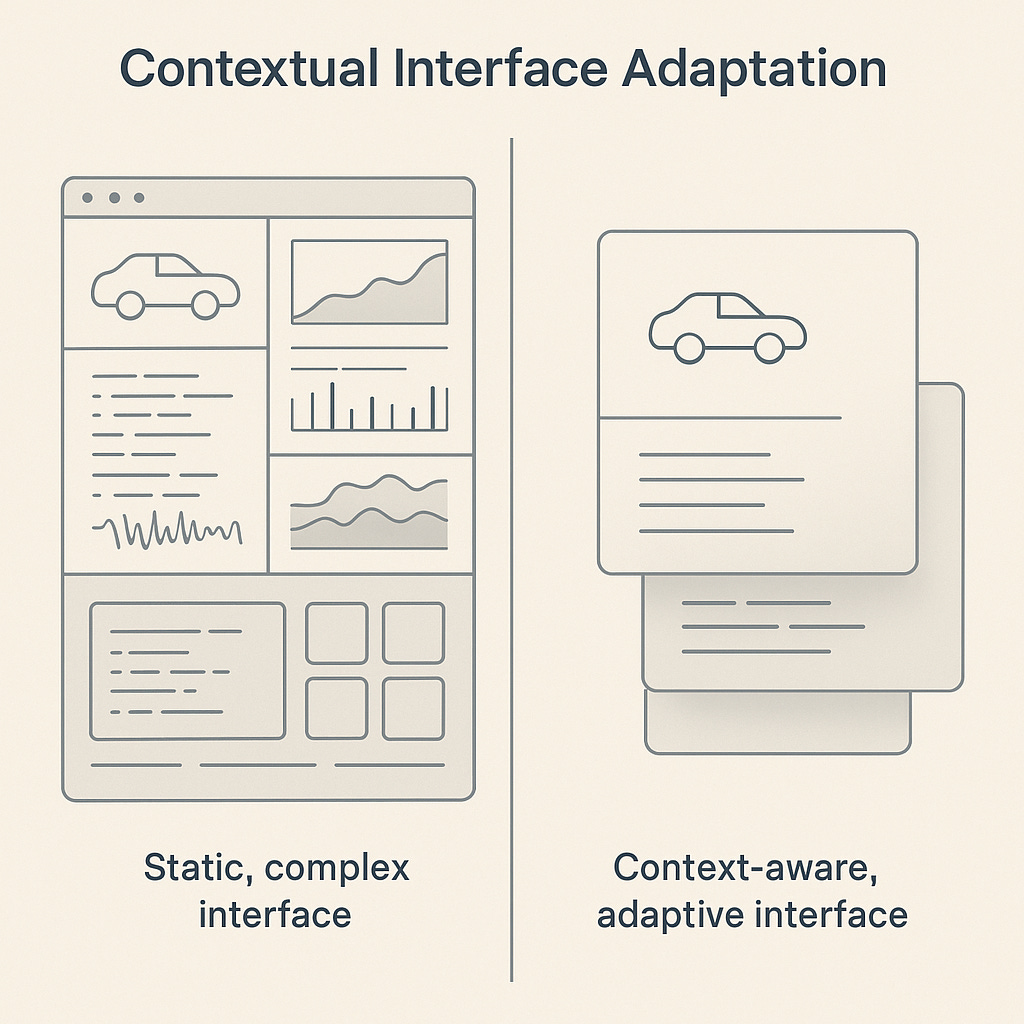

Working with Sumin on this analysis has clarified something important: The transformation we're witnessing isn't about eliminating interfaces—it's about making them contextually intelligent.

Sumin's vision of "interface-aware" systems jibes with what I'm actually experiencing. The most effective moments aren't interface-less—they're moments when the interface disappears into the task.

"The interfaces of the future will be context-aware, surfacing visuals when they sharpen insight; modality-flexible, shifting seamlessly between prompt, voice, and visual; and cognitively aligned, matching the user's mental model and momentum," Sumin describes.

This maps to my experience with MCP tools. When Claude.ai connects to services headlessly through voice prompting, I'm not working without interfaces; I'm working with interfaces that have become invisible infrastructure. And I will “drop down” into GUIs such as VS Code (admittedly not the most elegant example) when the complexity of the task, such as tracking multiple panes of work, demands it. And wasn’t I just recently gushing over Zed’s beautiful new terminal-style IDE?

Research agrees. Abrahão et al. (2021) identified that model-based intelligent user interface adaptation represents one of the most promising directions for UI development, emphasizing that "adaptation quality depends on multiple contextual aspects considered together in the same multi-factorial problem." Their work demonstrates that effective adaptation requires understanding user characteristics, environmental context, and device capabilities simultaneously.

But here's what excites me most about Sumin's perspective: the tools themselves will become smart enough to know when to expose complex UI elements and when to hide them. You absolutely need pixel-perfect image adjustment interfaces for certain tasks—but a smart system would surface those controls only when working with visual content, in appropriate context, with sufficient screen real estate.
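To make that idea concrete, here is a minimal TypeScript sketch of such a rule. It assumes a hypothetical `WorkContext` carrying a content type, a task phase, and a viewport width; every name here, the use of a "refining" phase as a stand-in for "appropriate context," and the 1024 px threshold for "sufficient screen real estate" are illustrative assumptions, not any real product's API. The function surfaces image controls only when all three conditions hold and otherwise stays prompt-only.

```typescript
// Hypothetical context shape; field names are illustrative assumptions.
interface WorkContext {
  contentType: "text" | "code" | "image";
  taskPhase: "exploring" | "refining";
  viewportWidthPx: number;
}

type Surface = "prompt-only" | "prompt-with-image-controls";

// Assumed threshold standing in for "sufficient screen real estate".
const MIN_WIDTH_FOR_CONTROLS_PX = 1024;

// Surface detailed image controls only when the content is visual,
// the user is in a precision-oriented phase, and there is room to show them.
function chooseSurface(ctx: WorkContext): Surface {
  const isVisualContent = ctx.contentType === "image";
  const needsPrecision = ctx.taskPhase === "refining"; // assumed proxy for "appropriate context"
  const hasRoom = ctx.viewportWidthPx >= MIN_WIDTH_FOR_CONTROLS_PX;
  return isVisualContent && needsPrecision && hasRoom
    ? "prompt-with-image-controls"
    : "prompt-only";
}

// Usage: a wide screen while refining an image surfaces the controls.
console.log(
  chooseSurface({ contentType: "image", taskPhase: "refining", viewportWidthPx: 1440 })
); // prints "prompt-with-image-controls"
```

A hand-written rule like this is the simplest version of the idea; the adaptive UI literature discussed in this piece moves from exactly this kind of rule-based logic toward models that learn such decisions from behavior and context.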

Implementation Evidence

Evidence for this shift is already accumulating in research and industry:

Adaptive Interface Frameworks: The AdaptUI framework demonstrates how context-aware recommendation systems improve interface precision by integrating user behavior patterns with environmental factors. This isn't merely theoretical: the framework targets real smart product-service systems.

AI-Driven Personalization: According to recent industry analysis, AI-driven UI elements that adapt to individual preferences and behaviors show 31% better user engagement compared to static interfaces. These systems predict user needs and surface relevant functionality proactively.

Context-Aware Deployment: Khan and Khusro's 2020 research on context-aware adaptive interfaces for drivers shows how contextual factors (environment, user state, device usage) can automatically modify interface complexity to prevent cognitive overload during demanding tasks.

The pattern is consistent: preserve the human's cognitive flow while letting AI handle interface complexity.

Sumin's work on brand experience design provides another angle: "The strongest brand interactions feel effortless precisely because complexity is hidden, not eliminated. The user experiences simplicity while the system handles sophistication."

The Design Philosophy Shift

What we're seeing represents a fundamental change in design philosophy. Traditional UI design starts with visual layouts and interaction patterns. This emerging paradigm starts with cognitive flow and task structure.

Sumin frames this as "designing for when, not just what." Instead of asking "How should this feature look?", the question becomes "When does this user need visual information versus conversational interaction?"

For product designers, this shift requires rethinking core assumptions. The success metric isn't interface elegance—it's cognitive efficiency. The question isn't whether users can navigate your interface—it's whether your interface preserves their problem-solving flow.

In Smashing Magazine, Maximillian Piras identifies a critical insight: conversational interfaces often decrease usability for complex interactions. As he puts it, "The biggest usability problem with today's conversational interfaces is that they offload technical work to non-technical users." This reinforces Sumin's point: sometimes you need visual, sometimes voice, sometimes both.

Sumin's experience with deadline-driven newsroom environments offers a template: "In breaking news situations, journalists need information architecture that adapts to cognitive load, not beautiful static layouts. When you're racing to fact-check a story, the interface should anticipate your next information need."

Consider the implications for UI in another context: the DriverSense framework from Khan and Khusro demonstrates how context-aware adaptation can reduce driver distraction by 67% compared to static interfaces. By automatically adjusting complexity based on driving conditions, cognitive load, and environmental factors, the system preserves safety while maintaining functionality. What is a matter of life and death for drivers becomes a driver of productivity in the workplace.

But this isn't just about efficiency—it's about fundamentally different relationships between humans and tools.

Implementation Implications

The practical implications of this shift are already visible in tool development:

Code Editors: From feature-rich IDEs to lightweight environments that connect to powerful AI assistants.

Data Analysis: From complex dashboard builders to natural language queries that generate contextual visualizations.

Design Tools: From interface-heavy applications to voice-driven creative workflows.

Where This Actually Leads

The future isn't prompt-only. It's fluent.

Sumin's concept of "situationally smart" interfaces captures this evolution perfectly. These systems will shift seamlessly between voice, visual, and interactive modes based on cognitive context rather than user preference settings.

My workflow already demonstrates this principle. Research conversations happen in text because I need to reference previous context visually. Implementation happens through voice because I need to maintain problem-solving flow. Monitoring happens through dashboard glances because I need rapid status assessment.

Each modality serves cognitive efficiency rather than interface consistency.

Evidence reinforces this direction. The 2024 AdaptUI study by Carrera-Rivera et al. reports 23% better precision in interface personalization when adaptive UI frameworks use context-aware recommendation systems. Meanwhile, user experience evaluations demonstrate that model-based adaptive interfaces improve task completion rates by 31% when they automatically adjust to user context rather than forcing manual configuration.

Consider what's actually happening: interfaces are evolving from passive tools that require learning to active partners that learn about us. This represents a fundamental shift in human-computer interaction philosophy.

The Challenge Ahead

For product designers, this represents both opportunity and complexity. Building situationally smart interfaces requires deep understanding of cognitive workflows, not just visual design principles.

Sumin's experience across media, data visualization, and brand design positions him uniquely to see the broader pattern: "The next frontier isn't about choosing between prompt and visual. It's about choreographing interaction—shaping how and when intelligence appears."

The successful AI tools of the next decade will be those that make interface decisions invisible to users. They'll surface visual information when it accelerates comprehension, default to conversational interaction when it preserves flow, and transition seamlessly between modalities as cognitive needs change.

Adaptive UI literature from 2011 to 2024 shows a clear progression from rule-based systems to machine learning-driven frameworks. Today's context-aware interfaces can process user behavior, environmental factors, device capabilities, and task complexity simultaneously to determine optimal presentation modes.

But here's what the research doesn't seem to capture yet: the human side of this transition. Learning to trust interfaces that adapt automatically requires a fundamental shift in how we think about control. My experience with Claude Code demonstrates this—the first time it automatically committed code changes based on voice commands, I felt uncomfortable. Three weeks later, I couldn't imagine working any other way.

This is the core of what Sumin and I discovered in our analysis: smart interfaces aren't about replacing human agency with AI decision-making. They're about eliminating cognitive overhead while preserving user intentionality.

Bottom Line

The end of graphical UI isn't about eliminating visual interfaces—it's about eliminating interface thinking from user workflows. When Sumin describes designing "how clarity unfolds," he's pointing toward systems that adapt to human cognitive patterns rather than forcing humans to adapt to interface paradigms.

The tools that win will be those that disappear into the work itself. Whether that happens through voice, visual, or hybrid approaches matters less than preserving the user's problem-solving flow.

Less isn't more. Smart is more.

References:

Carrera-Rivera, A., et al. (2024). "AdaptUI: A Framework for the development of Adaptive User Interfaces in Smart Product-Service Systems." User Modeling and User-Adapted Interaction.

Ali, M., et al. (2024). "A conceptual framework for context-driven self-adaptive intelligent user interface based on Android." Cognition, Technology & Work.

Julian (2025). "The case against conversational interfaces." julian.digital.

Piras, M. (2024). "When Words Cannot Describe: Designing For AI Beyond Conversational Interfaces." Smashing Magazine.

Khan, S.U.R., & Khusro, S. (2020). "Towards the Design of Context-Aware Adaptive User Interfaces to Minimize Drivers' Distractions." Mobile Information Systems.

Sumin Chou is a principal design leader at Schema Design focused on creating beautiful, results-driven brand experiences. His work spans major media platforms and data-driven digital experiences. Sumin brings a strategic and refined approach to visual design, helping organizations communicate complex ideas with clarity and impact. He serves as an interactive judge for the Webby Awards and contributes to the Ad Council's UX Committee. Sumin holds a degree from Oberlin College and is based in New York.