Sometimes timing is a funny thing in tech. I just finished reviewing Factories.ai, which perfectly exemplifies what happens when you build tool-based experiences for AI workflows: clunky interfaces, fragmented interactions, the kind of UX that makes you wonder if anyone actually used the thing before shipping it.

Then along comes Zed.

I'll be honest: I wasn't familiar with this editor before diving in. But after spending serious time with it on a real testing framework conversion, I'm convinced they've cracked something fundamental about AI-first development environments. They didn't start with an editor and add AI—they started with the AI session and built an editor around it.

Unlike a web-based editor, this fast, responsive desktop editor allows complete window flexibility.

What Zed Actually Is

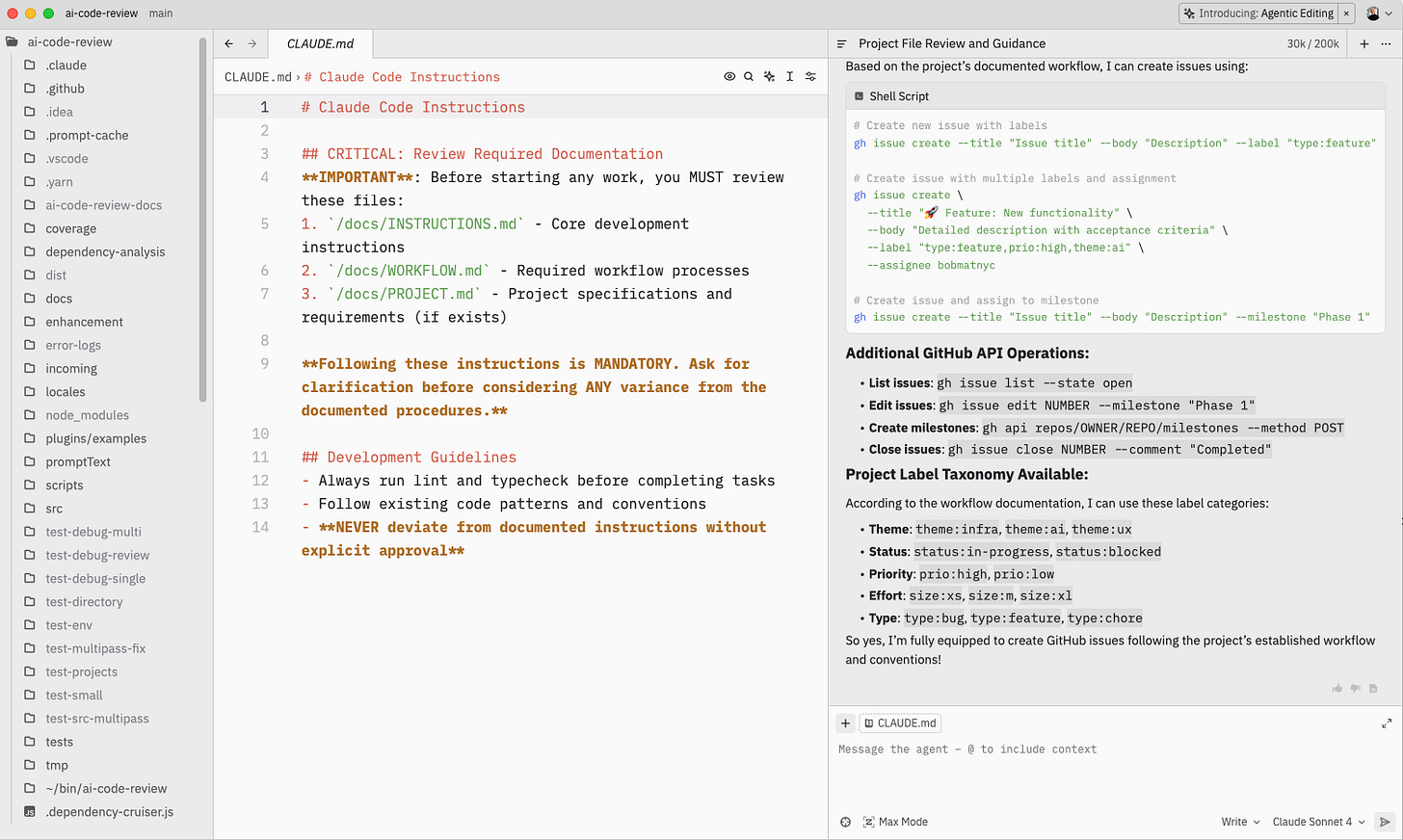

Zed positions itself as a task-focused, minimalist editor built around three configurable pane areas. You get an editor window (which you can dismiss entirely if you're doing pure AI work), a context-aware navigation pane that toggles between file trees, code outlines, and git status, and, crucially, a dedicated AI session view where the real work happens.

The interface feels deliberately uncluttered. After years of VS Code's extension sprawl and notification chaos, Zed's clean lines are refreshing. But don't mistake minimalism for lack of power.

The AI Experience: Claude-Quality Results

Zed offers multiple model choices, but I worked primarily with Claude Sonnet. The responses matched the quality I've seen from Claude Code in VS Code: same reasoning patterns, same ability to understand complex instructions.

I put this to the test with a real workflow. I fed Zed my Claude.md reference file (the one I've customized with project instructions, file references, and development workflows) and asked it to handle a testing framework conversion. Not only did it parse and follow the instructions correctly, but it seamlessly worked with the GitHub API to create issues, managed Git branching, and maintained context across a complex, multi-step process.

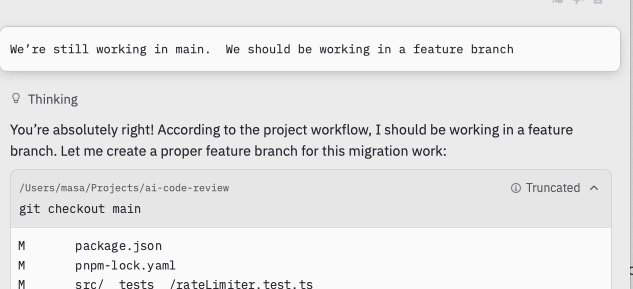

It did make one mistake initially: it forgot to work in a feature branch. But Zed recovered smoothly by re-scoping all changes to the proper branch structure. A small miss, but handled well.

Nice recovery, Zed.

What impressed me most: when I asked Zed to commit changes, it automatically re-reviewed the project instructions I'd provided earlier. This is context management that Claude often misses—maintaining awareness of core project requirements across long sessions. The fact that Zed preserves and actively references foundational instructions shows sophisticated session state management.

Even more impressive: Zed took my workflow instructions seriously and kept working on CI/CD test requirements until they actually passed. Unlike Claude, which tends to give up when tests fail repeatedly, Zed persisted until it got them working. Good thing too—I'd been skipping those failing tests for weeks. Zed basically forced me to clean up technical debt I'd been avoiding.

In terms of raw AI capability, this is Claude Code territory. But the interface makes all the difference.

The Streaming Session Revolution

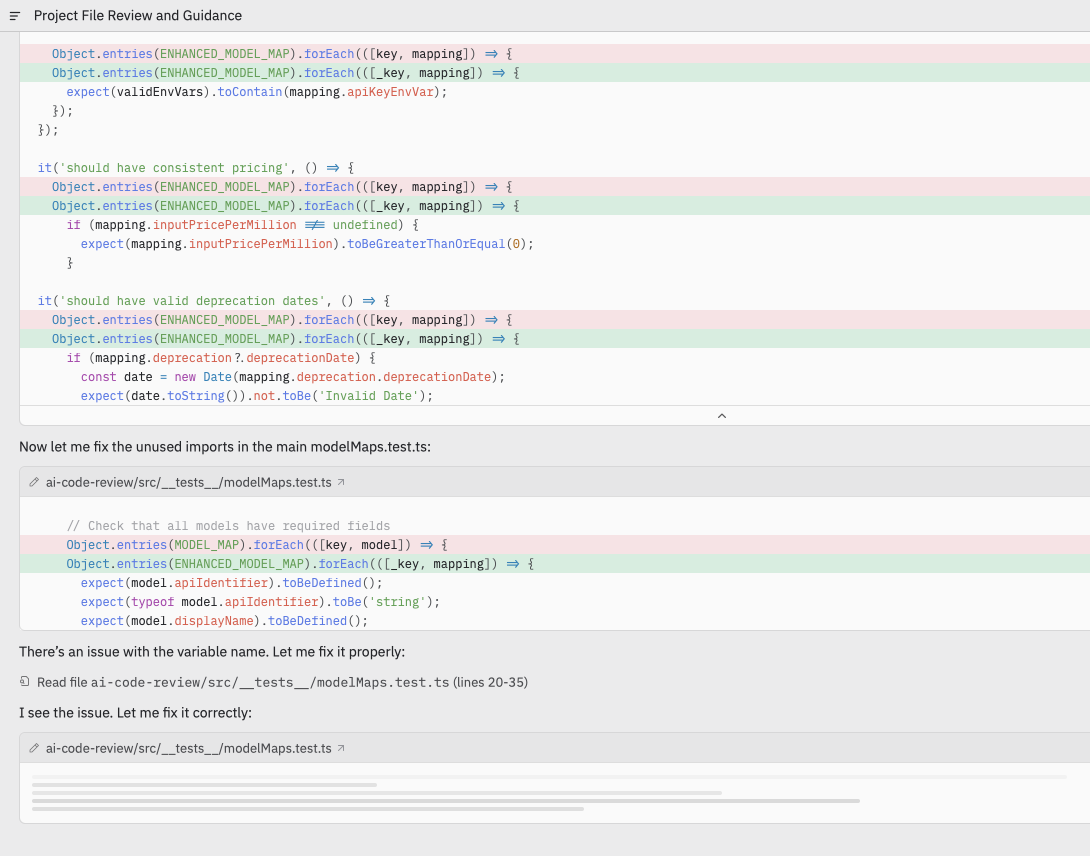

Here's where Zed fundamentally changes the game. Unlike static diffs, Zed streams each micro-change as a real-time breadcrumb trail. You're not just watching aggregate results—you're seeing the AI's thought process unfold sequentially.

Each edit appears granularly: "Updating function signature," followed by the actual change, then "Adding error handling," with its corresponding code. Unlike traditional editor-based changes where you can't trace which request triggered which modification, Zed's session view maintains perfect lineage.

But here's the killer feature: full terminal session capture. When Zed runs tests, builds, or any command-line operation, you see the entire terminal history embedded in the session. Each interactive run becomes a timestamped moment you can scroll back to and review.

This is profound. You're not just getting code changes—you're getting the complete development context. Test failures, build outputs, deployment logs, all integrated into a coherent narrative of what the AI actually did.
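To make the lineage idea concrete, here's a minimal sketch of a session log in which every edit and terminal run is tagged with the prompt that triggered it. This is not Zed's implementation; `Session`, `SessionEvent`, and the sample command are hypothetical names I made up for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SessionEvent:
    kind: str        # "prompt", "edit", or "terminal"
    summary: str     # short label shown in the session view
    request_id: int  # the prompt that triggered this event
    body: str = ""   # diff text or captured terminal output


@dataclass
class Session:
    events: List[SessionEvent] = field(default_factory=list)
    next_request_id: int = 1

    def prompt(self, text: str) -> int:
        """Record a user prompt and return its request id."""
        request_id = self.next_request_id
        self.next_request_id += 1
        self.events.append(SessionEvent("prompt", text, request_id))
        return request_id

    def record(self, request_id: int, kind: str, summary: str, body: str = "") -> None:
        """Append an edit or terminal run, tagged with its originating prompt."""
        self.events.append(SessionEvent(kind, summary, request_id, body))

    def lineage(self, request_id: int) -> List[SessionEvent]:
        """Everything the assistant did in response to one prompt, in order."""
        return [
            e for e in self.events
            if e.request_id == request_id and e.kind != "prompt"
        ]


if __name__ == "__main__":
    session = Session()
    first = session.prompt("Convert the test suite")
    session.record(first, "edit", "Updating function signature", "- old\n+ new")
    session.record(first, "terminal", "run tests", "1 failed")  # hypothetical output
    for event in session.lineage(first):
        print(event.kind, "|", event.summary)
```

Because the log is append-only and every entry carries a `request_id`, scrolling back through the session and asking "which request caused this change?" is a simple filter rather than guesswork.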

If I were Anthropic, I'd be looking at this interface as a superior paradigm to plain terminal interactions. The session view provides visibility that transforms AI coding from blind trust to informed collaboration.

One fix needed: scroll position jumps when reviewing past edits.

Streaming diff snippets.

Architecture Enabling AI Innovation

What makes this possible is that Zed didn't start with an existing editor and bolt on AI features. They built everything from scratch in Rust with AI workflows as a first-class consideration.

The team completely rewrote their approach from Atom's JavaScript/Electron foundation. Nathan Sobo's team implemented what they call a "custom streaming diff protocol that works with Zed's CRDT-based buffers to deliver edits as soon as they're streamed from the model." You see the model's output token by token, which creates the granular change tracking I described above.
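Here's a rough sketch of what "deliver edits as soon as they're streamed" means in practice: each edit is applied the moment it arrives, and the intermediate buffer is surfaced right away instead of waiting for one aggregate diff. I'm assuming a plain Python string as a stand-in for Zed's CRDT-based buffer, and `Edit` and `apply_streamed` are hypothetical names, not Zed's protocol.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Edit:
    label: str  # human-readable step, e.g. "Updating function signature"
    start: int  # offset into the buffer as it stands when the edit arrives
    end: int
    text: str   # replacement for buffer[start:end]


def apply_streamed(buffer: str, edits: Iterable[Edit]) -> Iterator[Tuple[str, str]]:
    """Apply each edit as soon as it arrives and yield the new buffer state."""
    for edit in edits:
        buffer = buffer[:edit.start] + edit.text + buffer[edit.end:]
        yield edit.label, buffer


if __name__ == "__main__":
    source = "def f(x):\n    return x\n"
    stream = [
        Edit("Updating function signature", 6, 7, "x, y"),
        Edit("Using the new parameter", 24, 25, "x + y"),
    ]
    for label, state in apply_streamed(source, stream):
        print(f"--- {label}")
        print(state)
```

Since `apply_streamed` is a generator, a UI consuming it can render each labeled step as it is produced, which is the breadcrumb effect the session view gives you.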

Their GPUI framework treats the entire interface like a video game, rendering everything on the GPU. This isn't just for performance—it enables the seamless integration of terminal sessions, code changes, and AI interactions into a single, coherent stream.

By owning the full stack from Tree-sitter parsing to GPUI rendering, they could architect tight integration between their "fast native terminal" and "language-aware task runner and AI capabilities." This is why terminal sessions appear seamlessly in the AI session view rather than feeling like separate tools awkwardly connected.

The contrast with Atom is striking. Where Atom used "an array of lines, a JavaScript array of strings" for the buffer, Zed uses "a multi-thread-friendly snapshot-able copy-on-write B-tree that indexes everything you can imagine." This architectural foundation enables the real-time collaboration and streaming AI features that define Zed's experience.
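To show why copy-on-write matters here, this is a deliberately tiny sketch: a two-level structure of immutable chunks, not a real B-tree and certainly not Zed's buffer. The names `Buffer`, `CHUNK_SIZE`, and `lines_of` are my own. The point is that a snapshot is just a reference, and an edit copies only the chunk it touches while sharing the rest.

```python
from typing import Iterable, List, Tuple

Chunk = Tuple[str, ...]
CHUNK_SIZE = 2  # tiny on purpose so the sharing is easy to see


class Buffer:
    def __init__(self, lines: Iterable[str]) -> None:
        lines = list(lines)
        self.chunks: Tuple[Chunk, ...] = tuple(
            tuple(lines[i:i + CHUNK_SIZE])
            for i in range(0, len(lines), CHUNK_SIZE)
        )

    def snapshot(self) -> Tuple[Chunk, ...]:
        """Return an immutable view; no lines are copied."""
        return self.chunks

    def set_line(self, index: int, text: str) -> None:
        """Replace one line, rebuilding only the chunk that contains it."""
        chunk_index, line_index = divmod(index, CHUNK_SIZE)
        old = self.chunks[chunk_index]
        new = old[:line_index] + (text,) + old[line_index + 1:]
        self.chunks = (
            self.chunks[:chunk_index] + (new,) + self.chunks[chunk_index + 1:]
        )


def lines_of(snapshot: Tuple[Chunk, ...]) -> List[str]:
    """Flatten a snapshot back into a list of lines."""
    return [line for chunk in snapshot for line in chunk]


if __name__ == "__main__":
    buf = Buffer(["a", "b", "c", "d"])
    before = buf.snapshot()
    buf.set_line(0, "A")
    after = buf.snapshot()
    print(lines_of(before))       # the old snapshot is unaffected
    print(lines_of(after))
    print(before[1] is after[1])  # the untouched chunk is shared, not copied
```

In this toy version the top-level tuple is still copied on every edit; a tree with more levels would reduce that to a handful of nodes along one path. Either way, a background thread can keep reading an old snapshot while new edits land, which is the property the quote is describing.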

Performance and Polish

Zed's Rust foundation delivers on its performance promises. The editor is genuinely fast—no spinning wheels, no stuttered typing, no lag switching between files. Coming from Electron-based tools, the responsiveness feels almost shocking.

I found myself closing the traditional editor pane entirely, working in a two-screen setup with git/tree view on the left and the AI session on the right. And here's the concerning part: I might prefer this UX to VS Code with Claude Code integration.

There are also lots of little niceties. When you “copy relative path” in the file browser, Zed automatically adds it to the context. It also lets you interject changes mid-stream: it handles them automatically, folds them into its workflow, and then resumes the task it was working on before.

One UX improvement I'd love: show the current git branch consistently, regardless of whether you're in code view or git view. Small detail, but it matters for workflow awareness.

That said, I'm convinced enough that I'm already planning to use Zed for several upcoming projects.

Pricing Reality Check

Zed's pricing is refreshingly straightforward: $20/month for 500 prompts, then usage-based billing beyond that (at the time of writing). For context, I converted roughly 50% of the testing framework for a substantial codebase (47,000+ lines of TypeScript) using 150 prompts.

If you're doing heavy AI-first development (100% prompt-driven, as I was in my testing), you'd probably exhaust the 500 prompts within a week. But for mixed human/AI workflows, this pricing feels sustainable and affordable.
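For a back-of-the-envelope check on those numbers, here's a small sketch. The plan figures and my usage come from above; the assumption that the second half of the conversion costs about as many prompts as the first is mine, and the function names are just for illustration.

```python
PLAN_PROMPTS = 500      # prompts included per month
PLAN_PRICE_USD = 20     # monthly price at the time of writing
PROMPTS_USED = 150      # prompts I spent
FRACTION_DONE = 0.5     # roughly half the conversion finished


def estimate_total_prompts(used: int, fraction_done: float) -> float:
    """Linear extrapolation: assumes the remaining work costs the same per unit."""
    return used / fraction_done


def daily_rate_to_exhaust(plan_prompts: int, days: int) -> float:
    """Prompts per day needed to use up the whole plan in `days` days."""
    return plan_prompts / days


if __name__ == "__main__":
    total = estimate_total_prompts(PROMPTS_USED, FRACTION_DONE)
    print(f"Estimated prompts for the full conversion: {total:.0f}")
    print(f"Share of the monthly plan: {total / PLAN_PROMPTS:.0%}")
    print(f"Price per included prompt: ${PLAN_PRICE_USD / PLAN_PROMPTS:.2f}")
    print(f"Rate to burn the plan in a week: {daily_rate_to_exhaust(PLAN_PROMPTS, 7):.0f}/day")
```

Under that linear assumption, the full conversion would fit inside a single month's allotment, while burning through the plan in a week takes on the order of 70 prompts a day.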

I suspect they'll adjust pricing upward or offer higher-tier prompt packages as adoption grows. The current model feels designed to attract users rather than maximize revenue.

Bottom Line Assessment

Zed has earned a spot among my favorite new development tools. It might be superior to Augment, and it's definitely a better AI coding experience than traditional editor-based approaches.

The streaming session view alone justifies trying Zed. Watching AI development unfold with full context—code changes, terminal outputs, git operations—provides insight into AI reasoning that other tools simply can't match.

Is it perfect? No. The scroll behavior needs fixing, pricing will likely increase, and you're locked into their ecosystem rather than enhancing your existing workflow.

But they've solved a fundamental UX problem that most AI coding tools ignore: how do you make AI development visible, trackable, and collaborative rather than mysterious and opaque?

What This Means for AI Development Tools

Zed proves that AI coding tools need rethinking from the ground up, not just bolting AI features onto existing editors. The session-based paradigm offers advantages that traditional editor-based or terminal-based approaches can't match.

More importantly, it demonstrates that UX innovation matters as much as AI capability. Claude's reasoning power paired with Zed's interface creates a development experience that's genuinely superior to either component alone.

For developers frustrated with existing AI coding workflows, Zed deserves serious evaluation. For established players in this space, it should be a wake-up call about the importance of interface design in AI-first development environments.

Zed doesn't just add AI—it rethinks what working with it should feel like.