TL;DR

Code alone isn’t sufficient for AI agents - they need synthetic documentation artifacts explaining architecture, patterns, and operations beyond what inline comments provide

Documentation structure measurably affects AI accuracy - IBM’s study of 669 developers shows up to 47% improvement in response accuracy with well-structured documentation

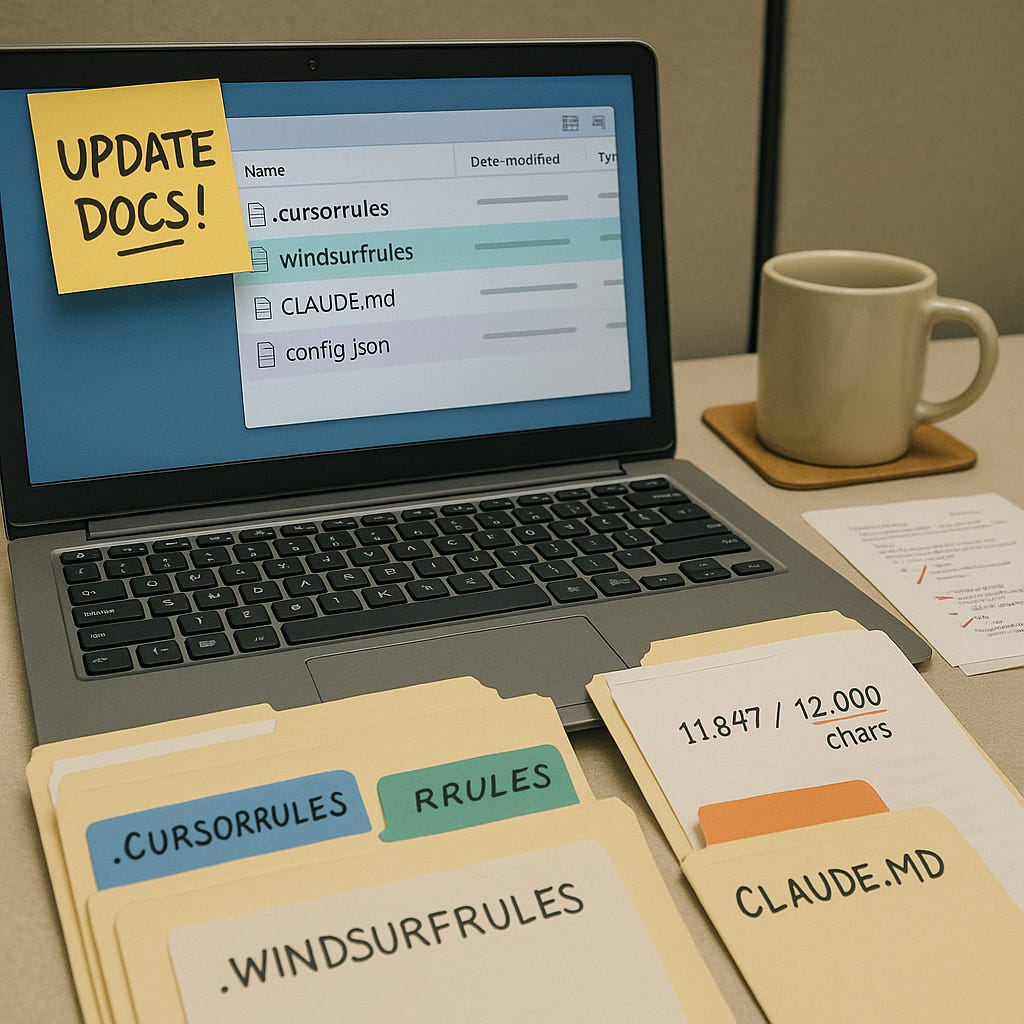

Every major tool uses configuration files - Cursor’s .cursorrules, Windsurf’s .windsurfrules, Claude Code’s CLAUDE.md - all have strict character limits (6K-12K) forcing extreme concision

Vector search often outperforms grep for semantic understanding but some scenarios favor grep - Augment’s approach achieves 200K token context by deprioritizing code patterns their models recognize from training data

Most common mistakes I’ve seen: Not updating documentation frequently enough, and not providing enough detail in specifications

Practical approach: Update documentation nearly every session, link second-level indexes to special files, document project-specific operations thoroughly

Documentation for AI agents works completely differently than documentation for humans. You can’t just write good inline comments and hope your agents figure it out. They need contextual documentation—high-level synthetic artifacts that explain how pieces fit together.

Here’s what I’ve learned: Code alone isn’t enough. Agents need synthetic documentation artifacts explaining architecture, patterns, and operations.

Proper documentation means your agent consistently finds the right files and understands project conventions. Without it, you’re re-explaining basic project structure every session, or watching agents modify the wrong files because they couldn’t distinguish between similar implementations across different modules.

My Documentation Workflow (What Works)

I’ve developed three practices that keep my agents productive:

First: Update often. My claude-mpm has a /mpm-init command that initializes and updates documentation based on source. I run it nearly every session—not because I’m obsessive, but because watching agents struggle with outdated context taught me this lesson the hard way. Simple refactoring tasks that should take minutes stretch to hours when the agent thinks you’re using v2.0 APIs but you’ve moved to v3.0.

Second: Keep it rational. Don’t over-create docs. Link second-level indexes to your AI coder’s special files like CLAUDE.md. Think map, not encyclopedia. I’ve seen teams create 50-page documentation files that agents never read because they exceed context budgets.

Third: Document project-specific coding styles and especially operations. How to deploy locally. How to work with your hosting platforms—Vercel, Railway, AWS, Digital Ocean. One of my projects has three different deployment targets with different environment variables. Without explicit documentation, agents consistently deployed to the wrong environment or forgot critical configuration.

Every Tool Has Its Own Documentation Language

Cursor pioneered the .cursorrules format but evolved it into .cursor/rules/*.mdc using Markdown with metadata. These MDC files support glob patterns for path-specific rules—different standards for frontend versus backend. Rules can be “Always” included, “Auto Attached” based on file patterns, “Agent Requested” when the AI decides it’s relevant, or “Manual” only when explicitly mentioned.
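Here is a minimal sketch of how path-scoped rules like these could be selected for a given file. This is not Cursor's implementation: the `Rule` class, the lowercase mode names, the example globs, and the use of Python's `fnmatchcase` for matching are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class Rule:
    # Hypothetical in-memory representation of one rule file.
    name: str
    mode: str  # "always", "auto_attached", "agent_requested", or "manual"
    globs: list[str] = field(default_factory=list)


def rules_for_file(
    rules: list[Rule], path: str, mentioned: frozenset[str] = frozenset()
) -> list[str]:
    """Return the names of rules that would apply when editing `path`."""
    selected = []
    for rule in rules:
        if rule.mode == "always":
            selected.append(rule.name)
        elif rule.mode == "auto_attached" and any(
            fnmatchcase(path, pattern) for pattern in rule.globs
        ):
            selected.append(rule.name)
        elif rule.mode == "manual" and rule.name in mentioned:
            selected.append(rule.name)
        # "agent_requested" rules are left to the model, so they are skipped here.
    return selected


# Example rule set (hypothetical names and paths).
RULES = [
    Rule("general", "always"),
    Rule("frontend", "auto_attached", ["src/frontend/*.tsx"]),
    Rule("backend", "auto_attached", ["src/backend/*.py"]),
    Rule("release-checklist", "manual"),
]
```

With this rule set, editing a file under `src/frontend/` picks up the general and frontend rules but never the backend ones, which is the whole point of path scoping.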

The kicker? Strict character limits. Individual files capped at 6,000 characters with a combined total of 12,000 characters across all rules. Teams need to be extremely concise—distilling entire coding philosophies into tightly constrained guidance.
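A budget like this is easy to enforce with a small check. The sketch below uses the 6,000 and 12,000 character figures quoted above; the function name and the idea of passing rule text as a dictionary are my own assumptions, not part of any tool.

```python
PER_FILE_LIMIT = 6_000   # per-file cap quoted above
COMBINED_LIMIT = 12_000  # combined cap quoted above


def check_rule_budget(rule_files: dict[str, str]) -> list[str]:
    """Return human-readable problems for rule texts keyed by file name."""
    problems = []
    for name, text in sorted(rule_files.items()):
        if len(text) > PER_FILE_LIMIT:
            problems.append(f"{name}: {len(text)} chars exceeds {PER_FILE_LIMIT}")
    total = sum(len(text) for text in rule_files.values())
    if total > COMBINED_LIMIT:
        problems.append(f"combined: {total} chars exceeds {COMBINED_LIMIT}")
    return problems
```

An empty list means the rules fit. Running something like this before committing rule changes surfaces the overflow before the tool has to deal with it.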

Windsurf uses .windsurfrules files with four activation modes: Manual (via @mention), Always On, Model Decision (AI determines relevance), and File Pattern (glob-based). But what sets it apart is the three-pillar system: Rules for coding standards, Memories for learned patterns (automatically generated during coding), and Workflows for repeatable automation. The system learns over time, reducing how often you repeat yourself.

GitHub Copilot recently added .github/copilot-instructions.md for repository-wide guidance. It supports path-specific instructions with YAML frontmatter specifying glob patterns, and it works across Copilot Chat in IDEs, on GitHub.com, and with the Copilot coding agent.

Claude Code takes a hierarchical approach with CLAUDE.md files that can be nested: in monorepos, both root and subdirectory instructions apply simultaneously. It also supports .claude/commands/*.md for custom slash commands and hooks for lifecycle automation. Anthropic emphasizes keeping these instructions concise and human-readable since they’re prepended to every prompt.
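To make the nesting concrete, here is a rough sketch of which instruction files would apply to a file deep in a monorepo. It only models the "root plus subdirectory" idea described above. The function name, the root-first ordering, and the example paths are assumptions, not Claude Code's actual loading logic.

```python
from pathlib import PurePosixPath


def instruction_files(
    target: str, existing: set[str], name: str = "CLAUDE.md"
) -> list[str]:
    """List instruction files from the repo root down to the target's directory.

    `target` is a repo-relative path to the file being edited; `existing`
    is the set of repo-relative paths that exist in the repository.
    """
    directory = PurePosixPath(target).parent
    # `parents` runs from nearest to farthest; reverse so the root comes first.
    chain = [directory, *directory.parents][::-1]
    found = []
    for folder in chain:
        candidate = str(folder / name)
        if candidate in existing:
            found.append(candidate)
    return found
```

For a file in `packages/api/src/`, this returns the root `CLAUDE.md` followed by `packages/api/CLAUDE.md`, and ignores instruction files that belong to sibling packages.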

Augment Code uses .augmentignore following .gitignore syntax but relies more on its automatic context engine than explicit instruction files. The platform maintains a personal, real-time index for each developer that updates within seconds—versus competitors’ 10-minute delays.

Continue is the most flexible, supporting configuration through config.yaml files with rules in .continue/rules/ directories. Rules can be loaded locally or shared via Continue Hub, creating a marketplace of community standards.

The pattern across all tools: these instructions are prepended to every AI interaction, consuming context window space. Teams must distill their coding philosophy into extremely concise guidance—a constraint that forces clarity and specificity.

Vector Search Changed Everything (But Grep Still Has a Place)

Traditional grep can only find literal string matches. In typical agent workflows, this burns 40% more tokens than semantic approaches—searching for every variation of a function name across files instead of understanding you’re looking for related functionality.

Modern tools implement retrieval-augmented generation (RAG) systems that understand code relationships, not just text similarity.

Cursor’s indexing uses Merkle trees to detect changes efficiently—computing a root hash for the entire codebase, then incrementally updating only changed files every 10 minutes. Code is split using Abstract Syntax Tree parsing, not naive character chunking, preserving semantic units like classes and functions. Each chunk receives vector embeddings stored in Turbopuffer with obfuscated file paths for privacy.
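The Merkle tree idea is worth seeing in miniature. The sketch below hashes files and directories so that an unchanged subtree can be skipped with a single comparison. It is an illustration of the general technique only: the SHA-256 choice, the in-memory dictionary of file contents, and all function names are assumptions, and it says nothing about how Cursor actually stores or syncs its tree.

```python
import hashlib


def sha(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def parent(path: str) -> str:
    return path.rsplit("/", 1)[0] if "/" in path else ""


def depth(path: str) -> int:
    return 0 if path == "" else path.count("/") + 1


def merkle(files: dict[str, bytes]) -> dict[str, str]:
    """Hash every file and directory; the key "" holds the root hash.

    `files` maps repo-relative paths (with "/" separators) to contents.
    """
    hashes = {path: sha(content) for path, content in files.items()}
    # Collect every ancestor directory, including the root "".
    dirs = {""}
    for path in files:
        parts = path.split("/")[:-1]
        for i in range(1, len(parts) + 1):
            dirs.add("/".join(parts[:i]))
    # Hash the deepest directories first so children are ready for parents.
    for folder in sorted(dirs, key=depth, reverse=True):
        children = sorted(p for p in hashes if p != folder and parent(p) == folder)
        payload = "".join(f"{p}:{hashes[p]}\n" for p in children)
        hashes[folder] = sha(payload.encode())
    return hashes


def changed(old: dict[str, str], new: dict[str, str], node: str = "") -> list[str]:
    """Return changed files, descending only into subtrees whose hash differs.

    Deleted files are ignored here to keep the sketch short.
    """
    if old.get(node) == new.get(node):
        return []  # identical subtree: skip everything under it
    children = sorted(p for p in new if p != node and parent(p) == node)
    if not children:
        return [node]  # a file whose content hash changed (or a new file)
    result = []
    for child in children:
        result.extend(changed(old, new, child))
    return result
```

If the two root hashes match, nothing needs re-indexing. If they differ, only the directories on the path to the modified file get inspected, which is why the approach scales to large codebases.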

GitHub Copilot’s new embedding model delivered a 37.6% improvement in retrieval quality, along with 110% better acceptance for C# and 113% for Java. The model is 2x faster and requires 8x less memory. The gains came from hard negatives training, which teaches the model to distinguish “almost right” from “actually right” context.

Augment developed proprietary embedding models specifically trained for code understanding. Their models identify helpful context rather than textually similar content. The key innovation: deprioritizing code patterns their models already learned during training. If code matches a popular open-source library implementation, the system recognizes the base LLM likely understands it from training data, so it retrieves more novel, project-specific context instead. This selective retrieval approach enabled Augment to achieve 200,000 token effective context capacity—twice that of comparable tools operating at similar token limits.

The vector search uses Approximate Nearest Neighbor algorithms like HNSW graphs. Augment’s quantized vector search is 40% faster on 100M+ line codebases while maintaining greater than 99.9% parity with brute-force search. By reducing embeddings to compact bit vectors, they cut CPU time from ~2 seconds to under 200ms.
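To show why bit vectors are so cheap to compare, here is a toy version of binary quantization with Hamming distance. The sign-based quantization scheme, the function names, and the four-dimensional example vectors are assumptions for illustration. Augment has not published this code, and a production system would typically rerank the top candidates with full-precision embeddings.

```python
def quantize(vector: list[float]) -> int:
    """Pack the sign of each dimension into one bit of an integer."""
    bits = 0
    for value in vector:
        bits = (bits << 1) | (1 if value > 0 else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count differing bits between two packed vectors."""
    return bin(a ^ b).count("1")


def nearest(query: list[float], index: dict[str, int], k: int = 1) -> list[str]:
    """Return the k entries whose bit vectors are closest to the query."""
    packed = quantize(query)
    ranked = sorted(index, key=lambda name: (hamming(packed, index[name]), name))
    return ranked[:k]
```

Each comparison is one XOR and a bit count instead of a floating-point dot product over hundreds of dimensions, which is where the CPU savings come from.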

Windsurf’s M-Query technique appears to use multiple parallel queries, cross-referencing different code perspectives and reranking based on multiple criteria. Users consistently report it finds relevant files on the first try where Cursor needs “additional keywords and prodding.”

Worth noting: some evidence suggests pure lexical search can outperform vector search for code retrieval in certain scenarios. Claude Code deliberately uses grep-only search, arguing it’s more reliable despite burning more tokens. The emerging consensus: hybrid approaches combining semantic vector search with traditional BM25 keyword search deliver the best results.
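One common way to combine a keyword ranking with a semantic ranking is reciprocal rank fusion. The sketch below assumes you already have two ranked lists of file names (say, one from BM25 and one from a vector index). The fusion method and the constant `k=60` are my choice for illustration, not something any of the tools above have confirmed using.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each appearance adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] = scores.get(doc, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda doc: (-scores[doc], doc))
```

Files that both retrievers agree on float to the top, while a file found by only one retriever still makes the list. That is the practical appeal of hybrid search: neither method's blind spots dominate.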

Context Windows Are the Real Constraint

Modern models offer 128K-200K token windows (GPT-4 Turbo, Claude 3.5 Sonnet) with some reaching 1M tokens (Gemini 2.5 Pro). But these massive windows create problems: filling them is expensive, processing takes longer, and more context doesn’t always mean better results.

Cursor implements automatic context summarization when approaching limits. For large files, it defaults to reading the first 250 lines, occasionally auto-extending by another 250. Specific searches return a maximum of 100 lines. For truly large files, Cursor shows only structural elements (function signatures, classes, methods), and the model can expand specific sections if needed.
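A structural outline of this kind is straightforward to approximate for Python sources with the standard `ast` module. The sketch below is only an illustration of the idea: the output format and function names are assumptions, the 250-line default simply mirrors the figure quoted above, and Cursor's own outlining works across languages in ways this does not attempt.

```python
import ast


def outline(source: str) -> list[str]:
    """Return class and function signatures in source order, without bodies."""
    entries: list[str] = []

    def visit(node: ast.AST, indent: int) -> None:
        pad = "  " * indent
        for child in ast.iter_child_nodes(node):
            if isinstance(child, ast.ClassDef):
                entries.append(f"{pad}class {child.name} (line {child.lineno})")
                visit(child, indent + 1)
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                args = ", ".join(arg.arg for arg in child.args.args)
                entries.append(f"{pad}def {child.name}({args}) (line {child.lineno})")
                visit(child, indent + 1)

    visit(ast.parse(source), 0)
    return entries


def head(source: str, limit: int = 250) -> str:
    """Return only the first `limit` lines of a file."""
    return "\n".join(source.splitlines()[:limit])
```

The outline costs a handful of tokens per definition, and the line numbers give the model something concrete to ask for when it wants to expand a section.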

Augment’s personal, real-time indexing updates within seconds versus competitors’ 10-minute delays. This matters for fast-moving development where a 10-minute lag means the AI suggests changes to code that no longer exists.

GitHub Copilot uses implicit context (selected text, active file, code before and after cursor via Fill-In-the-Middle) and explicit context via #-mentions. The Fill-In-the-Middle approach delivered a 10% performance boost. Neighboring tabs provide another 5% improvement.
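Fill-In-the-Middle is easier to picture with a prompt in front of you. The sketch below splits a buffer at the cursor and wraps the two halves in sentinel markers. The marker strings `<PRE>`, `<SUF>`, and `<MID>` are placeholders I made up for this example, since real sentinel tokens differ per model, and the character-based truncation is likewise an assumption.

```python
def build_fim_prompt(
    text: str,
    cursor: int,
    max_chars: int = 2000,
    prefix_tok: str = "<PRE>",   # hypothetical sentinel
    suffix_tok: str = "<SUF>",   # hypothetical sentinel
    middle_tok: str = "<MID>",   # hypothetical sentinel
) -> str:
    """Build a prompt asking the model to generate the code at the cursor."""
    prefix = text[:cursor][-max_chars:]  # keep the code closest to the cursor
    suffix = text[cursor:][:max_chars]
    return f"{prefix_tok}{prefix}{suffix_tok}{suffix}{middle_tok}"
```

The model sees what comes after the cursor as well as what comes before it, so a completion can line up with the code that follows instead of ignoring it.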

Context Lineage, launched by Augment in July 2025, indexes Git commit history. The system summarizes diffs using Gemini 2.0 Flash, then retrieves relevant commits when the agent needs historical context. Use cases: replicating patterns from previous implementations, answering “why” questions about decisions, debugging regressions, preserving institutional knowledge.

Research on long context windows shows mixed results. A study of Gemini 1.5 Flash with 1M token context showed 25% accuracy improvement for code understanding. But time-to-first-token initially hit 30-40 seconds. Optimization through prefetching and intelligent caching reduced this to ~5 seconds.

IBM’s study of 669 developers revealed code understanding was the top use case at 71.9%, not code generation at 55.6%. This inverts assumptions about what AI assistants do. Developers use them more for explaining existing code than writing new code.

JetBrains surveyed 481 experienced developers across 71 countries. Top barriers to AI adoption: lack of need (22.5%), inaccurate output (17.7%), lack of trust (15.7%), lack of context understanding (14.4%), limited knowledge of capabilities (10.2%). That “lack of context understanding” barrier directly relates to documentation quality.

Security concerns dominate. Only 23% of developers rated AI-generated code as secure. This massive trust gap represents the biggest adoption barrier. Documentation must explicitly address security requirements, secure coding patterns, validation requirements, and audit trails.

Six anti-patterns appear repeatedly:

Over-abstraction produces vague statements like “write good code” or “follow best practices.” Zero value to AI systems. Include concrete examples with specific constraints.

Inconsistent updates create documentation drift where instructions no longer match code. The human tendency: update code, forget documentation. Automated validation in CI/CD catches this before trust erodes.

Insufficient tool documentation is perhaps the most impactful failure. Anthropic’s SWE-bench experience showed that vague tool descriptions lead to incorrect usage; they spent more time on tool documentation than on main prompts.

Ignoring error handling leaves developers stranded. Documentation must specify common errors with resolution steps, edge cases, fallback strategies, and escalation paths.

Generic examples don’t help with actual tasks. JetBrains’ study emphasized users want domain-specific assistance, not generic code snippets. Use actual project examples showing real patterns and problems.

Missing context requirements fail to specify what information the AI needs. Teams must explicitly state required context: “To refactor a function, provide the function definition plus all callsites.”
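For the documentation drift anti-pattern in particular, the automated CI check can start very small. The sketch below flags file paths mentioned in backticks that no longer exist in the repository. The backtick convention, the regular expression, and the function name are assumptions for illustration, and a heuristic like this will miss paths written in other styles.

```python
import re

# Matches backticked tokens that look like file paths, e.g. `src/app/main.py`.
PATH_PATTERN = re.compile(r"`([\w./-]+\.[A-Za-z]+)`")


def missing_references(doc_text: str, existing: set[str]) -> list[str]:
    """Return file paths mentioned in the docs that are not in `existing`."""
    referenced = set(PATH_PATTERN.findall(doc_text))
    return sorted(referenced - existing)
```

In a CI job you would build `existing` from the checked-out tree and fail the build when the returned list is non-empty, so a moved or deleted file forces a documentation update in the same change.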

Real Teams Doing This Right

Webflow’s deployment of Augment Code illustrates enterprise success. Webflow has a complex JavaScript stack and large teams. After implementation, the results included more PRs per engineer, more code submissions and bug fixes, more tests written, and faster onboarding. CTO Allan Leinwand: “We are seeing a greater return on our investment in Augment compared to other AIs we’ve used for code.”

Principal Engineer Merrick Christensen noted: “The fact that Augment doesn’t make you think about context means you can ask questions about unknown unknowns. It improves the onboarding experience a lot—you’re able to have these ego-less questions.” Junior developers feel comfortable asking AI questions they’d hesitate to ask senior teammates.

A community implementation of Git workflow automation trained Augment Agent to handle GitHub operations. It automatically creates issues with proper taxonomies, writes conventional commit messages, and generates detailed PR descriptions. The result: a 35% reduction in workflow overhead over two weeks, with dramatically improved repository documentation quality.

Testing comparisons provide concrete data. A developer building an MCP server tested Windsurf versus Cursor side-by-side. Windsurf completed the task in 2 credits with full documentation, demonstrating superior context awareness and automatically fixing linting errors.

But the IBM study reveals the other side. While 57.4% of developers felt more effective with AI assistants, 42.6% felt less effective. Quality issues requiring verification, speed and latency problems, and context understanding gaps created negative experiences.

What You Should Do

Start today with a .ai/guidelines.md file covering core coding standards. Document your top 10 most-used tools with comprehensive examples. Implement basic evaluation metrics tracking accuracy and latency. Set up feedback collection. Establish a documentation review process.
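"Basic evaluation metrics" can be as simple as logging each agent run and summarizing it. Here is a minimal sketch, assuming you record whether each task's output was judged correct and how long it took. The `Run` fields and the choice of median latency are my assumptions, not a prescribed framework.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Run:
    # Hypothetical record of one agent task.
    task: str
    correct: bool
    latency_s: float


def summarize(runs: list[Run]) -> dict:
    """Summarize accuracy and latency over a non-empty list of runs."""
    if not runs:
        raise ValueError("need at least one run to summarize")
    return {
        "accuracy": mean(1.0 if run.correct else 0.0 for run in runs),
        "median_latency_s": median(run.latency_s for run in runs),
        "failed_tasks": sorted(run.task for run in runs if not run.correct),
    }
```

Tracking the same few numbers before and after a documentation change is enough to tell whether the change helped, and the list of failed tasks tells you where to look next.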

Within three to six months, build a comprehensive tool catalog with detailed documentation. Implement automated documentation testing in CI/CD. Create an evaluation test suite covering edge cases. Set up an LLM-as-judge evaluation framework. Document common anti-patterns with solutions.

The evidence-based success factors are clear: specific tool documentation (Anthropic spent more time here than on prompts), domain-specific context (domain agents consistently outperform general models), continuous evaluation (critical for production quality), user feedback integration (addresses the effectiveness gap), and security guidelines (the major trust barrier).

IBM’s study and Webflow’s deployment data suggest teams executing these factors report 30-50% time savings on routine tasks, up to 47% better response accuracy, higher developer satisfaction, reduced errors, and faster onboarding.

The tools have matured. The documentation practices need to catch up. When AI assistants have access to well-structured, continuously updated, domain-specific documentation with comprehensive tool specifications and clear security guidelines, they deliver measurable value.

Without this foundation? They generate the inaccurate outputs, security concerns, and trust issues that create the 42.6% of developers who feel less effective.

Your agents are only as good as their documentation. Make it count.

I’m Bob Matsuoka, writing about agentic coding and AI-powered development at HyperDev. For more practical insights on AI development tools, read my analysis of multi-agent orchestration systems or my deep dive into AI coding productivity metrics.