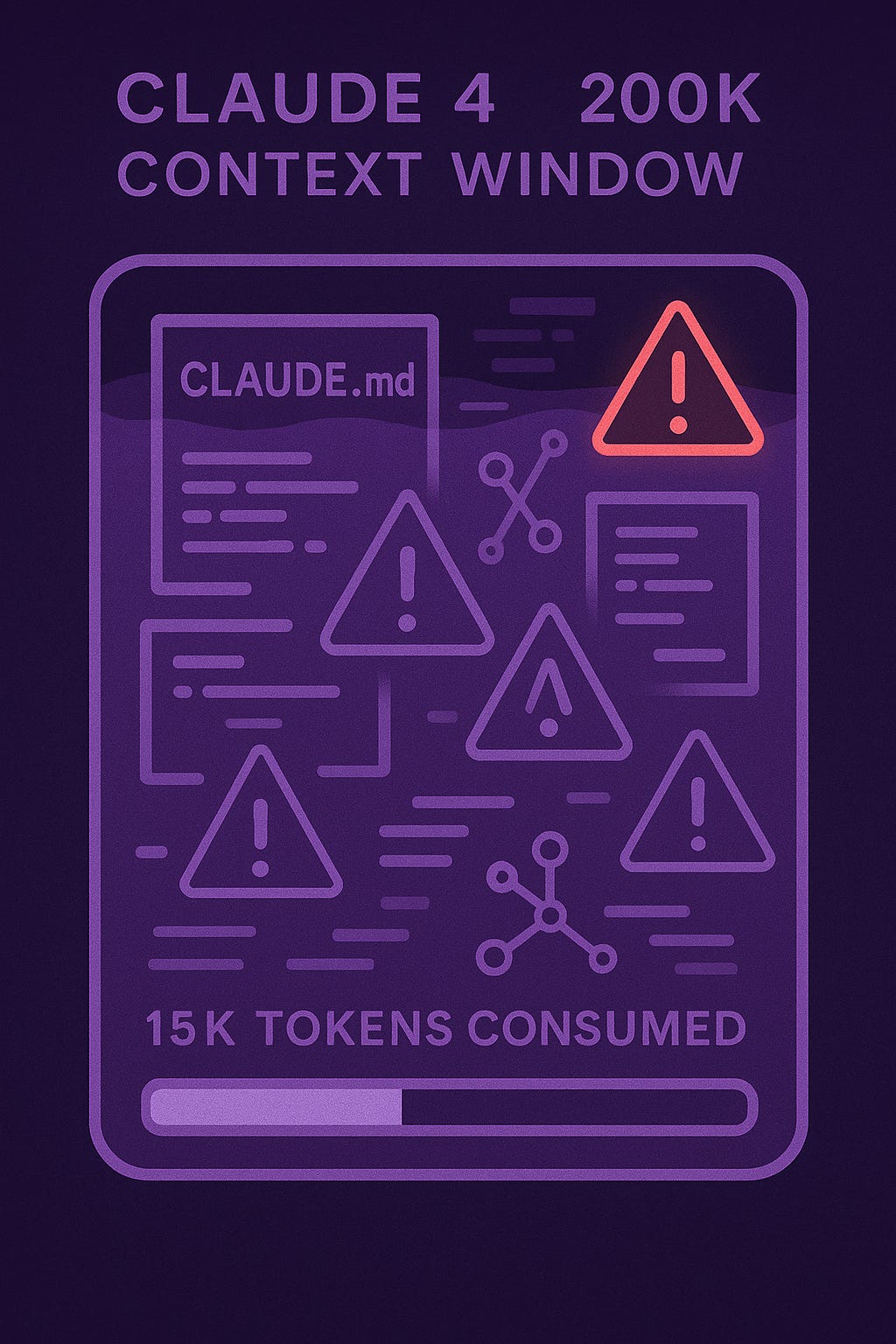

Been hitting Claude 4's 200K context limit faster than expected lately. Even with all that headroom, complex projects fill it up quick. What's clear now is we've crossed a threshold—single AI agents can't handle real development work anymore.

Not because they're not smart enough. Because they're solving the wrong problem.

The breakthrough isn't bigger context windows or better prompts. It's teams of specialized agents working in parallel streams, each keeping laser focus on its own piece. This approach doesn't just work better for complex projects. It's becoming the only viable path forward.

Context disappears faster than you think

Here's what happens when you fire up Claude 4 for serious work. Start with your CLAUDE.md instructions—maybe 2K tokens if you're being thorough. Add current file contents, say 8K for a decent-sized component. Pull in relevant dependencies, architecture notes, maybe some error logs. Suddenly you're at 15K tokens before describing the problem.

Then the real work begins. Each debugging session adds context you want to preserve. Architecture decisions pile up. Test requirements, security considerations, integration constraints—all legitimate context that helps the agent understand your project.

What felt infinite at the start becomes cramped real estate within hours.
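To make that arithmetic concrete, here's a rough sketch of a context budget tracker. The 200K limit and the 2K and 8K figures come from above; the 5K line item and the names (`ContextBudget`, `add`) are made up for illustration, and real token counts depend on the tokenizer.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBudget:
    """Tracks how much of a fixed context window is spent, by label."""
    limit: int = 200_000  # context window size mentioned in the post
    items: dict[str, int] = field(default_factory=dict)

    def add(self, label: str, tokens: int) -> None:
        # Accumulate tokens under a label so repeated additions stack up.
        self.items[label] = self.items.get(label, 0) + tokens

    @property
    def used(self) -> int:
        return sum(self.items.values())

    @property
    def remaining(self) -> int:
        return self.limit - self.used


budget = ContextBudget()
budget.add("CLAUDE.md instructions", 2_000)      # figure from the post
budget.add("current file contents", 8_000)       # figure from the post
budget.add("dependencies, notes, logs", 5_000)   # assumed, to reach ~15K

print(f"used before describing the problem: {budget.used}")
print(f"remaining: {budget.remaining}")
```

Calling `budget.add(...)` after each debugging session or pasted log gives a running view of how quickly the remaining space shrinks.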

The "lost in the middle" problem kicks in around 8K-32K tokens for practical tasks. Your carefully crafted project instructions get buried under conversation history. The agent starts missing critical details you established earlier.

I've hit this exact wall. Spent an hour explaining a database schema, only to have the agent suggest breaking foreign key constraints three conversations later.

I'd be willing to bet that most of the problems people are reporting with AI coding are some version of this—context degradation masquerading as model limitations.

Why detailed instructions backfire

Natural reaction? Write more comprehensive prompts. "Remember our coding standards, update tests, check security, maintain backwards compatibility, follow the architectural patterns we discussed in the third conversation..."

Teams create 10,000-word prompt docs packed with every rule an assistant might need. But those rules eat into the space needed for problem-solving. It's like trying to debug while someone recites the employee handbook.

You end up with agents that follow procedures mechanically but miss the bigger picture. They'll update your tests religiously while implementing a solution that breaks the entire service architecture.

The orchestration breakthrough

Multi-agent orchestration fixes this by distributing both context and responsibility.

Instead of one agent juggling everything, you get specialists:

Lead agent: Tracks project goals and coordinates work streams. Doesn't need to understand your specific database schema—just knows the data layer agent handles persistence concerns.

Implementation agents: Focus purely on code generation within their domain. Full context window available for technical problem-solving because they're not tracking cross-cutting concerns.

Quality agents: Handle testing, security, compliance. Specialized context for their expertise area without dilution.

Documentation agents: Maintain project knowledge and decision history. Context optimized for explaining choices and preserving institutional memory.

Each operates in its own focused context window. The lead agent doesn't hold implementation details in working memory—it delegates and tracks outcomes through structured handoffs.

This setup gives you a kind of "context multiplication." Instead of fighting for space in one 200K window, you effectively get multiple focused context spaces communicating through clean interfaces.
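Here's a minimal sketch of what that separation might look like. Nothing here calls a real model: `Specialist.run` is a stub, and the `Handoff` fields are just one plausible shape for a structured handoff, not a standard one.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Structured result a specialist returns to the lead (hypothetical shape)."""
    agent: str
    task: str
    status: str   # "done" or "blocked"
    summary: str  # short outcome only, no implementation detail


@dataclass
class Specialist:
    name: str
    context: list[str] = field(default_factory=list)  # private to this agent

    def run(self, task: str) -> Handoff:
        # Stub: a real agent would call a model here using self.context.
        self.context.append(f"task: {task}")
        self.context.append(f"working notes for: {task}")
        return Handoff(self.name, task, "done", f"{self.name} finished: {task}")


@dataclass
class Lead:
    specialists: dict[str, Specialist]
    log: list[Handoff] = field(default_factory=list)

    def delegate(self, role: str, task: str) -> Handoff:
        handoff = self.specialists[role].run(task)
        # The lead stores only the handoff, never the specialist's context.
        self.log.append(handoff)
        return handoff


lead = Lead({"data": Specialist("data-layer"), "quality": Specialist("quality")})
lead.delegate("data", "add orders table migration")
lead.delegate("quality", "write tests for orders migration")

for h in lead.log:
    print(h.status, "-", h.summary)
```

The point of the design is that the lead's log grows by one short record per task, while the detailed working notes stay inside each specialist.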

The numbers back this up

Recent research validates the approach with concrete metrics:

AgentCoder study showed 87.8% accuracy for multi-agent systems versus 61.0% for single agents on coding benchmarks. The improvement came from specialization—design agents focusing on architecture, implementation agents on code quality, testing agents on edge cases.

Know a developer who jumped from 14 to 37 story points per week using orchestrated workflows. Debug time dropped 60%. PR rejection rates fell from 23% to under 8%. When you're not constantly re-explaining context, productivity compounds.

Anthropic's multi-agent research system outperformed a single-agent setup by 90.2% on the company's internal research evaluation. Pattern holds across different problem types: when multiple concerns need simultaneous attention, orchestration wins.

Makes sense when you think about it. Human development teams figured this out decades ago—you don't have one person handle frontend, backend, database design, DevOps, and QA simultaneously.

Claude 4's native task handoffs

Claude Code's built-in subagents give Claude 4 a natural edge here: the lead can spin up subagents that each run in their own context window, no extra tooling required. Unlike frameworks that need external coordination infrastructure, it creates specialized instances through its own interface.

Creates lightweight development teams:

Lead agent maintains project vision and coordinates decisions

Subprocess agents handle specific areas (frontend, API, database, testing)

Communication happens through Claude's native interface

Context isolation prevents cognitive overload

Simpler than enterprise orchestration platforms but more powerful than single-agent approaches. For most development scenarios, hits the sweet spot between capability and complexity.

Been experimenting with this pattern recently. Start with a lead agent that understands full project scope, then delegate specific workstreams to subprocess agents. Each subprocess reports back with structured updates.

The lead never gets bogged down in implementation details. Subprocess agents never lose focus dealing with project-wide concerns. Everyone operates in their optimal context space.
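The fan-out and report-back shape of that pattern can be sketched in a few lines. This is not Claude's interface: `run_subagent` is a stand-in that returns a canned update, and the dictionary keys are my own choice for a structured update.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(workstream: str) -> dict:
    """Stand-in for a subprocess agent; a real one would call a model."""
    return {
        "workstream": workstream,
        "status": "done",
        "update": f"{workstream}: changes ready for review",
    }


# Workstream areas named in the post.
workstreams = ["frontend", "API", "database", "testing"]

# Fan out: each workstream runs independently of the others.
# Fan in: the lead collects one structured update per workstream.
with ThreadPoolExecutor(max_workers=len(workstreams)) as pool:
    updates = list(pool.map(run_subagent, workstreams))

for u in updates:
    print(u["status"], "-", u["update"])
```

`pool.map` returns results in the same order as the inputs, so the lead can match each update to the workstream it delegated.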

Results speak for themselves—cleaner architecture decisions, fewer integration bugs, faster feature delivery.

When you need the team approach

Not every task requires orchestration. The threshold is clearer than I initially expected:

Single agents handle fine:

Feature implementation within one service

Bug fixes with clear, limited scope

Code reviews and targeted refactoring

Documentation updates

Multi-agent becomes necessary when:

Context requirements reach roughly 50K tokens or more

Multiple independent subsystems need coordination

You find yourself constantly re-explaining project context

Parallel work streams could execute simultaneously without dependencies

The telling sign: When you spend more time managing what the agent remembers than describing what you want built, you've hit the single-agent ceiling.
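If you want those signals as a checklist, here's one way to write it down. The 50K threshold comes from the list above; the `TaskProfile` fields and the cutoffs for "multiple" are my own assumptions, so treat it as a rough heuristic rather than a rule.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    context_tokens: int          # estimated tokens of context the task needs
    independent_subsystems: int  # subsystems that need coordination
    reexplaining_context: bool   # do you keep re-explaining the project?
    parallel_streams: int        # work streams with no dependencies between them


def orchestration_signals(task: TaskProfile) -> list[str]:
    """Return the multi-agent signals from the post that this task triggers."""
    signals = []
    if task.context_tokens >= 50_000:
        signals.append("context at or above ~50K tokens")
    if task.independent_subsystems > 1:
        signals.append("multiple independent subsystems")
    if task.reexplaining_context:
        signals.append("constantly re-explaining project context")
    if task.parallel_streams > 1:
        signals.append("parallel work streams available")
    return signals


bug_fix = TaskProfile(6_000, 1, False, 1)
migration = TaskProfile(80_000, 3, True, 2)

print(orchestration_signals(bug_fix))
print(orchestration_signals(migration))
```

A scoped bug fix triggers none of the signals, while a cross-service migration triggers all four.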

Also worth noting—even with unlimited context, human cognitive load matters. Easier to think about your project in terms of coordinated specialist teams than one super-agent trying to hold everything in working memory.

The engineering mindset shift

This fundamentally changes how you approach development work. Instead of coding alongside AI, you're managing AI teams implementing your specifications.

New skillset emerges:

Architecture thinking becomes primary—designing how agents will collaborate effectively

Context management over syntax knowledge—understanding what each agent needs to know and when

Workflow orchestration rather than individual feature implementation

Quality coordination across specialized agents with different focuses

You operate at a higher abstraction level. Code still needs to work, but you're focused on system design and team coordination rather than implementation details.

Honestly? More interesting work. Less time debugging syntax, more time solving actual business problems.

The tooling reality

Current debugging tools lag behind orchestration capabilities. When something breaks across multiple agent conversations, tracing the failure path can be painful.

Key pain points:

No unified view across agent interactions

Context switches between different conversation threads

Difficulty tracking decision handoffs between agents

Limited rollback capabilities for distributed workflows
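One cheap workaround I'd sketch for the first and third pain points: tag every agent event with a shared trace ID and write it to a single log. The event fields and the `record` and `timeline` helpers below are hypothetical, and the in-memory list stands in for a file or log store.

```python
import json
import uuid
from datetime import datetime, timezone

events: list[dict] = []  # stand-in for a file or log store


def record(trace_id: str, agent: str, event: str, detail: str) -> None:
    """Append one structured event tagged with the workflow's trace ID."""
    events.append({
        "trace_id": trace_id,
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "event": event,
        "detail": detail,
    })


def timeline(trace_id: str) -> list[dict]:
    """All events for one workflow, across agents, in the order recorded."""
    return [e for e in events if e["trace_id"] == trace_id]


trace = uuid.uuid4().hex
record(trace, "lead", "delegate", "schema change -> data-layer agent")
record(trace, "data-layer", "handoff", "migration written")
record(trace, "quality", "handoff", "tests failing on foreign key")

for e in timeline(trace):
    print(json.dumps(e))
```

When something breaks, filtering by one trace ID shows the delegation and every handoff in a single view instead of scattered across conversation threads.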

Frameworks like LangGraph, paired with tracing tools such as LangSmith, provide workflow visualization and structured logging. But for individual developers and small teams, the orchestration benefits often outweigh the debugging complexity.

This will improve rapidly. We developed sophisticated debuggers for multithreaded applications, and we'll get better visibility into multi-agent workflows too.

Economic considerations

Multi-agent approaches consume significantly more tokens. Anthropic reports that its multi-agent systems use about 15x more tokens than chat interactions, versus about 4x for a single agent. Without Claude Max or a similar high-usage plan, this creates real cost pressure.

But productivity multipliers often justify the expense. When orchestration enables completing in hours what previously took days, token costs become secondary to development velocity gains.

Plus, as model efficiency improves and competition drives costs down, this economic barrier will fade. Question becomes whether your team can absorb current costs to gain early experience with orchestrated development.

Early adoption advantages compound over time.
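For a feel of the trade-off, here's a back-of-the-envelope break-even calculation. Only the 15x multiplier comes from the figure cited above; the baseline token count, the price per million tokens, and the hourly rate are placeholder assumptions, so plug in your own numbers.

```python
TOKEN_MULTIPLIER = 15        # multi-agent token increase cited in the post
BASELINE_TOKENS = 2_000_000  # assumed tokens for the task without orchestration
PRICE_PER_MTOK = 10.0        # assumed blended $ per million tokens (hypothetical)
HOURLY_RATE = 100.0          # assumed developer cost per hour (hypothetical)


def token_cost(tokens: int) -> float:
    """Dollar cost of a token count at the assumed blended price."""
    return tokens / 1_000_000 * PRICE_PER_MTOK


baseline_cost = token_cost(BASELINE_TOKENS)
multi_agent_cost = token_cost(BASELINE_TOKENS * TOKEN_MULTIPLIER)
extra_cost = multi_agent_cost - baseline_cost

# Developer hours the orchestration has to save to pay for the extra tokens.
break_even_hours = extra_cost / HOURLY_RATE

print(f"baseline: ${baseline_cost:.2f}, multi-agent: ${multi_agent_cost:.2f}")
print(f"break-even: {break_even_hours:.1f} hours saved")
```

Under these made-up numbers the extra tokens cost $280, so orchestration pays for itself once it saves about three hours of developer time on the task.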

What this means for development teams

We're witnessing a fundamental shift in software development methodology. The transition from single AI assistants to orchestrated teams parallels earlier moves from individual productivity tools to collaborative development environments.

Teams mastering orchestration early gain significant competitive advantages:

Faster feature delivery through true parallel development

Higher code quality through specialized review processes

Better architectural consistency through coordinated planning

Reduced cognitive load on human developers

Even solo developers benefit. Recent projects using subprocess delegation finish faster with fewer iterations. Context stays cleaner, decisions remain consistent, and less time goes to managing what the AI remembers.

The question isn't whether this transition happens—it's whether you lead or lag in adopting orchestrated workflows.

Your choice: keep fighting context limits with single agents, or start building with teams.

Single-agent coding showed us what's possible. Orchestration shows us what's next.

Bottom line: For serious dev work, the shift from individual AI assistants to coordinated agent teams isn't optional. It's the natural evolution of how we build software in the AI era.