Why I Dropped the CMS and the Database for AI Power Rankings

Sometimes the most expensive lessons are the ones you thought you already knew

I rebuilt AI Power Rankings with a full CMS and database. Three days later, I tore it all out.

Let me tell you about a mistake that burned several million tokens and three full days of work. It's the kind of mistake I warn teams about all the time—but apparently needed to experience viscerally myself.

The Seduction of Structure

AI Power Rankings started simple. A Next.js app, some JSON files, basic ranking algorithms. It worked. Not elegantly, but it worked. Users could see tool rankings, read analysis, browse historical data. Classic MVP stuff.

But then I got ambitious.

"This needs a proper database," I told myself. "And while we're at it, let's add a CMS for easier content management." Supabase for the backend, Payload CMS for the admin interface. Both excellent tools, and Claude Code made the migration surprisingly straightforward.

Implementation went smoothly—too smoothly. Within a few days, I had a fully structured data model with proper collections, relationships, and a polished admin interface. It felt professional. It felt scalable.

It was also completely wrong for where the project actually was.

Tooling should follow data, not lead it.

When Good Tools Make Bad Decisions

The problems started surfacing when I tried to implement the news collection workflow I'd been planning. Suddenly, every schema change required database migrations. Adding new fields meant updating multiple API endpoints. Testing different data structures involved complex rollback procedures.

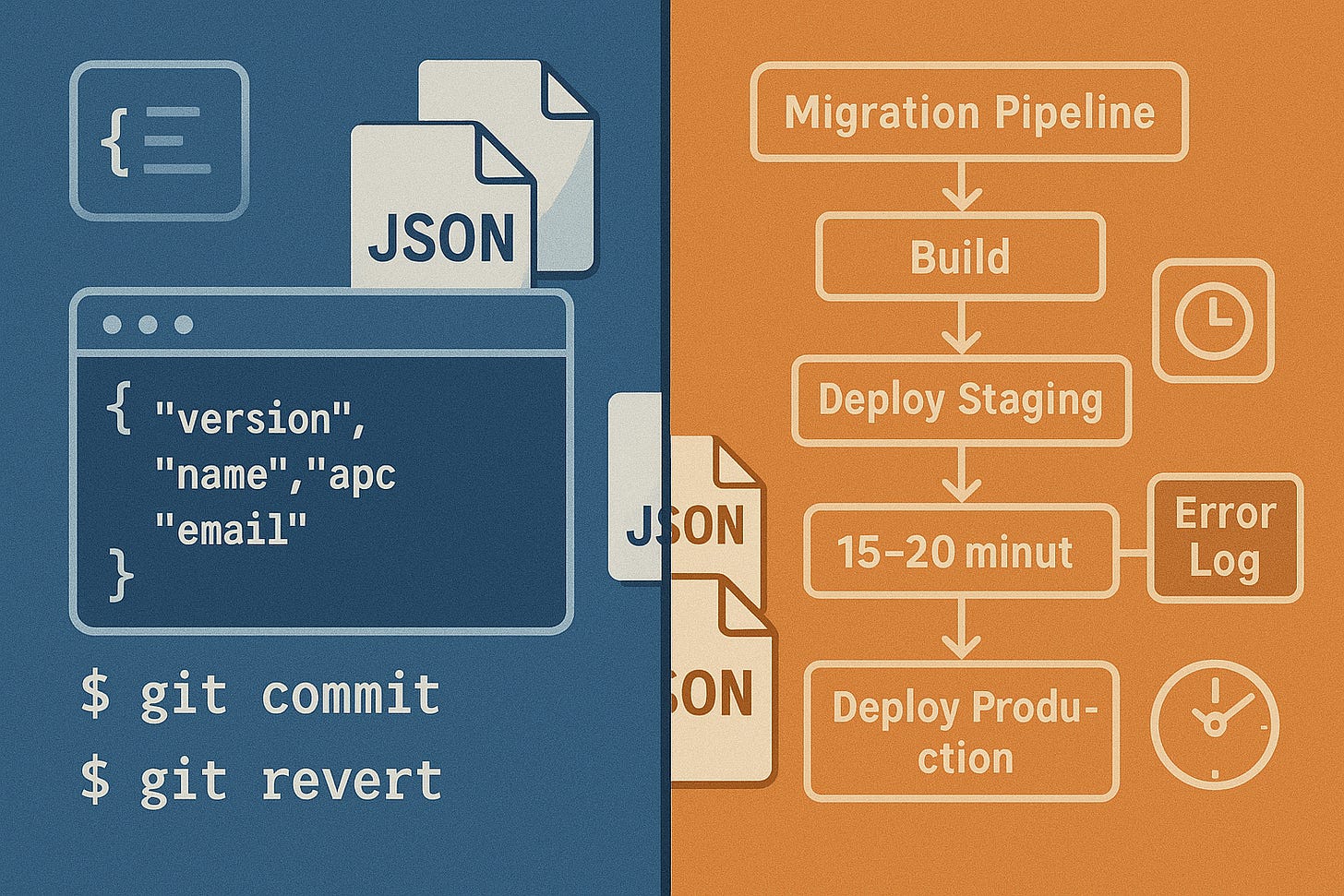

What had been quick JSON edits became multi-step processes:

1. Design the schema change
2. Create the migration
3. Test in development
4. Deploy to Supabase
5. Update the API endpoints
6. Modify the admin interface
7. Test the complete flow

And here's the kicker: versioning became a nightmare. With JSON files, you can experiment freely, roll back instantly, and keep multiple versions running side by side. With a CMS and database? Every change needs to be carefully orchestrated.
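To make "side by side" concrete, here is a minimal sketch of one way versioned JSON files could be addressed. The `data/rankings/<version>.json` layout, the `DATA_DIR` constant, and the `rankingsPath` helper are all hypothetical names chosen for illustration, not the project's actual structure.

```typescript
import { join } from "node:path";

// Hypothetical layout: data/rankings/<version>.json (e.g. data/rankings/v2.json).
const DATA_DIR = join("data", "rankings");

// Resolve the file for a given version label; "current" is an assumed default.
function rankingsPath(version: string = "current"): string {
  // Accept only simple labels so a label can never escape the data directory.
  if (!/^[A-Za-z0-9._-]+$/.test(version) || version.includes("..")) {
    throw new Error(`invalid version label: ${version}`);
  }
  return join(DATA_DIR, `${version}.json`);
}
```

Under this assumption, trying a new structure means copying a file to a new label and pointing the reader at it, and rolling back means switching the label back (or reverting the commit).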

Even Claude, which handled the technical work competently, was painfully slow at schema adjustments—taking 15-20 minutes to work through migration logic that should have been instant.

(For what it's worth, Supabase does offer a versioning system that supposedly syncs with code versions, but I never made it far enough to try it. The overhead had already become prohibitive.)

For a single-person project where I'm still figuring out the basic workflows? That's insane overhead.

When infrastructure slows you down, it's the wrong infrastructure.

The Moment of Clarity

The breaking point came when I realized my news ingestion pipeline wasn't working the way I'd designed it. I needed to restructure how articles related to tools, add new metadata fields, and completely rethink the scoring algorithms.

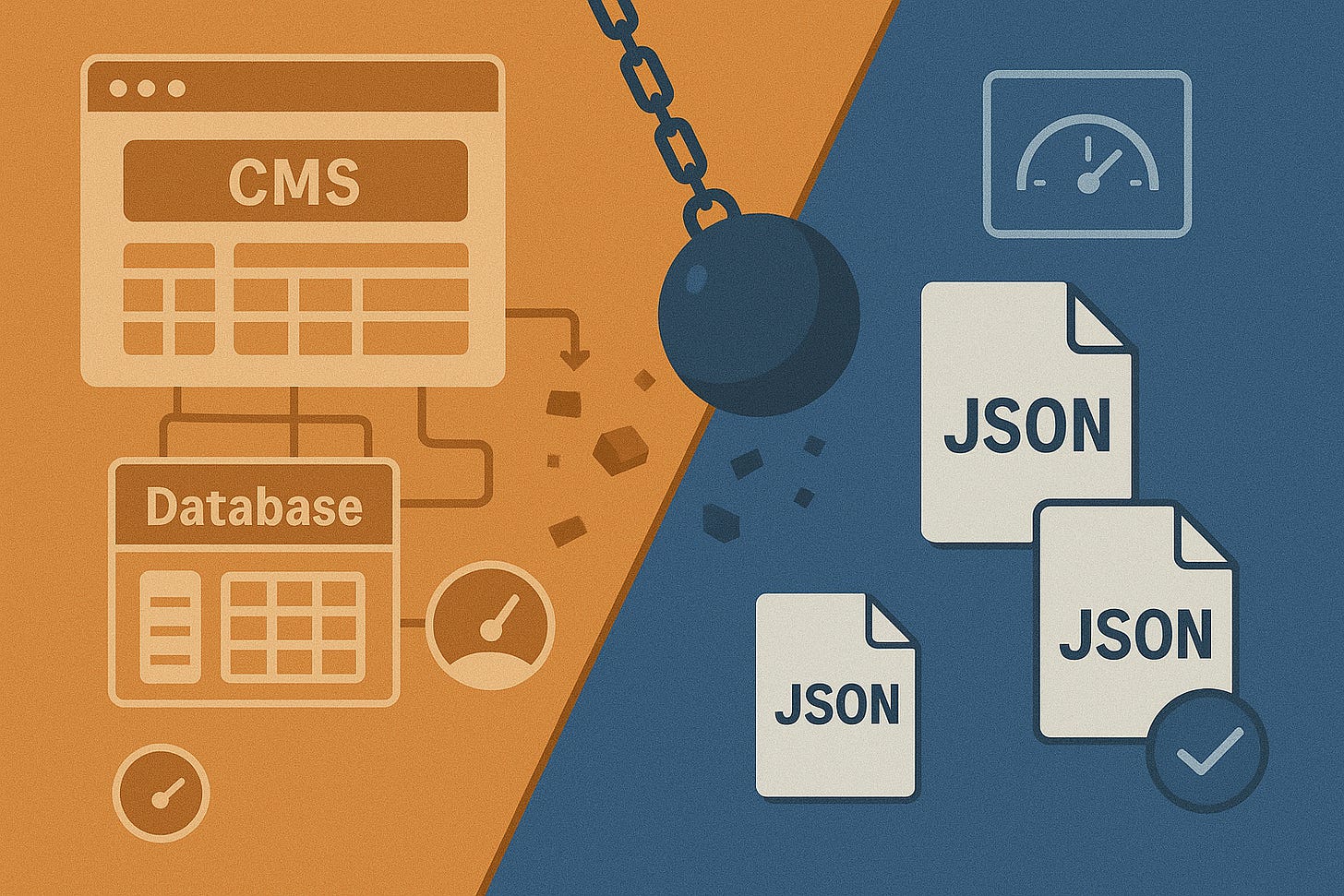

In JSON land, that's an afternoon of experimentation. In structured-database-with-CMS land, that's a week-long project with rollback planning.
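As a rough illustration of why this is cheap with JSON, the sketch below reads old and new record shapes through one normalizing function instead of a migration. The `Article` fields (`toolSlugs`, `tags`) and the legacy `tool` field are assumptions made up for this example; the post does not describe the real article schema.

```typescript
// Hypothetical article shape; the real fields are not described in this post.
interface Article {
  title: string;
  toolSlugs: string[]; // assumed new shape: one article can relate to several tools
  tags: string[];      // assumed new metadata field, empty for older records
}

// Accept both the assumed legacy shape ({ tool: "x" }) and the newer one.
function normalizeArticle(raw: Record<string, unknown>): Article {
  const rawTitle = raw["title"];
  const title = typeof rawTitle === "string" ? rawTitle : "";

  let toolSlugs: string[] = [];
  const rawSlugs = raw["toolSlugs"];
  const rawTool = raw["tool"];
  if (Array.isArray(rawSlugs)) {
    // Newer records: keep only string entries.
    toolSlugs = rawSlugs.filter((s): s is string => typeof s === "string");
  } else if (typeof rawTool === "string") {
    // Legacy records: wrap the single tool in a list.
    toolSlugs = [rawTool];
  }

  const rawTags = raw["tags"];
  const tags = Array.isArray(rawTags)
    ? rawTags.filter((t): t is string => typeof t === "string")
    : [];

  return { title, toolSlugs, tags };
}
```

With this approach, existing files keep working untouched while new records adopt the new fields, which is the kind of experimentation a database schema makes you plan for.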

I caught myself procrastinating on feature development because the infrastructure overhead was too high. When your tooling makes you avoid building features, something is fundamentally wrong.

The Great Unmigration

So I ripped it all out.

Removed Payload CMS entirely. Ditched Supabase. Migrated everything back to JSON files stored in the repository. The APIs still work; they just read from JSON instead of making database queries. Performance even improved, since reads no longer need a network round-trip to the database.
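For readers who want a picture of what "read from JSON" can look like, here is a minimal sketch rather than the site's actual code. The `ToolRanking` shape and the `parseRankings`, `rankByScore`, and `loadRankings` helpers are hypothetical; only `readFileSync` and `JSON.parse` are real Node.js and JavaScript APIs.

```typescript
import { readFileSync } from "node:fs";

// Hypothetical shape of one ranking entry; the real fields are not given in this post.
interface ToolRanking {
  slug: string;
  name: string;
  score: number;
}

// Parse raw JSON text and check that every entry has the expected fields.
function parseRankings(raw: string): ToolRanking[] {
  const data: unknown = JSON.parse(raw);
  if (!Array.isArray(data)) {
    throw new Error("rankings file must contain a JSON array");
  }
  return data.map((entry: unknown, i: number) => {
    if (typeof entry !== "object" || entry === null) {
      throw new Error(`invalid ranking entry at index ${i}`);
    }
    const e = entry as Record<string, unknown>;
    const slug = e["slug"];
    const name = e["name"];
    const score = e["score"];
    if (typeof slug !== "string" || typeof name !== "string" || typeof score !== "number") {
      throw new Error(`invalid ranking entry at index ${i}`);
    }
    return { slug, name, score };
  });
}

// Return a new array sorted by score, highest first, without mutating the input.
function rankByScore(rankings: ToolRanking[]): ToolRanking[] {
  return [...rankings].sort((a, b) => b.score - a.score);
}

// Read a rankings file from the repository and return it ranked.
function loadRankings(filePath: string): ToolRanking[] {
  return rankByScore(parseRankings(readFileSync(filePath, "utf8")));
}
```

An API handler could then return the result of `loadRankings` directly. The light validation in `parseRankings` stands in for the guarantees a database schema would otherwise provide, and it fails loudly when a hand edit goes wrong.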

The irony isn't lost on me. I regularly tell clients to stay light on tooling until their data flows are established. "Premature abstraction is the root of all evil," I've said in probably a dozen presentations.

But I'd been seduced by how easy AI made the technical implementation. Claude Code could scaffold the CMS, write the migrations, update all the endpoints. The capability to do something complex quickly made me forget to ask whether I should do it.

AI lets you move fast—but it won't tell you when to stop.

What Actually Matters

Here's what I learned (or re-learned) viscerally:

Tool sophistication should match process maturity. I was trying to encode workflows I hadn't actually proven yet. JSON files forced me to keep things flexible while I figured out what I actually needed.

AI acceleration amplifies both good and bad decisions. Being able to implement complex architectures quickly doesn't make them the right choice. If anything, it makes it easier to over-engineer.

Infrastructure friction is a feature, not a bug, in early-stage projects. Some amount of manual work keeps you close to your data and forces you to understand it deeply before automating it away.

The numbers tell the story: three days of work, several million tokens of AI assistance, and I ended up exactly where I started, but with a much clearer understanding of why simple was right.

The Path Forward

I'll go back to a database and CMS eventually. Once I have the news collection pipeline working smoothly, once I understand the data relationships properly, once I've proven the workflows manually. Then structure and tooling will accelerate the right things instead of encoding the wrong assumptions.

For now, JSON files and direct API responses work perfectly. They're fast, they're transparent, and most importantly, they don't get in the way of figuring out what I'm actually building.

The best practice hasn't changed: Establish your data flow and your data structures before you encode them into tooling. I just needed to experience the pain of violating that principle to really understand why it matters.

Sometimes the most expensive lessons are the ones you thought you already knew. Next time I reach for structure, I'll make sure the workflows truly warrant it.