Three weeks ago, my AI code review tool flagged two issues that needed fixing: an inconsistency in our versioning system that could publish wrong versions, and shebang management for deployments that needed to be more robust. These were perfect test cases for Augment Code's Remote Agents, and an opportunity to see firsthand what autonomous AI development actually looks like in practice.

That experience led to something unexpected: the chance to speak directly with Lior Neumann, Augment Code's team lead on Remote Agents. Neumann brings deep machine learning research experience from Meta and academic work to his current role architecting autonomous AI development systems. Emma Webb from their team arranged the conversation after I'd written about my experience. It offered insight into both the current capabilities and the strategic direction that shapes how we should think about AI-powered development.

What emerged from both using Remote Agents and talking with Neumann is evidence of what could be called a "great divergence" in AI coding tools—a fundamental split between autocomplete-style assistance and prompt-first development that changes how we structure development work itself.

The Breakthrough Experience: True Autonomous Development

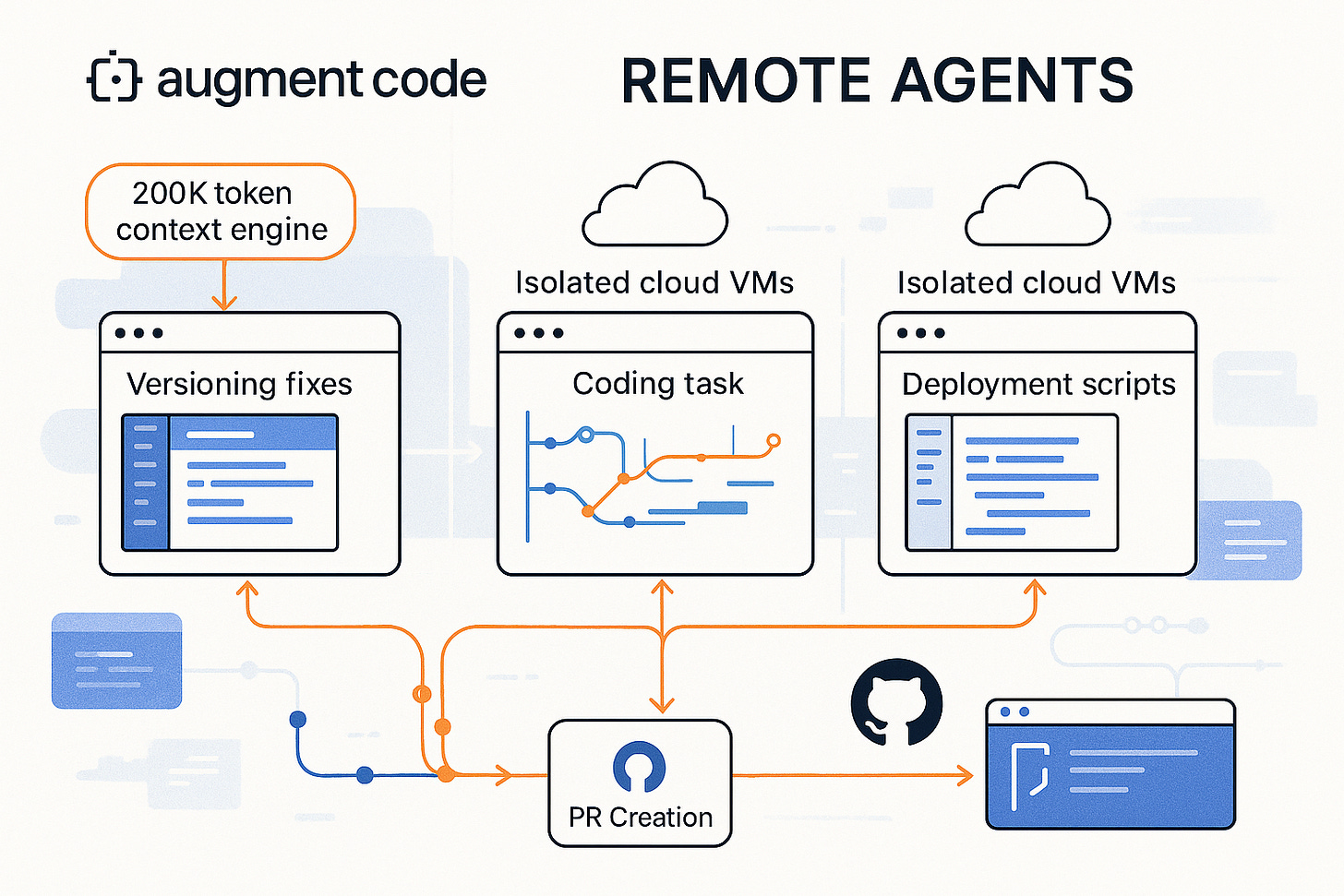

Remote Agents do something no other AI coding tool offers: they spin up completely isolated VMs in the cloud to handle your work. While GitHub Copilot stays bound to your local environment and Cursor requires your IDE to remain active, Remote Agents keep working even when you close your laptop.

I assigned one agent the versioning fix and another the shebang improvements. Then I left for a meeting. When I returned two hours later, both agents had made progress, created branches, and were methodically working through the implementations.

This was the first time I'd used an AI coding tool that truly operates independently. The upside isn't just convenience—it's a shift in how we can architect work itself. Running up to 10 agents simultaneously in isolated environments means parallel development that was previously impossible without a full team.

The difference showed in Augment Code's proprietary Context Engine, which processes 200,000 tokens, twice the capacity of competitors, and retrieves relevant code snippets in milliseconds. The agents understood existing architecture patterns and integrated changes properly without any additional prompting.

But the experience also revealed friction points. Managing multiple remote agents requires discipline that most development teams haven't built yet. Without careful task scoping and branch management, you'll create merge hell.
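One way to make that task scoping concrete is to check, before launching anything, whether two agent tasks share a branch or touch overlapping paths. The sketch below is only an illustration: `AgentTask`, `find_conflicts`, and the branch and file names are hypothetical and are not part of Augment Code's product.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class AgentTask:
    """One unit of work for a single remote agent (hypothetical structure)."""
    name: str
    branch: str
    paths: frozenset[str]  # files or directories the task is allowed to touch


def _overlaps(a: str, b: str) -> bool:
    """True if the paths are equal or one is a directory containing the other."""
    a, b = a.rstrip("/"), b.rstrip("/")
    return a == b or a.startswith(b + "/") or b.startswith(a + "/")


def find_conflicts(tasks: list[AgentTask]) -> list[tuple[str, str]]:
    """Return pairs of task names that share a branch or touch overlapping paths."""
    conflicts = []
    for first, second in combinations(tasks, 2):
        same_branch = first.branch == second.branch
        shared_path = any(_overlaps(p, q) for p in first.paths for q in second.paths)
        if same_branch or shared_path:
            conflicts.append((first.name, second.name))
    return conflicts


# Made-up tasks: the third one edits a file inside the second one's directory.
tasks = [
    AgentTask("versioning-fix", "agent/versioning", frozenset({"scripts/version.py"})),
    AgentTask("shebang-hardening", "agent/shebang", frozenset({"deploy/"})),
    AgentTask("deploy-docs", "agent/docs", frozenset({"deploy/README.md"})),
]
print(find_conflicts(tasks))
```

Any pair the check reports is a candidate for running in sequence rather than in parallel, or for re-scoping before the agents start.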

Most importantly, I discovered that this pushes the boundaries of what VS Code plugins can handle gracefully. The interface works, but managing multiple remote VMs through a sidebar feels constrained. The confirmation prompts, designed for collaborative pair programming, don't fit when you're trying to delegate and walk away. It wasn't obvious how to shut down agents after their tasks completed—there's a remote agent list feature tucked away in the interface, but from within the agent itself, there should be a simple "Task complete → Code merged → Close Agent" flow.

Neumann's Vision: Beyond Current Limitations

When I shared these observations with Neumann, his response revealed just how aligned my experience was with Augment Code's strategic thinking. "For a lot of the tasks that we're doing and a lot of the agent interactions that we're having, an IDE might not be the best surface," he explained. "And this is why we are now focusing on our future projects which is escaping the IDE and creating a new surface to do all of these things."

This represents a fundamentally different bet than competitors like Cursor and Windsurf, which fork VS Code to create dedicated AI-native environments. Neumann sees the current plugin architecture as a stepping stone: "That's going to provide us a lot more flexibility, a lot more ways to make the experience a lot nicer for you as you interact with multiple agents at the same time."

My experience with lifecycle management gaps directly maps to what Neumann describes as necessary UX evolution. "The entire IDE extension ergonomics is going to change," he acknowledged. "But also, obviously, with the new surface that we're creating, with the new client, we're going to create a lot more flexibility."

The Technical Foundation and Current Constraints

Neumann explained their technical advantage: "Our ability to essentially understand very large code bases and fit the right relevant pieces into the context window without having to sort of peek and choose files manually." This capability enabled the seamless GitHub integration I experienced—agents created PRs and even fixed CI/CD issues by working directly on GitHub Actions workflows.

Neumann’s technical background in machine learning research shows in his approach to these challenges. His experience spans both academic research and industry implementation at Meta, giving him perspective on what's theoretically possible versus what works in production systems. Neumann was candid about current limitations. "There are some technical reasons and also some UX reasons for introducing these kind of limits. We are actively working to alleviate a lot of that."

The company has fully transitioned to Claude Sonnet 4, moving away from the earlier Sonnet 3.5 models. Neumann indicated they're "playing nice with everyone" among model providers to ensure access to best-performing capabilities as they emerge.

The Multi-Agent Future: Orchestration at Scale

Neumann’s vision extends far beyond my two-agent test case. "We are already looking at some interesting ideas around orchestration of multiple agents and then one single agent that launches multiple sub agents," he explained.

This approach addresses context limitations through intelligent handoffs: "Because you are transferring context between one agent and another, you can do it in a much more curated and dedicated way. You're essentially summarizing the context and then passing it to another agent."
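To picture the handoff Neumann describes, here is a minimal sketch of a parent agent condensing its working notes into a bounded summary before passing them on. Everything in it is an assumption for illustration: the `Handoff` structure, the `build_handoff` helper, and the character budget are hypothetical, and trimming to the most recent notes stands in for whatever summarization a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Curated context passed from a parent agent to a sub-agent (hypothetical)."""
    goal: str
    summary: str
    relevant_files: list[str] = field(default_factory=list)


def build_handoff(goal: str, notes: list[str], files: list[str],
                  max_chars: int = 200) -> Handoff:
    """Condense the parent's notes into a bounded summary.

    Keeps the most recent notes that fit within max_chars. This placeholder
    only trims; it does not actually summarize.
    """
    kept: list[str] = []
    used = 0
    for note in reversed(notes):      # walk from newest to oldest
        if used + len(note) > max_chars:
            break
        kept.append(note)
        used += len(note)
    kept.reverse()                    # restore chronological order
    return Handoff(goal=goal, summary=" ".join(kept),
                   relevant_files=sorted(set(files)))


# Made-up notes and file names for illustration.
notes = [
    "Version is read from two places.",
    "The build file is the source of truth.",
    "CI publishes from the git tag.",
]
handoff = build_handoff("Unify the version source", notes,
                        ["release.sh", "VERSION", "release.sh"], max_chars=80)
print(handoff.summary)
print(handoff.relevant_files)
```

The sub-agent receives only the goal, the trimmed summary, and a deduplicated file list, rather than the parent's full history.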

The implications for team productivity are significant. Neumann envisions scenarios where senior engineers work with 10 remote agents simultaneously. In a 10-person engineering team, that represents 100 agents requiring coordination. Augment Code is building infrastructure for this reality: "We are also creating more around task management, you can call it. You have a task list, list of different things you want to accomplish. Each one might be dedicated to a separate agent."
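The task-list idea can be sketched as a small dispatcher that runs one agent per task while capping how many run at once. This is a hedged illustration, not Augment Code's implementation: `run_agent` is a hypothetical stand-in for launching a remote agent, and the cap of 10 simply mirrors the per-engineer figure mentioned above.

```python
import asyncio

MAX_AGENTS_PER_ENGINEER = 10  # figure from the article; enforced here with a semaphore


async def run_agent(task: str) -> str:
    """Hypothetical stand-in for launching a remote agent on one task."""
    await asyncio.sleep(0)  # yield control, simulating asynchronous work
    return f"done: {task}"


async def run_task_list(tasks: list[str],
                        limit: int = MAX_AGENTS_PER_ENGINEER) -> list[str]:
    """Run one agent per task, never more than `limit` at a time."""
    slots = asyncio.Semaphore(limit)

    async def guarded(task: str) -> str:
        async with slots:
            return await run_agent(task)

    # gather preserves input order, so results line up with the task list
    return await asyncio.gather(*(guarded(t) for t in tasks))


if __name__ == "__main__":
    backlog = [f"task-{i}" for i in range(1, 13)]  # 12 tasks, at most 10 in flight
    print(asyncio.run(run_task_list(backlog)))
    print("agents across a 10-person team:", 10 * MAX_AGENTS_PER_ENGINEER)
```

With twelve tasks in the backlog, the last two wait until a slot frees up, which is the same queueing an engineer would otherwise manage by hand.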

This matches my observation that success requires treating agents like "an army of eager interns"—giving them specific, validated tasks that can be reviewed in one sitting, rather than open-ended architectural decisions.

Market Strategy: The Great Divergence

Neumann’s perspective on market adoption challenged assumptions about rapid AI tool proliferation. "There is just this long tail of users that are in sort of different states of their adoption of AI," he explained. "We're seeing that with enterprises, for example, larger enterprises, it takes much longer to adopt this kind of new behaviors, new tools, and that's a lot of the market."

This observation helps explain Augment Code's enterprise focus versus competitors pursuing viral developer-led growth. While Cursor achieved explosive growth from $4M to $300M ARR in a year, Neumann sees opportunity in professional developers working with larger codebases: "We have definitely decided to focus on something different. We are focusing on professional developers, larger code bases, and that's where our strength is."

The economic model underlying this split matters. Current pricing models—$20, $30, even $60 monthly plans—essentially serve as "hobby plans" for developers dabbling with AI assistance. The real economic model emerging suggests prompt-first development will command $200-400 monthly subscriptions, where AI handles 70-80% of initial code generation and engineers focus on architecture, review, and refinement.

Neumann acknowledged this reality when I asked about the actual LLM costs for heavy usage: "These numbers are going down super fast. So every time you think about a number, it's probably not gonna stay the same three months from now." The competition among model providers is driving prices down while capabilities improve.

Implementation Challenges: DevOps and Integration

Neumann acknowledged several areas requiring continued development that align with my hands-on experience. Front-end development integration remains problematic: "Being able to interact with a client from the browser is something that we have seen as a pain point across the board with all of these agents."

This matches my observation about needing to manually track browser logs and server processes. These are the areas where the autonomous promise breaks down and I end up relaying output by hand between the AI and the running programs.

Database configuration and deployment friction also need attention. Augment Code is building first-party integrations with services like Supabase and GitHub, while considering whether to develop additional tooling internally or rely on third-party solutions through protocols like MCP (Model Context Protocol).

However, Neumann expressed caution about MCP adoption: "We've seen a lot of problems with MCP, especially around authentications."

Competitive Pressures: The Platform Wars

When discussing competition from Microsoft's GitHub Copilot, Google's Gemini Code Assist, and Anthropic's Claude Code, Neumann emphasized rapid innovation as the primary defense: "There is no real moat right now. The only moat you have is your pace of innovation. How quickly you can innovate and create the next big improvement in terms of user experiences or models."

The company currently employs around 60 engineers, all using Augment Code for their own development—a validation of the eat-your-own-dogfood principle that Neumann emphasizes as crucial for product improvement.

Neumann sees competitive advantage in Augment Code's team composition: "People with a ton of experience coming from other ventures that have grown significantly or from the state of the art research labs." But he's realistic about market pressures: "The concerning part is obviously the fierce competition that's happening right now in the space."

The Discipline Problem

Both my experience and Neumann’s insights highlight a crucial challenge: success with Remote Agents requires new workflow disciplines that most development teams haven't built yet. Neumann emphasizes treating agents like directed resources rather than open-ended problem solvers.

This mirrors broader patterns in AI tool adoption—technical capability often outpaces organizational readiness to use new tools effectively. Teams need to develop practices around task decomposition, branch management, and review workflows that accommodate parallel autonomous development.

The learning curve matters. Neumann's shorthand is "Different tools for different jobs," and by that logic the orchestration capabilities they're building deserve an interface designed for them rather than one constrained by traditional IDE plugin architecture.

Looking Forward: Post-IDE Development

Neumann’s timeline suggests this transformation won't happen overnight: "I don't expect us to be in a situation where we can work without an IDE in the next two years or so." But the direction is clear.

My experience with Remote Agents provided the first glimpse of what post-IDE development might look like—where the primary interface becomes task delegation and review rather than direct code manipulation. The automatic environment setup based on existing configuration worked seamlessly. No manual Docker deployments or complex setup procedures. You connect GitHub, index your workspace, and start assigning tasks through natural language.

The productivity multiplier is real for medium-complexity work—tasks that typically require half a day to a full day of focused development time. Below that threshold, the overhead isn't worth it. Above it, you need human architectural judgment.

Bottom Line: Betting on the Right Future

Augment Code's approach represents a specific thesis about AI coding's future: that professional developers working with large codebases will drive transformation, and that enterprise adoption will ultimately prove more sustainable than viral consumer growth.

Neumann’s insights suggest this isn't just about building better tools—it's about reimagining how development work gets structured when AI can handle significant portions of implementation autonomously. Remote Agents offer a preview of this future, though success depends on whether teams can build the discipline to coordinate AI work effectively.

My hands-on experience confirms that Remote Agents deliver on their core promise of autonomous development. But Neumann’s candid assessment of current limitations and future direction reveals the real unlock: escaping the constraints of traditional development environments to build interfaces designed for AI orchestration from the ground up.

The technical capability is demonstrably here. The organizational adaptation—and the interface evolution to support it—remains the harder challenge to solve.

Thanks to Lior Neumann for taking the time to share his insights on the future of AI-powered development, and to Emma Webb for arranging this conversation. Look for a future article on the Great Divergence and its implications for the AI coding tools market.