When Your Agentic Coder Does Exactly What You Asked—But Not What You Meant

Why specificity and system-awareness matter more than ever

1. The Story: When “Move This Field” Goes Nowhere

Let me walk you through a real example.

I was updating a basic contact form. The kind of thing we’ve all done a dozen times—move “Location” up top, shuffle a couple of fields, tighten the layout.

I passed the task to Augment, one of our Agentic Coders. It acknowledged the request and returned code updates.

Result? No visible change.

I revised the instruction. Clarified what I meant. Tried again. Still nothing.

So I looked under the hood and found the issue. The fields were served by an API the agent had generated earlier. The field labels were technically correct, but the API was filling them from the wrong source fields. The agent had moved the field I named, but because the data behind it was mismatched, the UI didn’t show the change I expected.

The agent wasn’t wrong. It just wasn’t aligned with reality.

2. The Two Levers: How to Avoid Misfires in Agentic Systems

🛠️ Lever 1: If You're Not Seeing the Result—Stop and Inspect

Even when your instructions are clear and explicit, an agent can misfire if the system underneath is inconsistent. If you ask for a visible change and nothing happens, don’t keep rewording the request.

Pause and look under the hood.

Ask yourself:

Is the agent acting on the expected data?

Are the labels right, but the data source wrong?

Is something being cached or misconfigured downstream?
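To make the first two questions concrete, here is a minimal Python sketch of the kind of check I mean. It assumes you can write down by hand which data source each visible field should use, and that you can read the mapping the generated API actually uses. The labels, source keys (`contact.city`, `contact.company`, and so on) and the function name are hypothetical.

```python
# Hand-written spec: where each visible field *should* get its data.
# All labels and source keys below are hypothetical.
EXPECTED_SOURCES = {
    "Name": "contact.full_name",
    "Email": "contact.email",
    "Location": "contact.city",
}

# What the agent-generated API actually wires up (e.g. read from its field config).
ACTUAL_SOURCES = {
    "Name": "contact.full_name",
    "Email": "contact.email",
    "Location": "contact.company",  # right label, wrong data source
}


def find_source_mismatches(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """List every field whose data source differs from the hand-written spec."""
    problems = []
    for label, want in expected.items():
        got = actual.get(label)
        if got is None:
            problems.append(f"{label}: missing from API mapping")
        elif got != want:
            problems.append(f"{label}: expected {want}, API uses {got}")
    # Fields the API serves that the spec never mentions (sorted for stable output).
    for label in sorted(actual.keys() - expected.keys()):
        problems.append(f"{label}: served by API but not in spec")
    return problems


if __name__ == "__main__":
    for problem in find_source_mismatches(EXPECTED_SOURCES, ACTUAL_SOURCES):
        print(problem)
```

With the sample data it prints a single line, `Location: expected contact.city, API uses contact.company`, which is exactly the "labels right, data source wrong" case. No amount of rewording the instruction would surface that.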

The trap is thinking your instruction was ignored. In reality, the agent may be faithfully executing a task against a broken or misleading system state.

Instructional clarity is table stakes. Inspecting the system is how you find out why the change didn’t land.

🧠 Lever 2: Add Verification Steps for Assumption-Sensitive Tasks

By default, agents assume the world is clean and well-labeled. Spoiler: it isn’t.

Before rearranging fields, a well-instructed agent should check:

Are field labels consistent with their IDs?

Are any fields duplicated or hidden from rendering?

Is the form layout dynamically generated (e.g. from config)?

You can guide this with a simple step:

“Before modifying layout, audit all field names and labels for consistency, and warn if mismatches are found.”

This costs almost nothing—and saves cycles.
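Here is a rough Python sketch of what that audit could involve, covering the first two checks listed above. It assumes the form is described by a simple list of fields with an internal `id`, a visible `label`, and a `hidden` flag. The `Field` shape, the helper names, and the slug-based label/ID comparison are assumptions for illustration, not how any particular framework stores forms. Whether the layout is generated from config is harder to detect in code, so the sketch leaves that question to you.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Field:
    id: str               # internal key, e.g. "location" (hypothetical shape)
    label: str            # visible label, e.g. "Location"
    hidden: bool = False  # present in config but not rendered


def slug(text: str) -> str:
    """Normalize a label to an ID-like form: 'Zip Code' -> 'zip_code'."""
    return re.sub(r"[^a-z0-9]+", "_", text.lower()).strip("_")


def audit_fields(fields: list[Field]) -> list[str]:
    """Return warnings instead of raising, so the agent can report before editing."""
    warnings = []
    # 1. Labels that don't match their IDs (heuristic: slugified label vs. id).
    for f in fields:
        if slug(f.label) != f.id:
            warnings.append(f"label/id mismatch: '{f.label}' is bound to id '{f.id}'")
    # 2. Duplicated IDs or labels.
    for kind, values in (("id", [f.id for f in fields]), ("label", [f.label for f in fields])):
        for value, count in Counter(values).items():
            if count > 1:
                warnings.append(f"duplicate {kind}: '{value}' appears {count} times")
    # 3. Fields that exist in config but will not render.
    for f in fields:
        if f.hidden:
            warnings.append(f"hidden field: '{f.id}' is not rendered")
    return warnings


form = [
    Field("name", "Name"),
    Field("email", "Email"),
    Field("company", "Location"),         # label says Location, id says company
    Field("email", "Email", hidden=True),
]

for warning in audit_fields(form):
    print(warning)
```

It returns warnings rather than failing, so the agent can report them and ask before touching the layout. The slug comparison is only a heuristic: a label like "E-mail" bound to `email` would be flagged too, which is arguably something you want to see before a rearrange.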

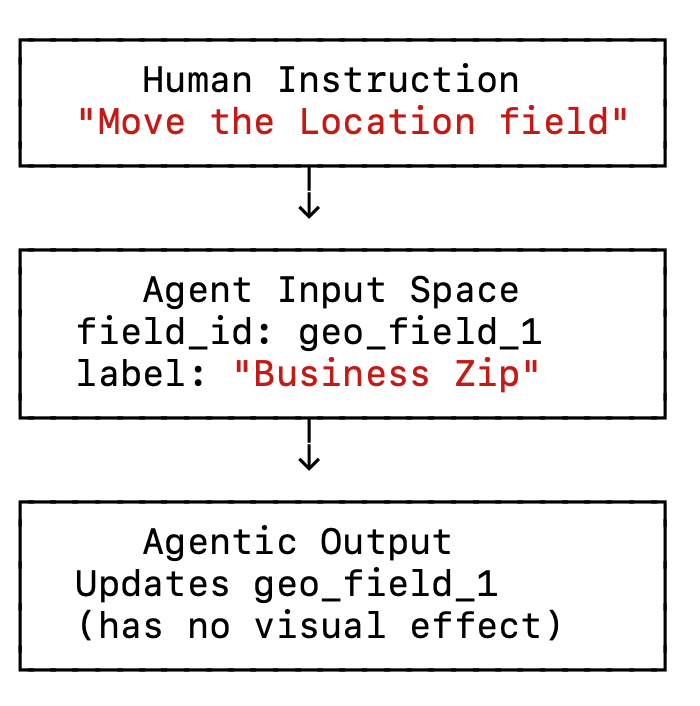

3. Diagram: The Agentic Instruction Gap

Problem: Label and ID mismatch → Instructions don’t map to reality
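The gap can be shown in a few lines. In this hypothetical Python sketch, the instruction names a field by its visible label, while the person giving it has a specific internal ID in mind; the check reports when the two don't point at the same field. The `id`/`label` dictionary shape, the example fields, and the function names are invented for illustration.

```python
# Hypothetical form config: each field has an internal id and a visible label.
FIELDS = [
    {"id": "name", "label": "Name"},
    {"id": "city", "label": "Company"},
    {"id": "company", "label": "Location"},  # labels and ids have drifted apart
]


def ids_for_label(label: str, fields: list[dict]) -> list[str]:
    """Every field id an agent could reasonably pick when given the visible label."""
    return [f["id"] for f in fields if f["label"] == label]


def check_target(label: str, intended_id: str, fields: list[dict]) -> str:
    """Compare what the instruction says (a label) with what you meant (an id)."""
    matches = ids_for_label(label, fields)
    if matches == [intended_id]:
        return f"ok: '{label}' is '{intended_id}'"
    if not matches:
        return f"gap: no field is labeled '{label}'"
    return f"gap: '{label}' resolves to {matches}, but you meant '{intended_id}'"


# "Move Location to the top", while picturing the field whose id is "city".
print(check_target("Location", "city", FIELDS))
```

Here the agent would faithfully move `company`, because that is what "Location" points to, while you are waiting for `city` to move.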

4. Checklist: Designing Better Instructions for Agentic Coders

✅ Before you delegate a task, check these:

🎯 Clarify What You Mean

☑ Are you referring to fields by both their visible label and their internal field key?

☑ Did you define the scope? (e.g. just this form vs. the whole layout)

☑ Is the task a UI change or a data-model change?

🔍 Question Assumptions

☑ Is the field config auto-generated or hand-written?

☑ Are there discrepancies between the code and UI?

☑ Should the agent audit before acting?

🧠 Structure Prompts with Intent

☑ Break the task into discrete steps (e.g. "Check", "Update", "Verify")

☑ Provide examples when naming or matching patterns

☑ Add fallback logic or ask for confirmation if mismatches arise
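One way to apply these three points is to template the prompt itself. This is a minimal Python sketch; the function name, its parameters, the example naming patterns, and the exact wording are hypothetical, and the string it returns is meant to be pasted into whatever agent you use.

```python
from typing import Optional


def build_task_prompt(change: str, scope: str, examples: Optional[list[str]] = None) -> str:
    """Assemble a Check / Update / Verify prompt with an explicit fallback."""
    steps = [
        f"Check: list every field in {scope} with its label, internal key, and data source. "
        "Warn me if any of them disagree.",
        f"Update: {change}. Change nothing outside {scope}.",
        "Verify: show me a diff, then confirm the rendered UI reflects the change.",
    ]
    lines = [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    if examples:
        # Concrete examples help when the agent has to match a naming pattern.
        lines.append("Match this naming pattern: " + ", ".join(examples))
    # Fallback: stop and ask rather than guess.
    lines.append("If you find a mismatch or anything ambiguous, stop and ask before editing.")
    return "\n".join(lines)


print(build_task_prompt(
    change="Move the Location field to the top of the form",
    scope="the contact form",
    examples=["contact_location", "contact_email"],
))
```

Keeping the scope in a single parameter means the "Check" and "Update" steps can't quietly disagree about which form is in play.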

📤 Validate the Output

☑ Ask for a diff or preview before changes are applied (many agents now offer this)

☑ Request confirmation that the visible UI reflects the change

☑ Run an automated test if the action is sensitive
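For a form like the one in the story, the diff and the automated test can both work on field order. This Python sketch assumes a hypothetical config where each field has a `label`, an `order`, and an optional `hidden` flag; the helper and test names are also illustrative. It checks the config, not the rendered page, so it's a cheap first gate rather than proof that the visible UI changed. A browser-level test would be the stronger confirmation.

```python
import difflib

# Hypothetical field config before and after the agent's edit.
BEFORE = [
    {"label": "Name", "order": 1},
    {"label": "Email", "order": 2},
    {"label": "Location", "order": 3},
    {"label": "Internal notes", "order": 4, "hidden": True},
]
AFTER = [
    {"label": "Location", "order": 0},
    {"label": "Name", "order": 1},
    {"label": "Email", "order": 2},
    {"label": "Internal notes", "order": 4, "hidden": True},
]


def rendered_order(fields: list[dict]) -> list[str]:
    """Labels of visible fields, in the order the form would render them."""
    visible = [f for f in fields if not f.get("hidden", False)]
    return [f["label"] for f in sorted(visible, key=lambda f: f["order"])]


def preview(before: list[dict], after: list[dict]) -> str:
    """A unified diff of the visible field order, to review before applying."""
    return "\n".join(difflib.unified_diff(
        rendered_order(before), rendered_order(after),
        fromfile="before", tofile="after", lineterm="",
    ))


def test_location_renders_first() -> None:
    # pytest-style check; run it in CI when layout changes are sensitive.
    assert rendered_order(AFTER)[0] == "Location"


if __name__ == "__main__":
    print(preview(BEFORE, AFTER))
    test_location_renders_first()
```

Hidden fields are filtered out before anything is compared, so a field that exists only in config can't make the test pass by accident.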

Final Word: Don’t Assume the Agent Sees What You See

As we build with agents, we’re not just managing code—we’re managing interpretation. The work is less about writing logic and more about bridging intent and system state.

So yes, agents move fast. But if they’re following the wrong map? All that speed gets you is a deeper hole.

Let’s make sure we’re solving the real problem.