I have three “golden” rules for working with AI:

Have AI write your prompts for you (don’t prompt, metaprompt)

Do deep research on all subjects

Check—at least sniff test—the results

This week, I broke rule three. And my partner Joanie called me out on it, rightly. Not a good look for an AI “Expert”.

The Setup

We’re switching health insurance plans. Four options, each with its own Summary of Benefits and Coverage document—those wonderfully dense PDFs that health insurers create to make comparison shopping as painful as possible.

I thought I’d be clever. Upload all four plans to a GPT folder, have it analyze them, create a nice comparison chart. Easy to share with Joanie for our decision-making. I picked GPT over Claude.AI specifically because it’s simpler to share outside an organization.

I even followed rule one religiously. I had GPT generate its own prompt for analyzing and comparing health plans. Meta-prompting at its finest.

What could go wrong?

The Explosion

Joanie opened the comparison. Started cross-referencing against the source documents.

“THEY’RE ALL WRONG!!”

Not some of them. Not mostly accurate with minor errors. All of them. Completely wrong.

The Systematic Failure

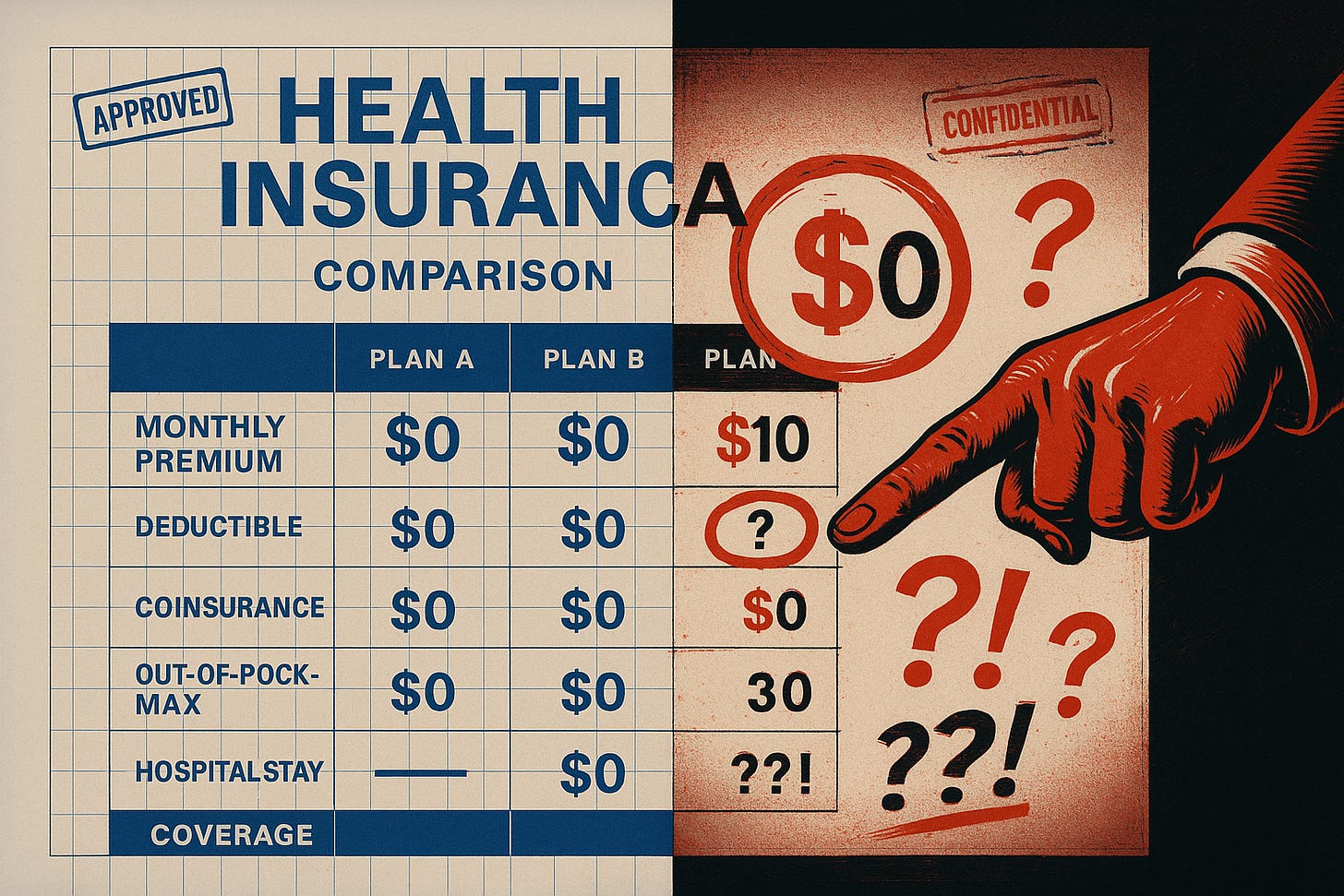

Here’s what GPT did to Plan A2:

GPT claimed: $1,500 family deductible

Reality: $0 deductible

GPT claimed: $8,000 in-network family out-of-pocket maximum

Reality: $10,000

GPT claimed: $25 primary care copay

Reality: $30

GPT claimed: 10% coinsurance for diagnostic tests

Reality: No charge (0%)

GPT claimed: 10% coinsurance for hospital stays

Reality: $500/day copay for first 3 days, then no charge

The errors weren’t random. GPT appeared to have copied F1’s cost structure and just... pasted it over A2. It made two completely different plans look nearly identical in its comparison chart.

Then it wrote this summary: “Both A2 and F1 have the same deductible and OOP maximum structure.”

They absolutely do not.

The Dark Comedy

What makes this particularly maddening is how confident GPT was. No hedging. No uncertainty. Just clean comparison tables with precise numbers—all wrong.

The prescription drug costs? Mixed data from two different plan types (POS and PPO) while labeling everything consistently.

The whole thing reads like GPT speed-read the first document, got confused, then just made up plausible-sounding numbers for the rest while maintaining an air of authority.

It’s the AI equivalent of that student who didn’t do the reading but speaks with complete confidence during class discussion.

Why This Happened

GPT’s vision models struggle with structured documents. PDFs with tables, formatted text, complex layouts—they’re harder to parse accurately than plain text. The models make mistakes, then the language model builds confidently on those mistakes.

Here’s what probably happened:

Vision model misread or partially read the PDFs

Language model filled gaps with plausible health insurance numbers

Consistency algorithm made sure all plans looked comparable

Output generator created clean tables with false precision

The result? Professional-looking analysis that was systematically wrong.

The Sniff Test I Didn’t Do

Here’s where I failed. The comparison looked reasonable. The numbers were in plausible ranges. The formatting was clean. I should have spot-checked a few key items against the source documents.

I didn’t.

The “sniff test” isn’t about verifying every detail. It’s about checking enough to catch systematic errors. Pick three random items from the comparison. Look them up in the source. If those match, you’re probably okay. If they don’t, you’ve got a problem.

I skipped it because I was in a hurry. Because the output looked professional. Because GPT is usually pretty good at structured tasks.

Joanie caught it because she actually read the documents. Revolutionary concept.

What This Means for Development Work

We talk about AI-assisted coding like it’s fundamentally different from other AI tasks. More structured. More verifiable. Better feedback loops through tests and type systems.

But here’s the thing: GPT failed at a simpler task than most coding work. Reading four standardized documents with consistent formatting and extracting comparable data points. No complex reasoning required. Just accurate reading and systematic comparison.

It couldn’t do it.

Yet we’re trusting these same models to:

Read complex codebases

Understand system architectures

Make refactoring decisions

Generate production code

The development feedback loops help. Tests catch many errors. Type systems catch more. Code review catches others.

But systematic misunderstandings? Those can slip through when they produce plausible code that compiles and passes existing tests while missing the actual requirement.

Practical Lessons

1. The sniff test is non-negotiable

Even for mundane tasks. Especially for mundane tasks where you’re tempted to skip verification because “it’s just a simple comparison.”

Pick three random data points. Verify them against source. If they’re wrong, everything’s suspect.

2. Professional formatting hides errors

Clean tables and confident prose make errors less obvious. The better the output looks, the more carefully you should verify it.

3. GPT isn’t the right tool for every job

I chose GPT for shareability. Should have chosen Claude Code for accuracy, verified the output, then shared the verified results.

Tool selection matters. Convenience isn’t everything when accuracy is critical.

4. Meta-prompting doesn’t save you

Having GPT write its own analysis prompt created a professionally structured workflow. It also gave GPT more opportunities to compound errors while maintaining consistency.

Good process helps. It doesn’t replace verification.

The Bottom Line

I’ve written extensively about AI reliability, hallucination detection, and verification strategies. I know better.

But I got lazy. The task seemed simple. The output looked professional. I was in a hurry.

Joanie caught my mistake before we made a $10,000 health insurance decision based on completely fabricated data (we would have eventually caught it).

That’s the real lesson. AI failures in mundane tasks—reading health insurance documents, summarizing meeting notes, comparing spreadsheets—don’t trigger our technical skepticism the way code generation does.

We assume if the task is simple enough, AI will get it right. But “simple” and “structured” don’t guarantee accuracy when the underlying model is guessing.

Always check your work. Even when—especially when—you think you don’t need to.

I’m Bob Matsuoka, writing about agentic coding and AI-powered development at HyperDev. For more practical insights on AI development tools, read my analysis of The Ghost in the Machine: Non-Deterministic Debugging or my deep dive into What’s In My Toolkit - August 2025. Three rules for working with AI. I follow two consistently. This week reminded me why all three matter.

Hey, great read as always, thanks for sharing this crucial reminder that even experts like you nead to sniff test AI output rigorously.

The confessional perspective of this piece is fantastic!

Your content has inspired many of my own thought threads, and I appreciate your contributions.