I need to tell you about a recent hiring investigation that revealed something important about AI-assisted development, and showed why the simplest approach is sometimes the hardest to execute.

The Assignment That Exposed Everything

We gave a candidate what seemed like a straightforward task: build an MVP data layer for a local events platform. The instructions were explicit:

"Speed and Simplicity > Complex and Complete"

"Should start locally in under 5 minutes"

Skip user authentication, booking functionality, payments

Basic CRUD operations and simple search
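To put the brief in perspective, here is a minimal sketch of the scale it seems to call for: a single-file data layer on Python's standard-library `sqlite3` module, with CRUD and a plain substring search. It needs no containers, services, or API keys, so it starts instantly. The `events` schema (`title`, `venue`, `starts_at`) and every function name below are hypothetical, since the brief as quoted doesn't specify them.

```python
import sqlite3

# Hypothetical schema: the assignment's actual fields are not specified.
SCHEMA = """
CREATE TABLE IF NOT EXISTS events (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT NOT NULL,
    venue TEXT NOT NULL,
    starts_at TEXT NOT NULL  -- ISO 8601 timestamp stored as text
)
"""

UPDATABLE = {"title", "venue", "starts_at"}  # whitelist of editable columns


def connect(path=":memory:"):
    """Open a database (in-memory by default) and ensure the table exists."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # rows behave like mappings
    conn.execute(SCHEMA)
    return conn


def create_event(conn, title, venue, starts_at):
    cur = conn.execute(
        "INSERT INTO events (title, venue, starts_at) VALUES (?, ?, ?)",
        (title, venue, starts_at),
    )
    conn.commit()
    return cur.lastrowid


def get_event(conn, event_id):
    row = conn.execute("SELECT * FROM events WHERE id = ?", (event_id,)).fetchone()
    return dict(row) if row else None


def update_event(conn, event_id, **fields):
    """Update only whitelisted columns; returns True if a row changed."""
    updates = {k: v for k, v in fields.items() if k in UPDATABLE}
    if not updates:
        return False
    # Column names come from the whitelist, so the f-string is safe;
    # values are still passed as bound parameters.
    assignments = ", ".join(f"{col} = ?" for col in updates)
    cur = conn.execute(
        f"UPDATE events SET {assignments} WHERE id = ?",
        (*updates.values(), event_id),
    )
    conn.commit()
    return cur.rowcount == 1


def delete_event(conn, event_id):
    cur = conn.execute("DELETE FROM events WHERE id = ?", (event_id,))
    conn.commit()
    return cur.rowcount == 1


def search_events(conn, term):
    """Simple search: substring match on title or venue via SQL LIKE
    (case-insensitive for ASCII in SQLite). Wildcards in `term` are not escaped."""
    pattern = f"%{term}%"
    rows = conn.execute(
        "SELECT * FROM events WHERE title LIKE ? OR venue LIKE ? ORDER BY starts_at",
        (pattern, pattern),
    ).fetchall()
    return [dict(r) for r in rows]


if __name__ == "__main__":
    conn = connect()
    eid = create_event(conn, "Jazz Night", "Riverside Hall", "2024-07-01T19:00")
    update_event(conn, eid, venue="Old Mill")
    print(search_events(conn, "jazz"))
```

The point of the sketch is the trade-off: a `LIKE` query covers "simple search" without any natural-language-to-SQL layer, and swapping `":memory:"` for a file path is the only change needed to persist data.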

What we got back was a masterclass in over-engineering. A multi-container Docker setup. A microservices architecture. A complete booking system (explicitly forbidden). And the dead giveaway: an OpenAI integration to convert natural-language queries into SQL.

Perfect code. Zero iteration artifacts. And a .windsurf file with the original AI prompt still sitting in the repo.

The Investigation Tools

Claude Code immediately flagged the submission as AI-generated. My own ai-code-review tool's "evaluate" mode missed it initially, but I've since improved the detection algorithms specifically for this pattern.

The real smoking gun? That .windsurf file. Reading the prompt made it clear: the candidate had essentially asked an AI agent to "build an event booking platform" and submitted the output wholesale.

Not even trying to hide it.

What This Really Tests

Here's the thing: we use metacoding challenges for a reason. We want to see thinking, not syntax fixing. We want to understand how candidates approach problems, make trade-offs, and follow constraints.

A smart candidate would have asked: "Is AI assistance allowed?" For an hour-long assessment, our answer is typically no. Otherwise, we pivot to a much harder architectural challenge where tool usage becomes necessary, and we evaluate how effectively the candidate leverages AI.

This candidate did neither.

The Bigger Problem

AI doesn't follow directions for you. In fact, it often works against constraint-following, because LLMs tend to default to "impressive" solutions rather than appropriate ones.

This candidate failed on the most fundamental requirement: following explicit instructions. They added forbidden functionality, chose complexity over simplicity, and delivered enterprise architecture for an MVP request.

The code quality was irrelevant. They couldn't follow basic directions.

Two Types of Technical Assessment

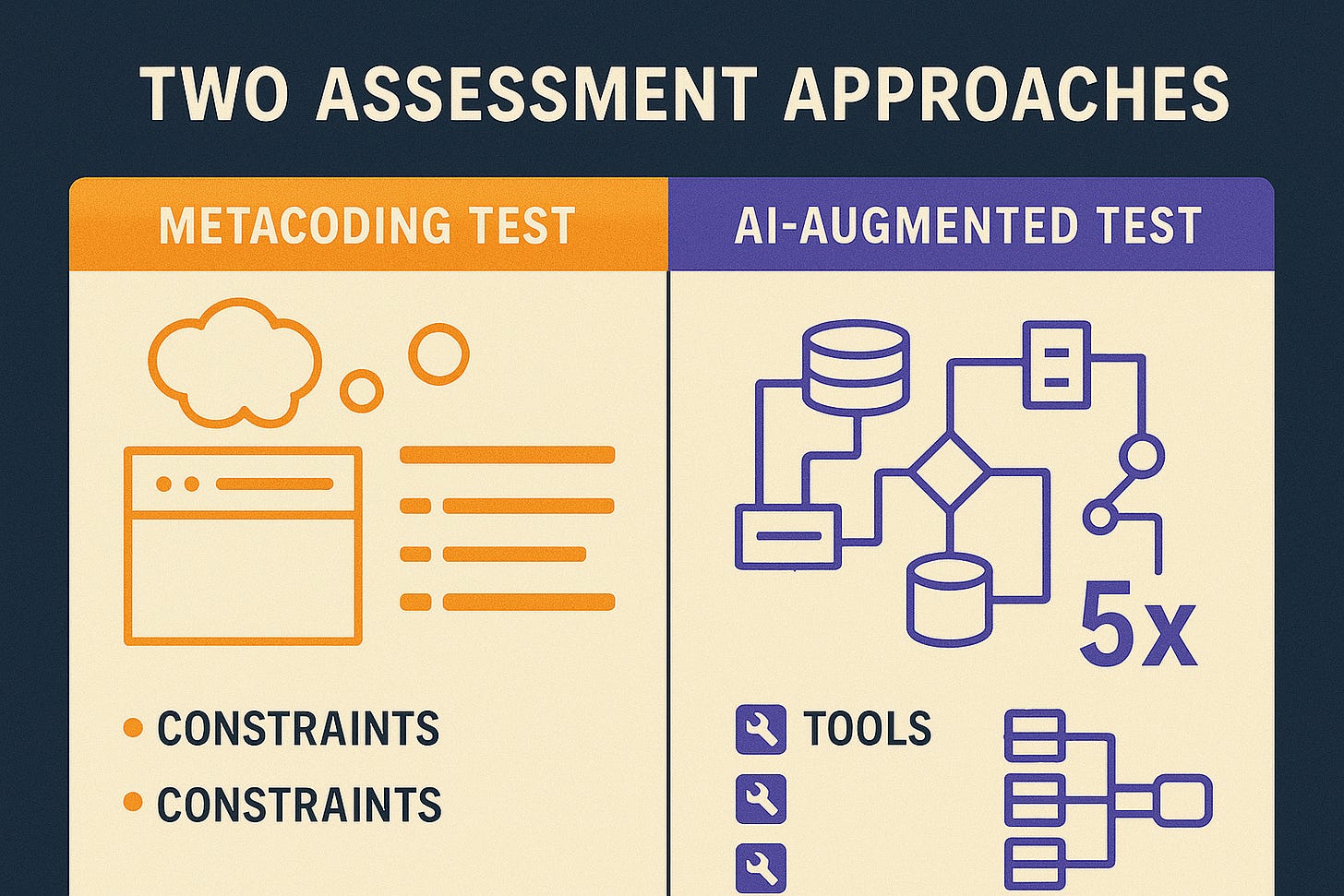

This experience clarified our approach to technical hiring:

Type 1: Pure Problem-Solving (No AI tools)

Focused on thinking and constraint-following

Tests ability to work within limitations

Reveals actual coding thought process

Type 2: AI-Augmented Development (Tools encouraged)

Much higher complexity expectations (5-10x)

Must explain tool usage and decision rationale

Tests professional AI integration skills

Requires sophisticated architecture and comprehensive test coverage

Both have their place. But you need to know which test you're taking.

The Professional Standard

Using AI isn't the problem—misrepresenting your work is. The candidate could have:

Asked about tool usage policies upfront

Disclosed AI assistance in submission

Demonstrated understanding of the generated code

Identified and fixed obvious security vulnerabilities

Instead, they presented AI output as original work while violating multiple explicit requirements.

That's not a technical failure. That's a professional one.

What We Actually Learned

The proliferation of AI coding tools requires us to evolve technical assessment. But the fundamentals remain unchanged:

Integrity matters more than perfect code. Following directions trumps technical sophistication. Understanding your solution beats generating impressive output. Professional transparency builds trust.

When a candidate can't distinguish between appropriate and inappropriate solutions—or worse, actively violates clear instructions—the technical quality becomes irrelevant.

The Real Lesson

AI is reshaping how we code. But it's not changing the fact that following directions remains the most important skill in professional software development.

Use AI tools. Be transparent about it. But remember—the hardest part of any coding challenge isn't writing the code.

It's understanding what you're actually being asked to build.

Bottom line: Perfect code that ignores requirements is worthless. Simple code that solves the actual problem is everything.