I wrote about pricing pressures in AI development earlier this month, predicting that current token economics couldn't last. This week, I discovered what those economics actually look like for heavy Claude Code users.

It's more dramatic than I expected.

A Reddit user named mattdionis built a usage tracker and shared something remarkable: they burned through over 1 billion tokens this month, worth $2,200+ at API rates, while paying just $200 for Claude Max. That's a value differential of more than 10x.

After running my own numbers, their usage looks conservative.

My Own Token Reality Check

My own AI development consumption from May 4th through June 29th tells an even more dramatic story. Over that roughly eight-week period, I consumed over 3.2 billion tokens, equivalent to $5,794 at API rates.

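Normalized to a monthly basis (the window spans about two billing cycles), that's roughly $3,000 a month, or about 15x the value of my $200 monthly subscription.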
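Because the usage window spans roughly two billing cycles, the fairest comparison normalizes the total to a per-month figure. Here is a quick sketch of that arithmetic using only the numbers quoted above. The calendar year is an assumption (the span contains no February, so the day count is identical in any year), and a month is approximated as 30 days.

```python
from datetime import date

# Figures quoted in the text.
api_equivalent_usd = 5_794          # total API-equivalent spend for the window
subscription_usd_per_month = 200    # Claude Max price

# Assumed year; May 4 to June 29 never crosses February, so any year works.
start, end = date(2025, 5, 4), date(2025, 6, 29)

days = (end - start).days + 1                   # inclusive day count
avg_per_day = api_equivalent_usd / days
per_month = api_equivalent_usd / (days / 30)    # assumption: 30-day months
ratio = per_month / subscription_usd_per_month

print(f"{days} days, about ${avg_per_day:,.0f}/day")
print(f"about ${per_month:,.0f}/month, {ratio:.1f}x the subscription")
```

With these inputs the window is 57 days, averaging about $102 a day and roughly $3,050 a month, or about 15x one month of the subscription. The all-days average sits below the heavy-day range that follows because lighter days pull it down.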

The daily breakdown is revealing:

Peak day (June 23rd): $442 worth of tokens in a single day

Typical heavy-usage day: $200-400 worth of tokens

Lighter days: Still $80-150 worth of token consumption

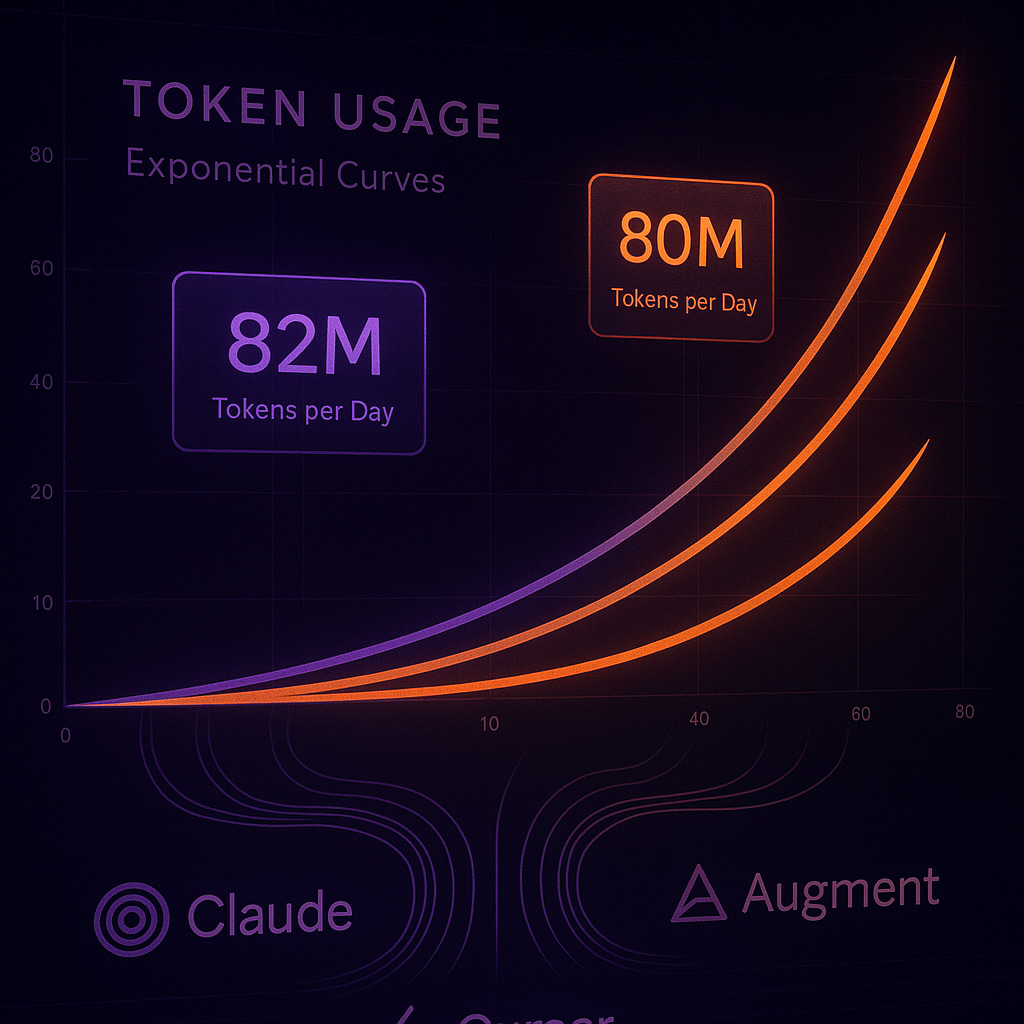

This consumption spans multiple tools in my development workflow. Claude Code handles most of my coding tasks, but I also use Claude AI Desktop for research and writing, Cursor for quick iterations, Augment Code for complex refactoring, and Windsurf for specific project types. Each tool runs on the same economic engine: compute-intensive LLMs priced, by all appearances, well below their actual cost.

This isn't "vibe coding" or experimental tinkering. Like mattdionis, I spend most of my time in planning and specification phases before any code generation. When you're potentially burning through hundreds of dollars' worth of tokens daily, every prompt needs to count.

The Numbers Behind Heavy AI Development

The Reddit thread reveals usage patterns that would have been unthinkable six months ago. The original poster leads agentic AI engineering at a startup and describes their workflow:

"I spend many hours planning and spec-writing in Claude Code before even one line of code is written... The code that is generated must be ~90% of the way to production-ready."

Other users reported similar token consumption: one hit $9,178 in equivalent API costs, and another burned through $7,600 worth of tokens. These aren't hobby projects; these are professionals building production systems.

What's particularly interesting is the methodology. As mattdionis notes: "I think the main mistake vibe-coders make is having CC write code wayyyyy too early. You have to be willing to put the time into planning and spec-writing."

Strategic Planning Actually Pays Off

The heavy emphasis on upfront planning before code generation makes sense from both quality and cost perspectives. When you're potentially consuming thousands of dollars' worth of tokens monthly, you can't afford to iterate through poorly specified requirements.

This represents a fundamental shift in development methodology. Traditional agile approaches emphasized rapid iteration and "fail fast" cycles. But when AI can generate entire codebases from detailed specifications, the optimal strategy becomes "plan extensively, then execute precisely."

The workflow described in the thread chains sequential specification files. Each has validation requirements (linting, type-checking, and testing against an 80% coverage target) that must pass before work proceeds to the next. It's more disciplined than most human-only development processes.
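To make the gating concrete, here is a minimal sketch of that control flow, assuming each spec is implemented and then checked before the next one starts. `GateReport`, `passes`, `run_specs`, and the spec file names are hypothetical; `implement_and_check` stands in for whatever actually runs code generation, the linter, the type checker, and the test suite with coverage.

```python
from dataclasses import dataclass
from typing import Callable

COVERAGE_TARGET = 0.80  # the 80% coverage target described in the thread


@dataclass
class GateReport:
    """Results of checking one spec's generated code (hypothetical structure)."""
    lint_ok: bool
    typecheck_ok: bool
    tests_ok: bool
    coverage: float  # fraction of lines covered, 0.0 to 1.0


def passes(report: GateReport) -> bool:
    """True only if every gate is green and coverage meets the target."""
    return (
        report.lint_ok
        and report.typecheck_ok
        and report.tests_ok
        and report.coverage >= COVERAGE_TARGET
    )


def run_specs(specs: list[str], implement_and_check: Callable[[str], GateReport]) -> list[str]:
    """Work through specs in order, stopping at the first one whose gates fail.

    Returns the specs completed so far; the failing spec must be fixed
    before anything after it is started.
    """
    completed: list[str] = []
    for spec in specs:
        if not passes(implement_and_check(spec)):
            break
        completed.append(spec)
    return completed


# Usage with canned results in place of real tool runs (hypothetical file names).
fake_results = {
    "01-data-model.md": GateReport(True, True, True, 0.91),
    "02-api.md": GateReport(True, True, True, 0.74),  # below the coverage target
    "03-ui.md": GateReport(True, True, True, 0.88),
}
print(run_specs(list(fake_results), fake_results.__getitem__))  # ['01-data-model.md']
```

Stopping at the first failure, rather than collecting every failure, mirrors the "must pass before proceeding" rule: later specs are never built on top of unvalidated code.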

The Subsidy Reality (Maybe)

Here's what makes this economically fascinating: Anthropic is almost certainly losing money on these power users. As one commenter observed: "For this level of usage, Anthropic is subsidizing people on the MAX plans—it's costing them more than the $200/month they're charging."

But wait. Let's do the math differently.

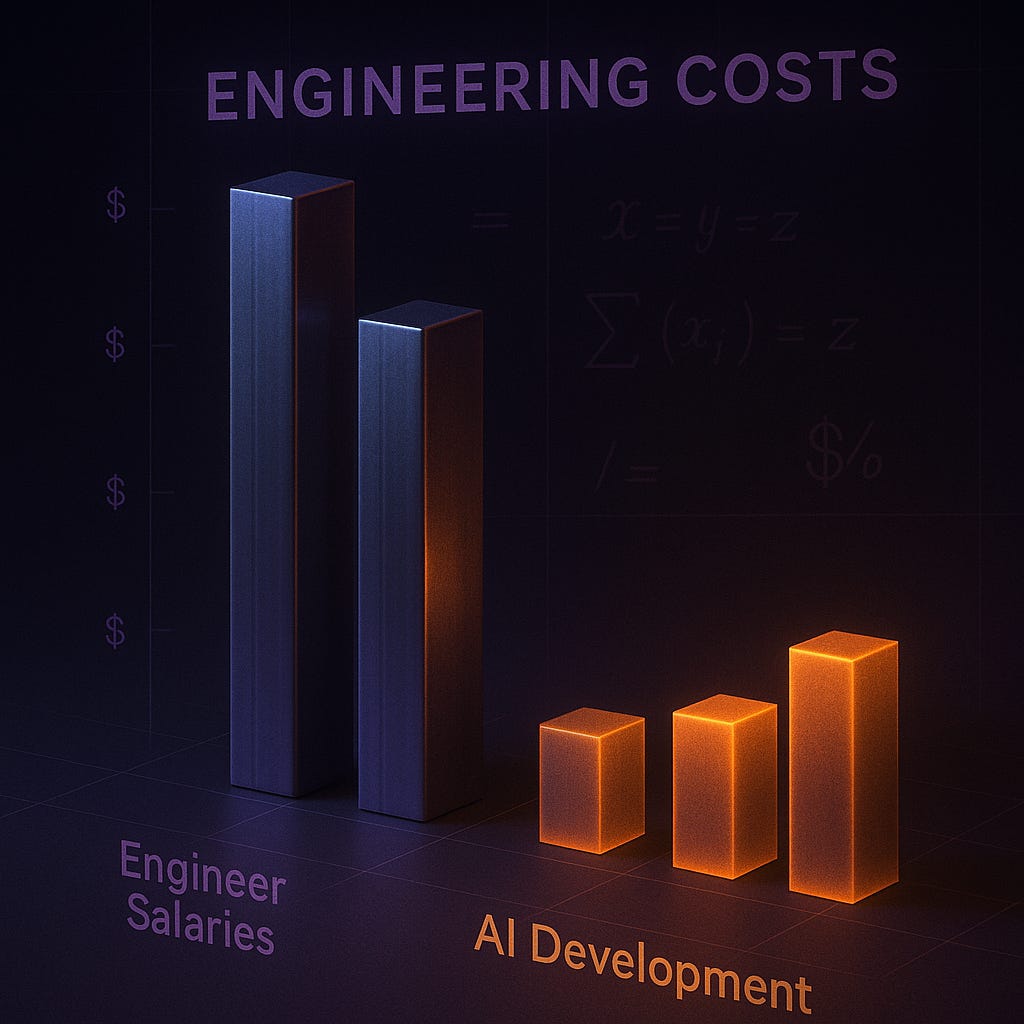

If I'm actually consuming $3,000+ worth of tokens monthly (extrapolating from my 8-week usage), that's not far from real engineering costs. But here's the thing: that's spread across five different projects. So we're talking roughly $600 per project monthly.

A senior US engineer runs $12-25k monthly. Offshore talent ranges $2.5-7k monthly. So $600 monthly for AI development capability on a single project? That's actually a credible bargain.
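The per-project comparison is simple division. The sketch below reuses the rough $3,000 monthly figure and the salary ranges above. It follows the framing of token spend and salary as comparable line items, which is a simplification rather than a measured equivalence.

```python
# Figures from the text: ~$3,000/month of API-equivalent usage across five
# projects, compared with the monthly salary ranges quoted above.
monthly_api_equivalent = 3_000
projects = 5
per_project = monthly_api_equivalent / projects   # $600 per project per month

salary_ranges = {
    "senior US engineer": (12_000, 25_000),
    "offshore engineer": (2_500, 7_000),
}

for role, (low, high) in salary_ranges.items():
    # Share of one engineer's monthly cost that a single project's token usage represents
    print(f"{role}: {per_project / high:.0%} to {per_project / low:.0%} of monthly cost")
```

That puts one project's token usage at roughly 2% to 5% of a senior US engineer's monthly cost and 9% to 24% of an offshore engineer's.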

There's definitely still a massive subsidy from Anthropic. But even if I paid the full $3,000+ monthly (broken across five projects), it would still be a bargain for what I'm delivering. Even with most of these being self-funded side projects, the return has been substantial.

For a startup with funding? I'd invest in that capability without hesitation. That makes the current opportunity even more compelling: you're getting engineering-level productivity at a fraction of engineering costs, with dramatically faster delivery.

AI companies are in a land-grab phase, betting that developer mindshare today translates to market dominance tomorrow. But the economics might be closer to sustainable than they appear.

The parallel to early cloud computing is striking. Amazon famously operated AWS at thin margins (or losses) for years to build market position. The bet: infrastructure costs decrease faster than usage grows, eventually reaching profitability with a locked-in customer base.

The Market Forces Reality Check

There's another perspective worth considering. In my recent conversations with industry insiders, including leadership at companies like Augment Code, there's a belief that market economics will eventually drive these prices down rather than up.

The argument: As competition intensifies and model efficiency improves, the cost per token should decrease significantly. Multiple players (OpenAI, Google, Anthropic, Meta) are racing to optimize inference costs. Historical precedent suggests compute-intensive technologies eventually become commoditized.

But here's the key caveat: We still have a long way to go before reaching that equilibrium. Current subsidy levels are unsustainable in the near term, even if long-term economics favor lower prices.

What This Means for Development Teams

If you're doing serious AI-assisted development, the subscription economics are compelling. Probably too compelling to ignore.

For individual developers: The productivity gains described in the thread represent genuine competitive advantages. When someone can build production-ready systems at 5-10x traditional speeds, that's not just efficiency—it's a different category of capability.

For engineering teams: The planning-heavy methodology emerging around AI development may require process changes. Traditional sprint cycles and story point estimation become less relevant when specification quality determines output quality.

For startups: This is the golden window. Access to engineering-level productivity at current pricing represents a massive competitive advantage. Small teams can now tackle projects that previously required much larger engineering organizations. While established companies debate governance and risk management, startups can move fast and build significant moats before the pricing window closes.

When the Other Shoe Drops

Current pricing is clearly unsustainable in the short term. The question isn't whether AI development costs will increase, but when and how much.

Infrastructure costs keep growing. While compute efficiency improves, the absolute scale of model training and inference continues to expand. Someone has to pay for those GPUs.

Market maturation is coming. As the developer ecosystem stabilizes around specific tools, pricing pressure will shift from customer acquisition to revenue optimization.

The subsidy war between AI providers will eventually end, likely once one or two players achieve dominant positions.

My prediction: Expect significant pricing changes within 12-18 months. The current $200 Max plans are essentially loss leaders that can't persist indefinitely.

Practical Recommendations

Start now. Current economics won't last.

Focus on methodology. The planning-heavy approaches described in the Reddit thread aren't just token-efficient—they're likely to remain best practices even when pricing changes.

Plan for transition. Build internal capabilities that don't completely depend on current pricing models. Understand which aspects of your workflow would survive 2-3x price increases.

Measure true productivity. Don't just track token usage. Measure actual development velocity, code quality, and time-to-production. These metrics will help justify higher costs when they inevitably arrive.
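One way to approach the question of which workflows would survive 2-3x price increases is a break-even check: divide the value a workflow delivers by what it costs, and see whether the proposed multiplier fits under that ceiling. The costs below come from this post. The $2,500 monthly value is purely an assumption, pegged to the low end of the offshore range quoted earlier, and `break_even_multiplier` is a hypothetical helper.

```python
def break_even_multiplier(monthly_cost: float, monthly_value: float) -> float:
    """Largest price multiplier at which cost still does not exceed value."""
    return monthly_value / monthly_cost


# Costs from the post; the value figure is an assumption for illustration.
scenarios = {
    "Max subscription": 200,
    "per-project API-equivalent": 600,
}
ASSUMED_MONTHLY_VALUE = 2_500

for name, cost in scenarios.items():
    limit = break_even_multiplier(cost, ASSUMED_MONTHLY_VALUE)
    for mult in (2, 3):
        verdict = "survives" if mult <= limit else "breaks"
        print(f"{name} at {mult}x: ${cost * mult:,} -> {verdict} (break-even {limit:.1f}x)")
```

Under that assumption both scenarios survive a 3x increase. The per-project API-equivalent cost breaks even at roughly 4.2x, while the subscription has far more headroom (12.5x).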

What We're Actually Documenting Here

The developers sharing their massive token consumption aren't just showing off usage statistics. They're documenting the early phase of a fundamental shift in how software gets built.

The methodology they're developing—heavy planning, specification-driven development, AI-assisted implementation—will likely define professional development practices for years to come. Just at very different price points.

Current pricing won't last. But the workflows emerging now? Those will persist long after the economics change.

The other shoe will drop, probably within the next 12 to 18 months. The question is whether you'll be ready when it does.

Related reading: The Other Shoe Will Drop - my original analysis of AI pricing sustainability