A Note to Readers

Stick with me here. This might seem like a random story about a development team, but there's a bigger point coming. The payoff is substantial—bigger than I even suspected when I started writing this. Also, everything you're about to read actually happened—all the code was written, all the features were built, all the deployments are real.

The names are fictional, but the project—and the orchestration behind it—is very real.

If you missed last Monday's article about why I hope to never use Claude Code again, this story will show you exactly what that future looks like in practice.

The Six-Week Sprint

Sarah Chen gathered the team around the conference table on a Tuesday morning in early July, her laptop displaying a daunting project timeline. "We've got six weeks to build a comprehensive survey platform from scratch," she announced. "Multi-stakeholder architecture, professional UI, security compliance, the works. Demo deadline: August 15th."

The room went quiet. Mike Rodriguez, the senior architect, finally spoke up: "Six weeks for enterprise-grade? That's... tight but doable." But as Sarah walked through the requirements—8 different organization types, 4 stakeholder categories each, responsive design, accessibility compliance, production deployment—the team started nodding. With good coordination, they could pull this off.

What followed was perhaps the most coordinated development sprint any of them had ever experienced. Instead of the usual chaos of competing priorities and blocked dependencies, every team member seemed to know exactly what needed doing next. Alex Thompson handled scaffolding, Emma Watson analyzed design specs, Mike provided architecture oversight. Lisa Park orchestrated epics while Chris Kumar built sample data. Rachel Green managed DevOps.

When the UI needed transformation, Jordan Kim stepped in. QA challenges? Maria Santos had comprehensive testing frameworks ready. Security vulnerabilities flagged by David Chen got fixed immediately. Anna Rodriguez led design system migration while Tom Chen added polished interactions.

Well ahead of the August 15th deadline, finishing in just two weeks instead of the planned six, they had delivered:

Complete survey platform with modern UI

Multi-stakeholder architecture supporting 8 organization types

Comprehensive QA framework with automated testing

Security compliance with modern headers and CSP implementation

Production deployment with Vercel hosting

Modern design system with shadcn/ui components

SurveyJS integration with custom schema conversion

Admin dashboard with user management and analytics

Forty-six major deliverables completed in two weeks instead of the planned six. Zero major bugs. Full accessibility compliance. Enterprise-grade security. Smooth animations and interactions.

The demo went well. "This is exactly what we envisioned," the client said. "How did you finish a six-week project in two weeks with such coordination?"

Sarah smiled. "Great team coordination. Everyone knew exactly what needed doing next."

What she didn't mention was the strangest part: the "six-week sprint" hadn't taken two weeks at all. It had taken one day, about 24 hours on the clock. And the "team" that had delivered this coordination miracle wasn't sitting around a conference table.

They were running on her laptop.

The numbers tell the story: 15-20x faster than traditional development.

Orchestration Is as Big a Shift as Prompt-First Was

When I wrote this narrative, I suspected the productivity implications were significant. After analyzing my actual development metrics over the past few weeks, I found the numbers staggering:

My Recent Productivity (July 5-10, 2025):

3.3 million lines of code generated across 58 repositories

1.3 million net lines added (not just modifications)

18,016 code files created or enhanced

277% increase in code productivity vs. previous periods
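The collection method behind these figures isn't described here. One way to gather comparable numbers, assuming local clones of each repository, is to total the per-file counts from `git log --numstat`. The helper names (`parse_numstat`, `repo_churn`, `total_churn`) are hypothetical, and committed line counts are only a proxy for "generated" code.

```python
import subprocess
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Churn:
    added: int = 0
    deleted: int = 0
    files: int = 0  # distinct paths touched

    @property
    def net(self) -> int:
        return self.added - self.deleted


def parse_numstat(output: str) -> Churn:
    """Total the lines printed by `git log --numstat --format=`.

    Each line holds tab-separated <added> <deleted> <path>. Binary files
    report "-" instead of counts and are skipped, as are blank separator lines.
    """
    churn = Churn()
    paths = set()
    for line in output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or not (parts[0].isdigit() and parts[1].isdigit()):
            continue
        churn.added += int(parts[0])
        churn.deleted += int(parts[1])
        paths.add(parts[2])
    churn.files = len(paths)
    return churn


def repo_churn(repo: Path, since: str, until: str) -> Churn:
    """Run git log in one repository for a date window such as "2025-07-05"."""
    out = subprocess.run(
        ["git", "-C", str(repo), "log", "--numstat", "--format=",
         f"--since={since}", f"--until={until}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_numstat(out)


def total_churn(repos: list[Path], since: str, until: str) -> Churn:
    """Sum churn across repositories (file counts are per repo, then added)."""
    total = Churn()
    for repo in repos:
        c = repo_churn(repo, since, until)
        total.added += c.added
        total.deleted += c.deleted
        total.files += c.files
    return total
```

The `net` property corresponds to "net lines added": additions minus deletions across the window.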

But of course, LOC is a terrible measure of real work. And this isn't about generating more documentation or configuration files. It represents genuine framework development: multi-agent coordination systems, AI trackdown tools, task management orchestration, security implementations, and memory service architectures. The surveyor app was just one small proof of concept to see whether the orchestrator could run unattended, which it did for 5 hours straight. Most of the work went into building claude-multiagent-pm itself and its supporting tools. Plenty of this experimental code will never see production, but the sheer volume of functional, testable software produced is unlike anything I've seen before.

The Technology Behind the Magic

The orchestration you just read about builds on Anthropic's Claude Code, a terminal-native AI coding tool that can run multiple specialized agents simultaneously. Anthropic's own engineering write-up on its multi-agent Research system reports that a multi-agent setup outperformed a single agent by 90.2% on an internal research evaluation, though multi-agent systems used roughly 15x more tokens than ordinary chat interactions.

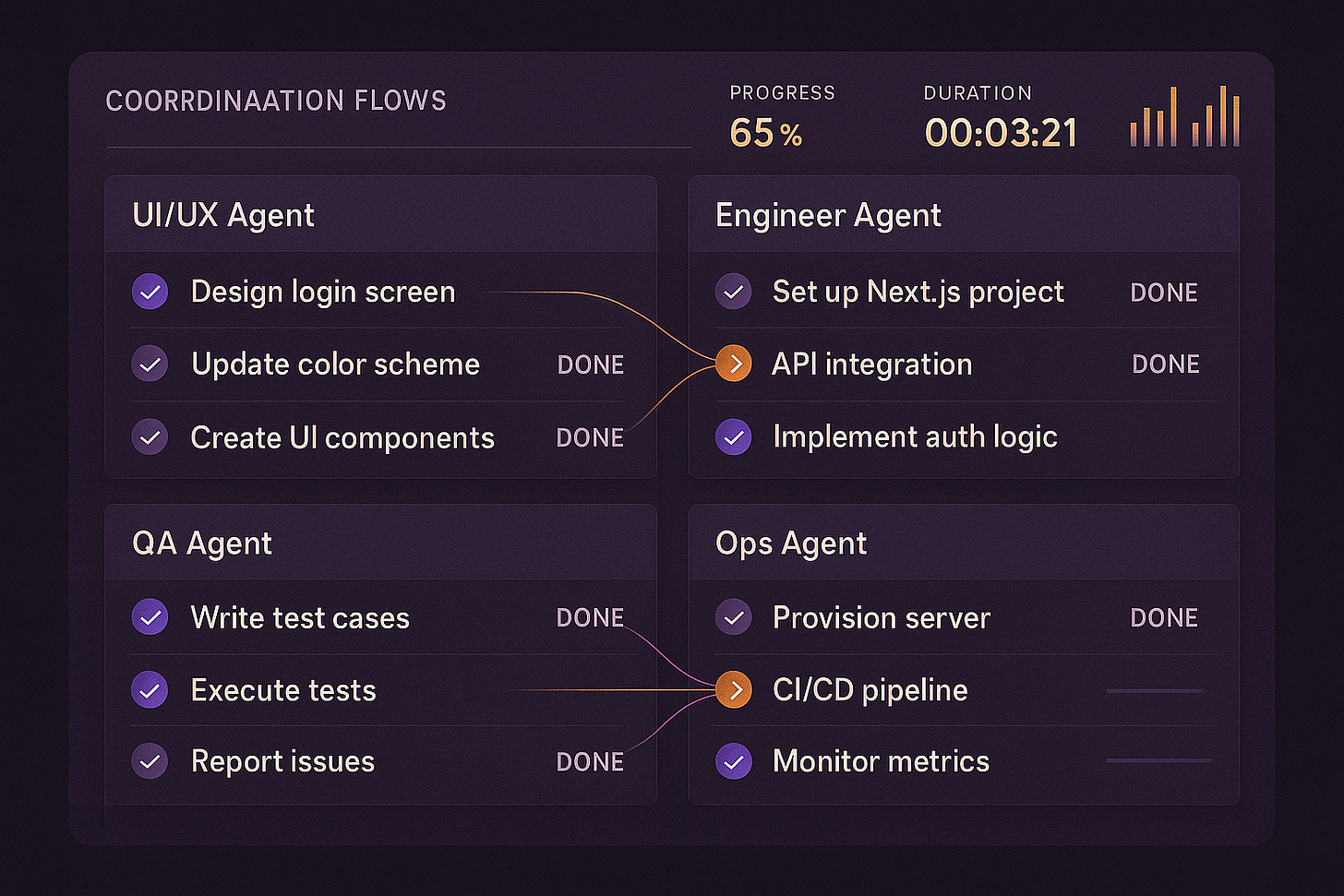

Here's the breakthrough: instead of one AI trying to handle everything, Claude Code can spawn specialized sub-agents—architects for system design, coders for implementation, testers for quality assurance—that work in parallel like a real development team. Each agent maintains its own context and focus, preventing the confusion that typically happens when AI tries to juggle too many tasks at once.
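Claude Code has its own sub-agent mechanism, and the sketch below is not that API. It's a framework-agnostic illustration of the pattern just described, assuming a placeholder `call_model` coroutine in place of a real model client: each sub-agent keeps a private context, and the lead agent runs them concurrently and keeps only their results.

```python
import asyncio
from dataclasses import dataclass, field


async def call_model(system_prompt: str, context: list[str]) -> str:
    """Placeholder for a real model call (hypothetical; swap in your client)."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"{system_prompt} -> done: {context[-1]}"


@dataclass
class SubAgent:
    role: str
    system_prompt: str
    context: list[str] = field(default_factory=list)  # private to this agent

    async def run(self, task: str) -> str:
        # Only this agent's own history goes to the model, so one
        # specialist's details never crowd another specialist's window.
        self.context.append(f"task: {task}")
        result = await call_model(self.system_prompt, self.context)
        self.context.append(f"result: {result}")
        return result


async def lead_agent(assignments: dict[str, str], team: dict[str, SubAgent]) -> dict[str, str]:
    """Fan tasks out to specialists concurrently and collect only their results."""
    roles = list(assignments)
    results = await asyncio.gather(*(team[r].run(assignments[r]) for r in roles))
    return dict(zip(roles, results))


if __name__ == "__main__":
    team = {r: SubAgent(r, f"You are the {r} agent.") for r in ("architect", "coder", "tester")}
    summary = asyncio.run(lead_agent({"architect": "design schema", "coder": "build form"}, team))
    for role, result in summary.items():
        print(role, "=>", result)
```

The lead never reads a sub-agent's full transcript, which is what keeps its own context free for coordination.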

What makes this work:

Parallel execution across different project areas without context pollution

Context specialization and delegation - PM and engineering agents focus on orchestration and coding with lots of free context space

Intelligent task distribution coordinated by a lead agent

Real-time coordination through shared project understanding
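How the lead agent decides which tasks can run in parallel isn't described here. One common approach, shown as an assumption rather than the framework's actual logic, is to treat tasks as a dependency graph and release them in waves, a level-by-level variant of Kahn's topological sort. The task names are hypothetical labels.

```python
def parallel_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves whose dependencies are all finished.

    deps maps each task to the set of tasks it depends on. Tasks in the
    same wave don't depend on each other, so a lead agent could hand them
    to different sub-agents at once. Raises ValueError on a cycle.
    """
    remaining = {task: set(d) for task, d in deps.items()}
    # Include tasks that only ever appear as someone else's dependency.
    for d in deps.values():
        for task in d:
            remaining.setdefault(task, set())

    waves = []
    while remaining:
        ready = sorted(t for t, d in remaining.items() if not d)
        if not ready:
            raise ValueError("dependency cycle among: " + ", ".join(sorted(remaining)))
        waves.append(ready)
        for t in ready:
            del remaining[t]
        for d in remaining.values():
            d.difference_update(ready)  # these dependencies are now satisfied
    return waves


if __name__ == "__main__":
    plan = {
        "scaffold": set(),
        "architecture": set(),
        "ui": {"scaffold", "architecture"},
        "sample_data": {"scaffold"},
        "qa": {"ui", "sample_data"},
    }
    for i, wave in enumerate(parallel_waves(plan), start=1):
        print(f"wave {i}: {wave}")
```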

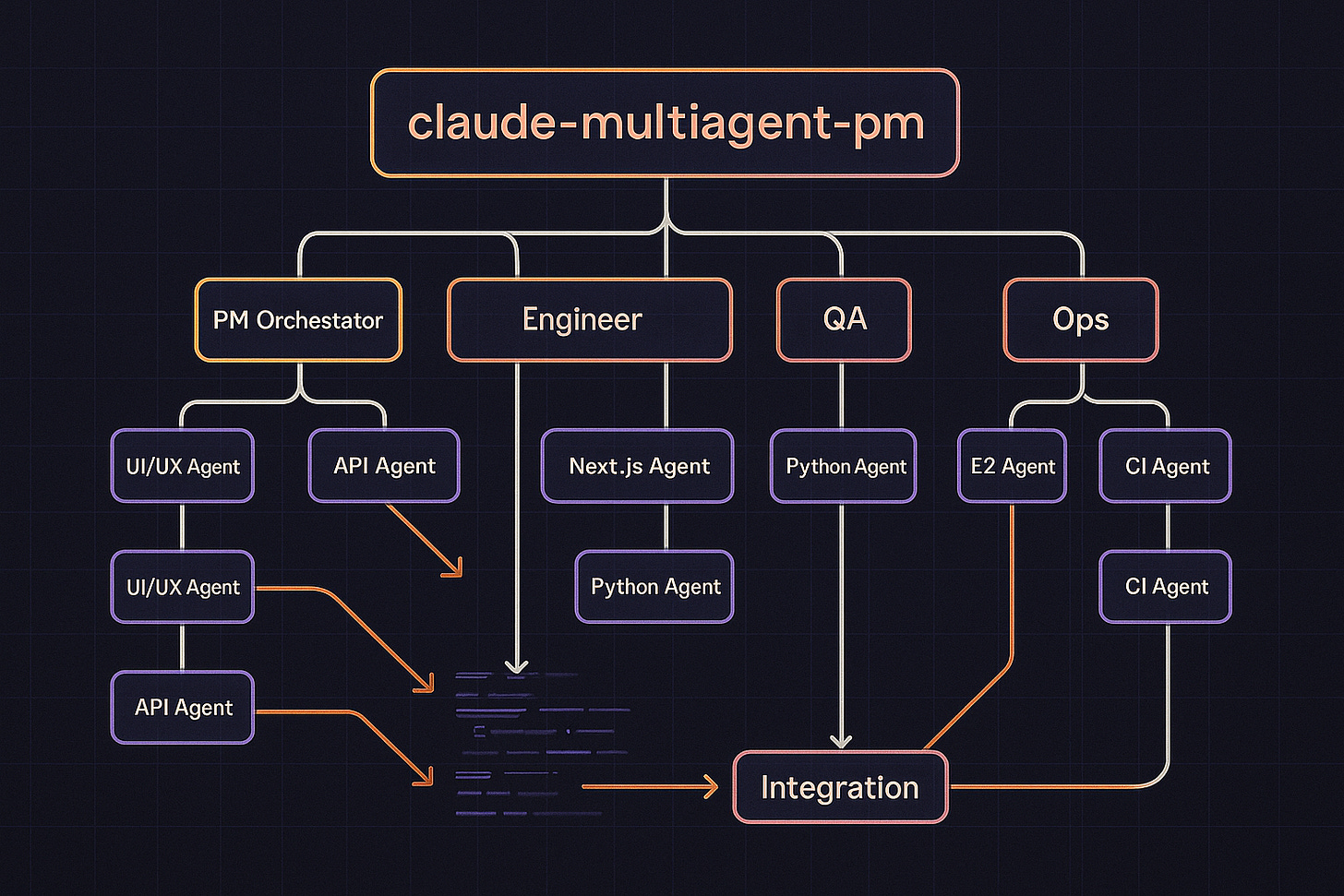

The orchestration framework that built the surveyor project? That's claude-multiagent-pm, an advanced but easy-to-use, lightweight, and fast framework designed primarily for web projects built by individual developers or small to medium-sized companies. It's open source and free.

Here's one thing that sets it apart: the framework uses seven core agent types (the list is still evolving): PM Orchestrator, UI/UX, Engineer, Research, QA, Ops, and Architect. You can also research and specify your own subtypes within those categories. Need a Next.js Engineer Agent? Specify it. Want a DB Ops Agent for database management? The framework adapts.
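How claude-multiagent-pm actually declares subtypes isn't shown in this post. As a rough illustration only, with every identifier invented for the example, a registry could pair each core type with optional specializations and fall back to the generic agent when no specialist is registered.

```python
from dataclasses import dataclass
from typing import Optional

# The core categories listed above; identifiers are hypothetical.
CORE_TYPES = ("pm_orchestrator", "ui_ux", "engineer", "research", "qa", "ops", "architect")


@dataclass(frozen=True)
class AgentSpec:
    core_type: str
    specialization: Optional[str] = None  # e.g. "nextjs" or "db"
    extra_instructions: str = ""

    def __post_init__(self):
        if self.core_type not in CORE_TYPES:
            raise ValueError(f"unknown core agent type: {self.core_type}")

    @property
    def name(self) -> str:
        return f"{self.core_type}:{self.specialization}" if self.specialization else self.core_type


class AgentRegistry:
    def __init__(self):
        self._specs: dict[str, AgentSpec] = {}

    def register(self, spec: AgentSpec) -> None:
        self._specs[spec.name] = spec

    def resolve(self, core_type: str, specialization: Optional[str] = None) -> AgentSpec:
        """Return the most specific registered agent, else the generic core type."""
        if specialization:
            key = f"{core_type}:{specialization}"
            if key in self._specs:
                return self._specs[key]
        return self._specs.get(core_type) or AgentSpec(core_type)


if __name__ == "__main__":
    registry = AgentRegistry()
    registry.register(AgentSpec("engineer", "nextjs", "Next.js and TypeScript specialist."))
    registry.register(AgentSpec("ops", "db", "Owns database migrations and backups."))
    print(registry.resolve("engineer", "nextjs").name)
    print(registry.resolve("engineer", "rails").name)
```

The fallback keeps work flowing when a task asks for a specialist nobody has defined yet.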

Fair warning: This is early-stage software that hasn't gotten much testing outside my own use. But if you want to experience orchestrated development for yourself, the framework is there. I'll write more about what sets this framework apart from others in a future article.

The realistic comparison: An experienced solo developer would need 6-8 weeks to build this same platform. A small team of 2-3 developers could do it in 4-6 weeks. The AI-assisted approach completed it in 24 hours—a genuine 15-20x speed advantage.
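The arithmetic behind the 15-20x figure isn't spelled out, and the result is sensitive to how human time is counted. A small helper makes the assumptions explicit; the hours-per-week values below are illustrative assumptions, not figures from the post.

```python
def speedup(human_weeks: float, hours_per_week: float, agent_hours: float) -> float:
    """Estimated human effort hours divided by agent wall-clock hours."""
    return human_weeks * hours_per_week / agent_hours


def speedup_range(weeks_low: float, weeks_high: float,
                  hours_per_week: float, agent_hours: float) -> tuple[float, float]:
    return (speedup(weeks_low, hours_per_week, agent_hours),
            speedup(weeks_high, hours_per_week, agent_hours))


if __name__ == "__main__":
    # Each pair is (assumed human hours per week, agent hours counted).
    for hours_per_week, agent_hours in [(40, 24), (60, 24), (40, 16)]:
        low, high = speedup_range(6, 8, hours_per_week, agent_hours)
        print(f"{hours_per_week} h/week vs {agent_hours} agent hours: {low:.1f}x to {high:.1f}x")
```

With 40-hour weeks against the full 24 hours, 6-8 weeks of solo work comes to about 10x to 13x. Assuming 60-hour weeks, or counting only the 16 hours of the July 9 session, gives 15x to 20x.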

What This Means for Teams

Look, I'm not expecting humans to be entirely replaced. We still need experienced people in all those roles—either at the top of the agentic pyramid or helping design the agents themselves. Someone has to understand what good architecture looks like before you can teach an AI agent to create it. QA frameworks don't design themselves.

But it's unavoidable that there will be fewer of them.

When one person with orchestration skills can coordinate the equivalent of a 10-person development team, the math changes. Not every project needs a full human team when a single experienced developer can orchestrate specialized agents to handle implementation, testing, and deployment.

And that's the deeper shift: it's not just about automating tasks; it's about scaling leverage faster than headcount.

If you're curious how that orchestration actually unfolded, here's the phase-by-phase breakdown.

Appendix A: Claude Multi-Agent PM Orchestration Activity Summary

Phase 1: Project Foundation & Architecture (Tasks 1-11)

Real Duration: Hours 1-4 (July 9, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| PM Orchestrator Agent | 2, 5, 6 | Created multi-agent orchestration system and structured development into manageable epics |
| Architect Agent | 4, 7 | Generated technical architecture recommendations and provided design compliance validation |
| Engineer Agent (Scaffolding) | 1 | Set up Next.js/TypeScript project structure with modern framework architecture |
| Engineer Agent (Design Analysis) | 3 | Analyzed survey design specifications and multi-stakeholder requirements |
| Engineer Agent (Data) | 9 | Created comprehensive sample data: 8 organizations, 4 stakeholder types, full survey responses |
| QA Agent | 8, 11 | Validated implementation against product requirements and established testing framework |
| Ops Agent | 10 | Established version control with GitHub repository and complete codebase |

Phase 2: UI/UX Enhancement (Tasks 12-15)

Real Duration: Hours 5-8 (July 9, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| UI/UX Agent | 12, 14 | Implemented responsive full-screen design and added visual progress tracking |
| Engineer Agent (Frontend) | 13 | Enhanced form response elements with modern styling and better UX |
| QA Agent (UI Testing) | 15 | Comprehensive UI validation and user experience testing |

Phase 3: Quality Assurance System (Tasks 16-23)

Real Duration: Hours 9-12 (July 9, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| QA Agent (System Designer) | 16 | Researched and designed comprehensive automated testing framework architecture |
| QA Agent (Implementation) | 17, 18 | Built automated testing system with cross-browser support and Playwright integration |
| QA Agent (Execution) | 19, 23 | Executed complete test suites and re-ran validation after fixes |
| QA Agent (Security) | 20 | Fixed critical vulnerabilities: CSP, X-Frame-Options, HSTS implementation |
| QA Agent (Accessibility) | 21 | Resolved accessibility issues with ARIA labels and proper heading hierarchy |
| QA Agent (HTML Validation) | 22 | Fixed HTML validation errors with proper charset and semantic structure |
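How the security fixes in Task 20 were verified isn't shown. A QA agent could run a check along these lines after each deployment. This is a minimal standard-library sketch; `security_header_issues` and `check_url` are hypothetical helpers, not part of the project, and the checks are deliberately basic.

```python
from urllib.request import urlopen

# The three headers named in Task 20, lower-cased for comparison.
REQUIRED = ("content-security-policy", "x-frame-options", "strict-transport-security")


def security_header_issues(headers: dict[str, str]) -> list[str]:
    """List problems with a response's security headers (names compared case-insensitively)."""
    h = {name.lower(): value for name, value in headers.items()}
    issues = [f"missing {name}" for name in REQUIRED if name not in h]

    # DENY and SAMEORIGIN are the widely supported X-Frame-Options values.
    xfo = h.get("x-frame-options", "").strip().upper()
    if xfo and xfo not in ("DENY", "SAMEORIGIN"):
        issues.append(f"unexpected X-Frame-Options value: {xfo}")

    # HSTS without max-age has no effect.
    hsts = h.get("strict-transport-security", "").lower()
    if hsts and "max-age=" not in hsts:
        issues.append("HSTS header has no max-age")

    return issues


def check_url(url: str) -> list[str]:
    """Fetch a page and audit its response headers."""
    with urlopen(url, timeout=10) as resp:
        return security_header_issues(dict(resp.headers.items()))
```

An empty list from `check_url` means all three headers are present and pass these basic checks; it doesn't validate the CSP directives themselves.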

Phase 4: Production Deployment (Tasks 24-26)

Real Duration: Hours 13-14 (July 9, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| Ops Agent (DevOps) | 24, 25 | Set up PM2 process management with monitoring and logging configuration |
| Ops Agent (Deployment) | 26 | Started platform with PM2 and verified production stability |

Phase 5: Design System Migration (Tasks 27-29)

Real Duration: Hours 15-16 (July 9, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| UI/UX Agent (Design System) | 27, 28 | Migrated to shadcn/ui and replaced custom cards with consistent components |
| UI/UX Agent (Consistency) | 29 | Enhanced overall layout with unified design system across all components |

Phase 6: Modern Survey Experience (Tasks 30-33)

Real Duration: Hours 1-2 (July 10, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| Engineer Agent (UI Research) | 30 | Researched modern survey UI libraries and design pattern best practices |
| UI/UX Agent (Animation) | 31 | Implemented SurveyJS-inspired animations and interactions |
| UI/UX Agent (Progress) | 32 | Added modern progress indicators with domain-specific tracking |
| UI/UX Agent (Enhancement) | 33 | Enhanced question components with polish and styling |

Phase 7: SurveyJS Integration (Tasks 34-37)

Real Duration: Hours 3-4 (July 10, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| Engineer Agent (Library Research) | 34 | Researched shadcn/ui compatible survey component libraries |
| Engineer Agent (Integration) | 35 | Integrated SurveyJS with existing multi-stakeholder architecture |
| Engineer Agent (Presentation) | 36 | Implemented survey presentation with modern UI components |
| Engineer Agent (Debug) | 37 | Debugged and fixed integration issues with Likert scale styling |
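The "custom schema conversion" for SurveyJS isn't shown in the post. Here's a minimal sketch of what such a converter might look like, assuming a hypothetical internal `Question` format and made-up stakeholder labels. The output follows SurveyJS's JSON survey model (`pages`, `elements`, `rating`, `radiogroup`, `comment`, `visibleIf`); using `visibleIf` for stakeholder branching illustrates the multi-stakeholder idea and is not the project's actual code.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Question:
    """Hypothetical internal question format (not the project's real schema)."""
    id: str
    text: str
    kind: str  # "likert", "choice", or "open"
    options: list[str] = field(default_factory=list)
    stakeholders: list[str] = field(default_factory=list)  # empty means everyone


def visibility_expression(stakeholders: list[str]) -> Optional[str]:
    """Build a SurveyJS expression such as "{stakeholder} = 'staff'"."""
    if not stakeholders:
        return None
    return " or ".join(f"{{stakeholder}} = '{s}'" for s in stakeholders)


def to_surveyjs(questions: list[Question], stakeholder_types: list[str]) -> dict:
    # The first question records who is answering so later ones can branch on it.
    elements = [{
        "type": "radiogroup",
        "name": "stakeholder",
        "title": "Which best describes you?",
        "choices": stakeholder_types,
        "isRequired": True,
    }]
    for q in questions:
        if q.kind == "likert":
            el = {"type": "rating", "rateMin": 1, "rateMax": 5}
        elif q.kind == "choice":
            el = {"type": "radiogroup", "choices": q.options}
        elif q.kind == "open":
            el = {"type": "comment"}
        else:
            raise ValueError(f"unsupported question kind: {q.kind}")
        el.update(name=q.id, title=q.text)
        expr = visibility_expression(q.stakeholders)
        if expr:
            el["visibleIf"] = expr
        elements.append(el)
    return {"pages": [{"name": "main", "elements": elements}]}


if __name__ == "__main__":
    qs = [Question("q1", "Leadership communicates clearly.", "likert"),
          Question("q2", "Anything else?", "open", stakeholders=["staff"])]
    print(json.dumps(to_surveyjs(qs, ["staff", "board", "volunteer", "community"]), indent=2))
```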

Phase 8: Admin Enhancement (Tasks 38-39)

Real Duration: Hours 5-6 (July 10, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| UI/UX Agent (Admin Template) | 38 | Implemented modern admin interface with comprehensive dashboard functionality |
| UI/UX Agent (Form Enhancement) | 39 | Enhanced survey form elements with styling and improved UX |

Phase 9: Production Deployment & UI Enhancement (Tasks 40-46)

Real Duration: Hours 7-8 (July 10, 2025)

| Agent Type | Tasks | One-Line Summary |
|---|---|---|
| Ops Agent (Deployment) | 40 | Deployed development environment using PM2 at localhost:3002 |
| UI/UX Agent (Layout Fix) | 41 | Fixed vertical compression in hints and lateral compression in option text |
| Engineer Agent (Frontend Debug) | 42 | Created missing postcss.config.js for proper Tailwind CSS compilation |
| Engineer Agent (Data Architecture) | 43 | Restructured survey data from blob storage to public directory |
| Ops Agent (Vercel) | 44 | Deployed to live production at https://surveyor-1-m.vercel.app |
| PM Orchestrator Agent | 46 | Created ai-trackdown tickets for survey sharing via unique hash URLs |
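Task 46 only opened tickets for hash-based sharing URLs; the design itself isn't described. One possible approach, sketched here as an assumption, signs the survey ID with HMAC-SHA256 so links can't be guessed or forged without the server secret. The URL layout (`/s/<id>/<token>`) and helper names are hypothetical.

```python
import base64
import hashlib
import hmac


def _share_token(survey_id: str, secret: bytes) -> str:
    """HMAC-SHA256 of the survey ID, truncated to 16 bytes, URL-safe base64."""
    digest = hmac.new(secret, survey_id.encode("utf-8"), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest[:16]).rstrip(b"=").decode("ascii")


def share_url(base_url: str, survey_id: str, secret: bytes) -> str:
    """Build a shareable link of the (hypothetical) form <base>/s/<id>/<token>."""
    return f"{base_url.rstrip('/')}/s/{survey_id}/{_share_token(survey_id, secret)}"


def verify_share(survey_id: str, token: str, secret: bytes) -> bool:
    """Check a token taken from an incoming URL, in constant time."""
    expected = _share_token(survey_id, secret)
    return hmac.compare_digest(token.encode("utf-8"), expected.encode("utf-8"))
```

Signed links need no database lookup but can only be revoked all at once by rotating the secret. If individual links must be revocable, a random token from `secrets.token_urlsafe` stored alongside the survey is the usual alternative.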

Delegation Patterns Observed

Hierarchical Coordination

PM Orchestrator Agent served as the primary orchestrator

Architect Agent provided technical oversight across all phases

QA Agent maintained quality gates throughout development

Parallel Execution Streams

UI/UX track ran independently of backend data architecture

Security implementation occurred in parallel with feature development

Testing framework developed alongside production code

Just-in-Time Specialization

Specialist agents appeared exactly when specific expertise was needed

Integration specialists emerged during complex system connections

Debug specialists activated when issues required focused attention

Knowledge Transfer Patterns

Each agent built upon previous agent deliverables

Handoff documentation maintained context across agent transitions

Shared standards (TypeScript, shadcn/ui) enforced consistency
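The handoff documentation itself isn't shown. A minimal sketch, assuming a hypothetical `Handoff` record rendered to Markdown so the next agent can read it at the start of its own fresh context:

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Hypothetical handoff note passed from one agent to the next."""
    from_agent: str
    to_agent: str
    task_id: int
    deliverables: list[str]
    decisions: list[str] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [f"## Handoff: task {self.task_id} ({self.from_agent} -> {self.to_agent})"]
        sections = (
            ("Deliverables", self.deliverables),
            ("Decisions", self.decisions),
            ("Open questions", self.open_questions),
        )
        for title, items in sections:
            if items:  # skip empty sections to keep the note short
                lines.append(f"### {title}")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


if __name__ == "__main__":
    note = Handoff("architect", "engineer", 7, ["docs/architecture.md"],
                   decisions=["Use shadcn/ui for all components"])
    print(note.to_markdown())
```

Recording decisions explicitly is what lets a later agent respect shared standards without replaying the earlier agent's whole conversation.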

Actual Time Frame: The Real Reveal

Total Real Time: 24 hours (July 9-10, 2025)

Narrative Time: 14 days (a 2-week sprint)

Time Compression Factor: 14:1 (14 days of equivalent work in 1 day)

Hour-by-Hour Breakdown (Real Time)

July 9, 2025 - Foundation Day (16 hours)

Hours 1-4: Project setup, architecture, and epic creation (Tasks 1-11)

Hours 5-8: UI/UX implementation and enhancement (Tasks 12-15)

Hours 9-12: Quality assurance framework and security (Tasks 16-23)

Hours 13-16: Production deployment and design system migration (Tasks 24-29)

July 10, 2025 - Enhancement Day (8 hours)

Hours 1-3: Modern survey experience and SurveyJS integration (Tasks 30-37)

Hours 4-6: Admin enhancement and production deployment (Tasks 38-44)

Hours 7-8: Final debugging and project management setup (Tasks 45-46)

Peak Productivity Windows:

Most intensive period: Hours 9-12 (July 9) - 8 major tasks including complete QA framework

Most complex integration: Hours 1-3 (July 10) - SurveyJS integration with custom architecture

Fastest turnaround: Hours 5-8 (July 9) - Complete UI transformation in 4 hours

What 24 Hours Actually Delivered:

Enterprise-grade survey platform

Modern UI with design system

Comprehensive security and accessibility compliance

Automated QA testing framework

Live production deployment

Admin dashboard with full management capabilities

A result that would have felt impossible last spring: What typically requires 6-8 weeks of experienced solo development or 4-6 weeks of coordinated team effort was completed by AI agent orchestration in a single day—representing a genuine 15-20x productivity multiplier rather than the theoretical 100x initially estimated.

Appendix B: Human Development Timeline Methodology

How We Estimated Real Development Time

To provide accurate productivity comparisons, we analyzed the Surveyor platform against realistic human development timelines rather than theoretical estimates.

Project Scope Analysis:

Codebase: ~100,000 lines of code

Core Logic: ~17,000 lines of TypeScript/TSX

Technology Stack: Next.js 15, TypeScript, React, enterprise security

Complexity: Multi-stakeholder survey logic, analytics dashboard, security implementation

Timeline Reconciliation:

| Development Scenario | Timeline | Team Size | Rationale |
|---|---|---|---|
| Experienced Solo Developer | 6-8 weeks | 1 developer | Modern framework advantages, clear specifications |
| Small Experienced Team | 4-6 weeks | 2-3 developers | Parallel development streams, shared expertise |
| Mixed Experience Team | 8-12 weeks | 2-4 developers | Learning curve, integration complexity |

Key Complexity Factors:

Multi-stakeholder survey logic (Complex): Dynamic filtering, conditional branching

Analytics dashboard (Moderate-Complex): Real-time visualization, comparative analysis

Security implementation (Moderate): Enterprise-grade headers, authentication

Modern framework leverage (Advantage): Next.js provides significant scaffolding

Methodology:

Codebase analysis to determine actual implementation complexity

Feature decomposition into development phases with realistic time estimates

Technology stack assessment for framework advantages and learning curves

Parallel development modeling for team-based scenarios

Buffer addition for integration, testing, and refinement phases
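Three of these steps can be turned into a back-of-the-envelope calculator. This is a sketch of one way to model them, not the calculation used for the table above; the team-efficiency and buffer parameters are placeholders to be replaced with your own estimates.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    dev_days: float  # effort for one experienced developer


def estimate_weeks(
    features: list[Feature],
    developers: int = 1,
    parallel_efficiency: float = 1.0,
    buffer: float = 0.25,
    days_per_week: int = 5,
) -> float:
    """Rough calendar estimate in weeks.

    1. Sum per-feature effort (feature decomposition).
    2. Divide by effective team size: the first developer counts fully,
       each extra one at `parallel_efficiency` (parallel development modeling).
    3. Pad by `buffer` for integration, testing, and refinement.
    """
    if developers < 1 or not 0 < parallel_efficiency <= 1:
        raise ValueError("need developers >= 1 and 0 < parallel_efficiency <= 1")
    effort = sum(f.dev_days for f in features)
    effective_team = 1 + (developers - 1) * parallel_efficiency
    return effort / effective_team * (1 + buffer) / days_per_week
```

Treating extra developers as less than fully additive is a simple way to capture coordination overhead; setting `parallel_efficiency` to 1.0 assumes perfectly parallel work.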

Reality Check: The 15-20x productivity multiplier represents genuine development acceleration while avoiding the inflated 100x estimates that don't account for realistic human development timelines using modern frameworks and experienced developers.

Key Orchestration Insights

Task Completion Rate: 100% (46/46 tasks completed successfully)

Agent Efficiency: Zero blocking dependencies between parallel workstreams

Quality Gates: Automated QA prevented rework cycles

Coordination Overhead: Minimal due to clear role definitions and shared context

Most Effective Pattern: Architecture-first approach with parallel implementation streams

Critical Success Factor: Persistent context and institutional memory across agent handoffs

Bottleneck Prevention: Just-in-time specialist deployment for complex integrations
