Nine engineering leaders walk into a garden. Sounds like the setup for a joke, but what happened next was no laughing matter.

On June 18th, I hosted what was supposed to be a casual afternoon in Hastings-on-Hudson—garden tour at 2pm, some demos, good conversation, dinner if people wanted to stick around. The guest list included Google alums who'd built enterprise LLM solutions, startup founders scaling AI platforms, consultants reinventing business software. All practitioners actually shipping with agentic AI tools, not theorists or evangelists.

What emerged wasn't the usual conference-circuit pontificating. It was something more unsettling: despite working in complete isolation across different companies and problem domains, these builders had converged on nearly identical insights about how software development is fundamentally changing.

The convergence was so striking that it has catalyzed plans for larger salon-style gatherings starting this fall in New York City. But first, let me tell you what we discovered.

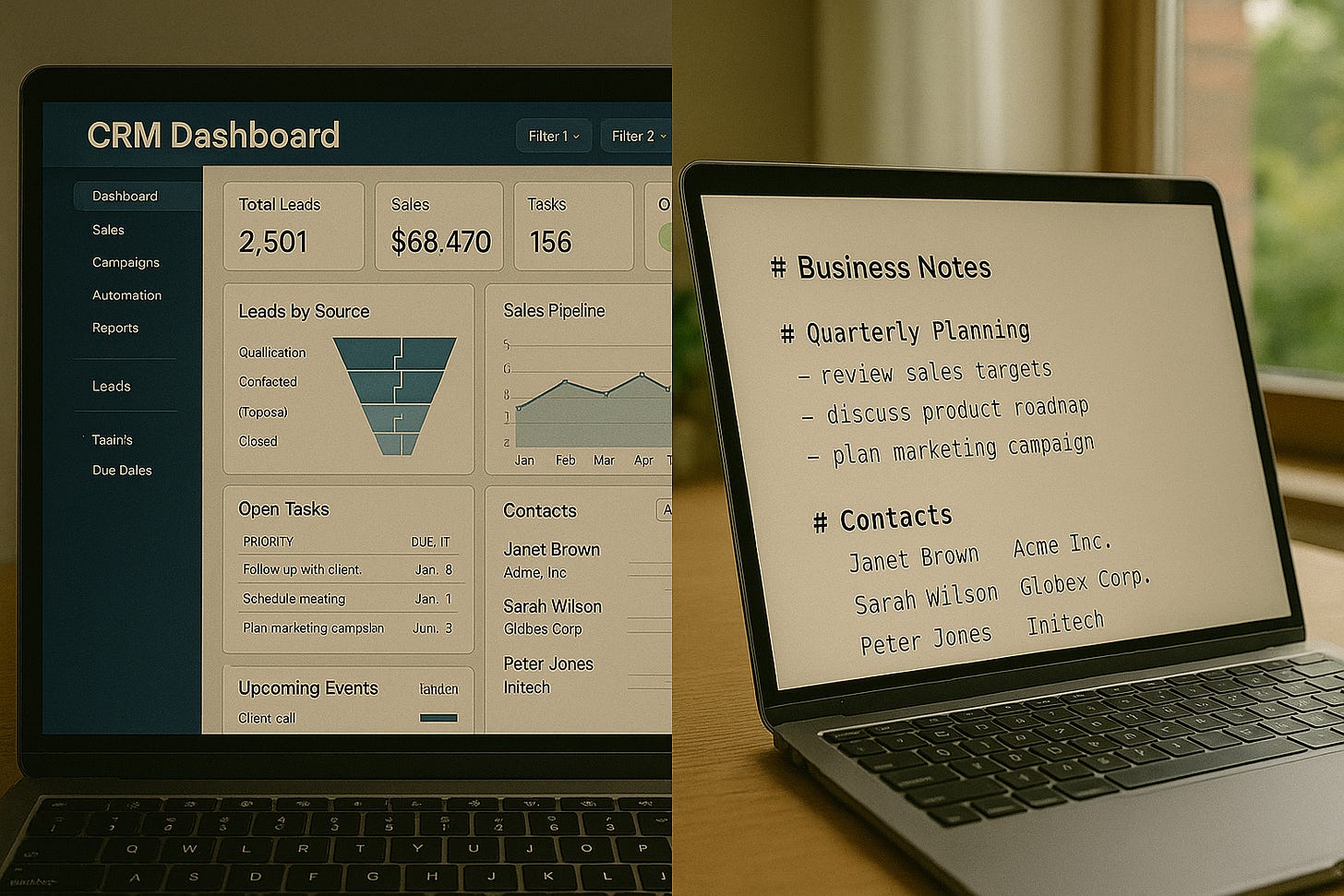

Two surprises surfaced immediately. Educational technology wasn't a niche application—it was driving the most sophisticated human-AI interaction models. And multiple people had independently turned to AI to solve one of business software's most persistent problems: building CRM systems that don't suck.

Everything Is Prompt-First Now

The biggest consensus point hit like a freight train: traditional software architecture is backwards.

One seasoned consultant with 45 years of coding experience put it bluntly:

"I realized that generative AI was going to make it cheap and disposable to write software, but I now believe that actually it's for a large class of applications to eliminate software."

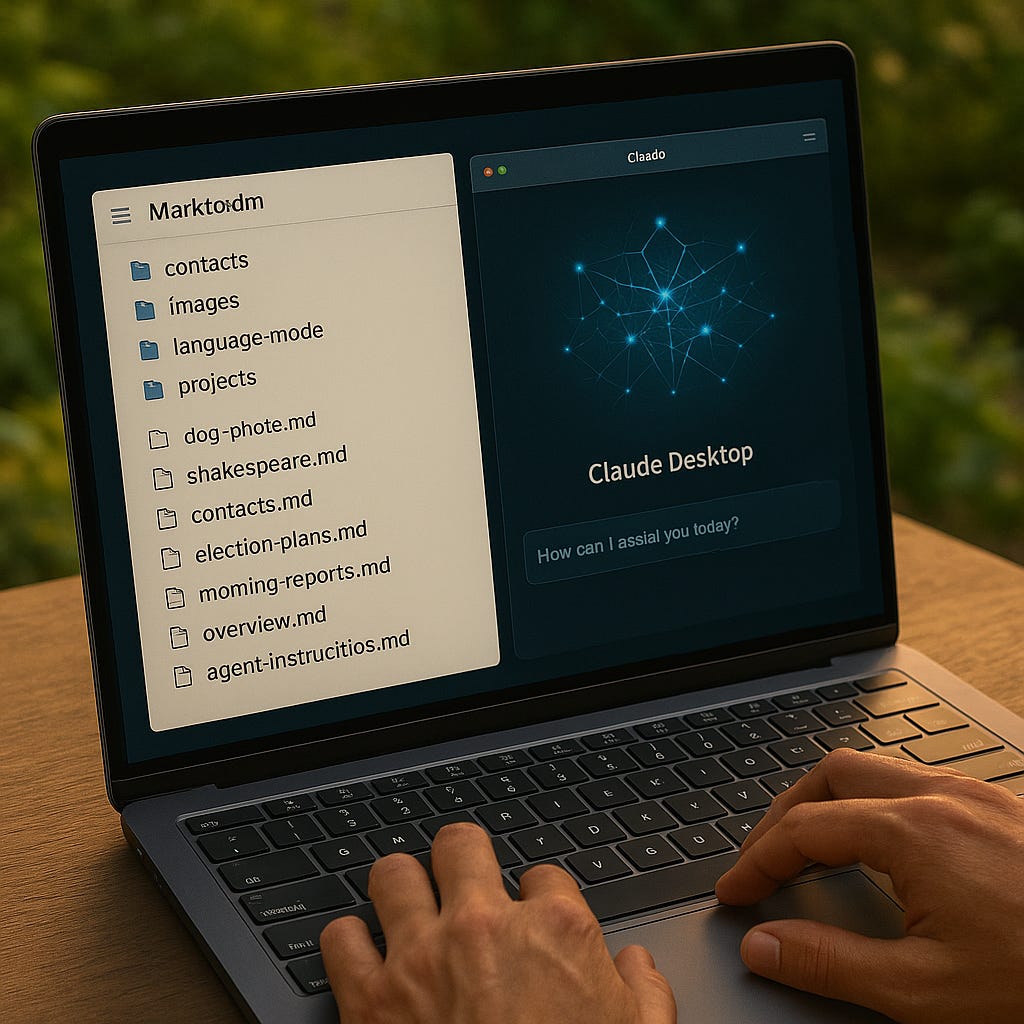

His demo proved the point. A fully functional CRM system—no database, no traditional code, just markdown files and Claude Desktop integration. Morning reports, business intelligence views, contact management. All of it running on natural language instructions.

An education platform founder echoed this from a different angle:

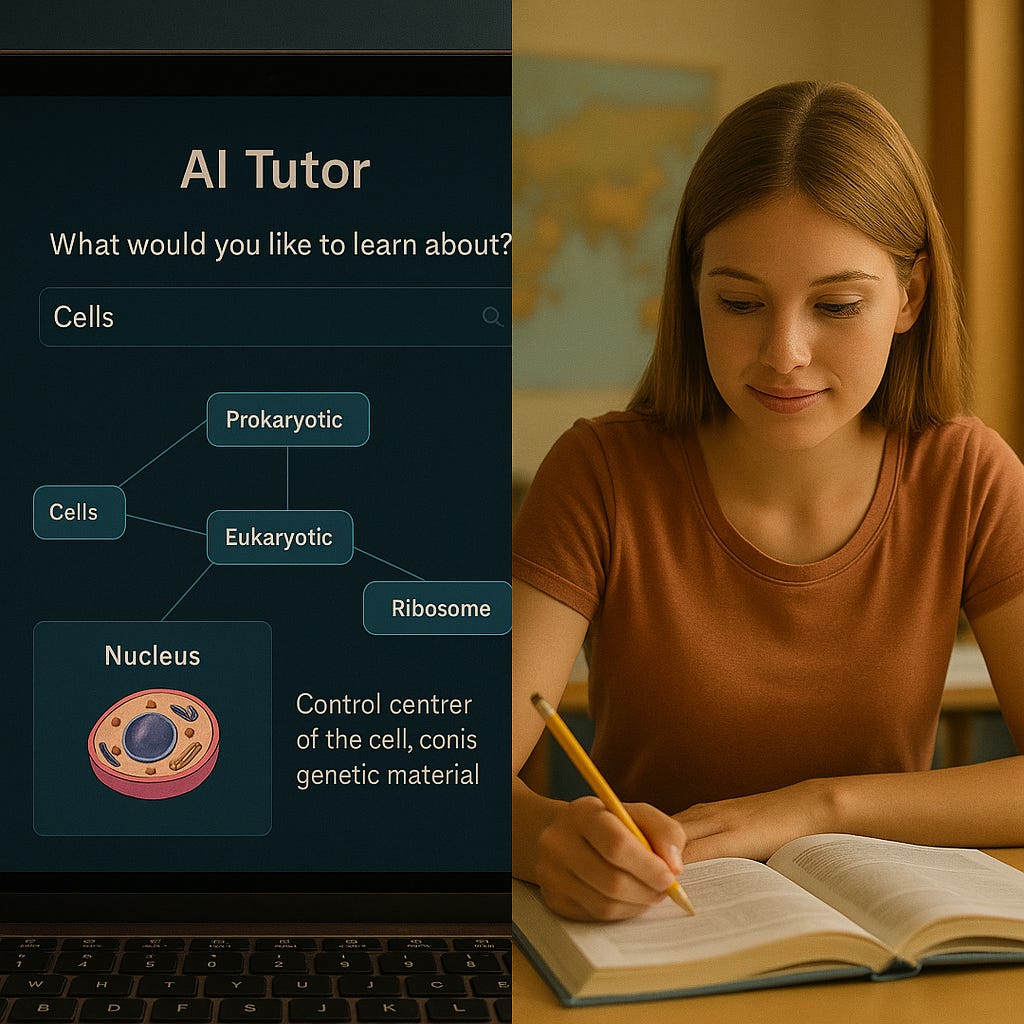

"Chatbots are dead because they sit in a box and they have a text channel in and out and it limits a lot of what they can do."

His AI tutoring platform had moved beyond the chat box entirely: screen-sharing interactions where the AI could see and respond to any content in real time. All of it was orchestrated through sophisticated prompting strategies, not rigid programming logic.

The pattern was unmistakable. Start with the conversation. Build the scaffolding around that. Code becomes the last resort, not the first instinct.

Prompt Scaffolding Is Everything

Second consensus: AI tools work best when wrapped in thoughtful prompt scaffolding frameworks.

A former VP of Engineering showed his service maturity evaluation rubrics. Six levels from Experimental to Market Dominant. Not documentation—operational scaffolding that guided every implementation decision.

The education platform founder described similar systematic approaches:

"Underneath this, you know, if you want to call it like this sort of the scaffold is representations of skills and knowledge. I make a distinction between those two. When I say skills, I mean like cognitive programs... Skills are programs, knowledge is data."

This wasn't overhead. It was enablement. The most successful implementations had moved beyond ad-hoc AI usage to structured methodologies that could be repeated and scaled.

Every demo included systematic approaches for context management, prompt engineering, output validation. The prompt scaffolding was the application.
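To make "the scaffolding was the application" concrete, here is a minimal sketch of the three pieces named above: context management, prompt construction, and output validation. Everything in it is an assumption for illustration. The `Scaffold` class, the template wording, and the `fake_model` stand-in are hypothetical and do not come from any of the demos.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Hypothetical template; the wording is illustrative, not taken from any demo.
TEMPLATE = (
    "You are assisting with: {task}\n"
    "Relevant context:\n{context}\n"
    "Reply with a JSON object containing the keys: {keys}."
)


@dataclass
class Scaffold:
    task: str
    required_keys: tuple[str, ...]
    max_context_items: int = 3  # crude context management: keep only the newest items

    def build_prompt(self, context_items: list[str]) -> str:
        recent = context_items[-self.max_context_items:]
        context = "\n".join(f"- {item}" for item in recent)
        return TEMPLATE.format(
            task=self.task, context=context, keys=", ".join(self.required_keys)
        )

    def validate(self, raw_output: str) -> dict:
        """Parse the model output and check that every required key is present."""
        data = json.loads(raw_output)  # malformed JSON raises a ValueError subclass
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        missing = [key for key in self.required_keys if key not in data]
        if missing:
            raise ValueError(f"missing keys: {missing}")
        return data

    def run(self, model: Callable[[str], str], context_items: list[str]) -> dict:
        return self.validate(model(self.build_prompt(context_items)))


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return json.dumps({"summary": "two follow-ups due", "next_action": "email Dana"})


scaffold = Scaffold(task="morning report", required_keys=("summary", "next_action"))
print(scaffold.run(fake_model, ["Called Dana on Monday", "Proposal sent Tuesday"]))
```

In a sketch like this, the model is a swappable callable. The template, the context window rule, and the validator carry the repeatable part of the work.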

Tools Need Your Help (Obviously)

Third area of agreement: the AI isn't autonomous, and that's fine.

Everyone had learned this lesson the hard way. One participant captured the reality perfectly:

"I'm almost always working between three projects at a time. It's just too... It's too tedious to sit and watch it. And sometimes it's thinking, it's not even the output, so it's all..."

The most sophisticated implementations included human-in-the-loop mechanisms. The CRM system builder described his approach:

"I asked Claude to generate some reports for me, and Claude writes the query logic and I get these incredible views."

Human curates the requests. AI executes the technical implementation. Partnership model, not replacement model.

Engineering Principles Haven't Disappeared

Here's what was most gratifying: what we learned about engineering BEFORE AI is what makes it work now.

The group agreed unanimously on this point. One participant articulated it perfectly:

"Good engineers don't invent unless you are being paid specifically to innovate and invent something which is a really expensive, dangerous, risky operation."

Another added:

"engineering is the science of trade offs and compromises and optimizations."

The discipline hadn't disappeared—it had moved up a level of abstraction. Instead of optimizing algorithms, we're optimizing prompts. Instead of architecting databases, we're architecting conversation flows.

Good engineering practices amplify AI effectiveness. Bad practices amplify AI failures.

The Workforce Problem

Fifth consensus was also the most painful: hiring for this new reality is brutal.

One founder shared his experience with a senior developer who refused to adopt AI tools:

"I had one senior super experienced senior developer. And the biggest issue that I have... I'm not at what I was hoping. I'm 3.5 multiplier. I feel like I'm best case between 0.8 and 1.2. And the reason being that my senior developer does not want to use AI."

The group's advice was unanimous and harsh: in a venture-backed environment, cultural fit around AI adoption is non-negotiable.

"People's attitudes fall into three camps. One are like us early adopters, jump forward. AI first. One are maybe like your guy, active resistors. And there's people in the middle that will probably go to the first camp when they actually understand what's going on."

The solution isn't technical training. It's hiring for hyper-learners who can adapt to fundamentally new ways of working. The 5% who thrive in ambiguity rather than the 95% who need established patterns.

Value Without Code

Final convergence point: you can build serious business applications without traditional programming.

The markdown CRM was the most dramatic example. A design director showed interactive concept exploration through visualization interfaces. The education platform integrated complex pedagogical frameworks through conversational interactions.

As one participant put it:

"We're all ontologists now."

This wasn't about replacing programmers. It was about expanding who could build meaningful software solutions.

The EdTech Revelation

Educational technology emerged as the secret laboratory for AI advancement. Not because education is inherently more important, but because modeling human learning pushes AI systems to their limits.

The AI tutoring platform demonstrated screen-sharing interactions that could see and respond to any content. A classroom analysis system used multimodal AI to process recordings and provide teacher reflection tools. Even the design visualization platform was fundamentally about learning—helping users explore connected concepts dynamically.

As one EdTech founder noted:

"What you've got in a skill tree is metadata. It represents the skill structure or skill relations... this skill is related to this skill, it's a prerequisite for this skill."

The complexity of modeling human learning was driving innovations in AI system design with applications far beyond education.
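The skill-tree idea in that quote, skills linked by prerequisite relations, maps naturally onto a directed graph. Here is a minimal sketch under stated assumptions: the skill names are invented for illustration, and the two helper functions are hypothetical, not part of any platform shown at the gathering.

```python
from graphlib import TopologicalSorter

# Hypothetical skills; each key maps to the set of skills it requires first.
PREREQUISITES = {
    "counting": set(),
    "addition": {"counting"},
    "multiplication": {"addition"},
    "fractions": {"multiplication"},
    "area": {"multiplication"},
}


def learning_order(prereqs):
    """Return one valid order in which every skill follows its prerequisites."""
    return list(TopologicalSorter(prereqs).static_order())


def unlocked(prereqs, mastered):
    """Skills not yet mastered whose prerequisites have all been mastered."""
    return sorted(
        skill
        for skill, needs in prereqs.items()
        if skill not in mastered and needs <= mastered
    )


print(learning_order(PREREQUISITES))
print(unlocked(PREREQUISITES, {"counting", "addition"}))
```

The graph here is pure metadata: it says nothing about how a skill is taught, only which skills must come first. That is what lets a tutor, human or AI, decide what to offer a learner next.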

The CRM Revelation

Multiple practitioners had independently tackled one of business software's most persistent problems: CRM systems that actually work.

The group quickly discovered a shared frustration with traditional CRMs. One participant explained:

"CRMs are terrible because the primary driver for the design of commercially licensed CRMs is that they are systems of record whose primary purpose is to allow CROs and sales managers to hold sales reps accountable and to own their data."

But AI offered a different approach. Instead of forcing human behavior to conform to database schemas, practitioners were building systems that understood natural language descriptions of business relationships and processes. The markdown-based CRM eliminated traditional programming entirely, operating through conversational instructions like "give me the morning report" that triggered complex business intelligence workflows.

This wasn't just about better user interfaces. It represented a fundamental shift from data-first to language-first business software design.
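For readers who want a feel for what "language-first" might look like mechanically, here is a rough sketch. It assumes contact notes live as plain markdown files in a folder and that a conversational instruction is simply combined with those notes before being handed to a model. The file layout, the `load_notes` and `compose_request` helpers, and the sample contact are all hypothetical. The demo itself ran through Claude Desktop, and this sketch does not reproduce how that integration works.

```python
import tempfile
from pathlib import Path


def load_notes(folder: Path) -> dict[str, str]:
    """Read every markdown file in the folder, keyed by file name."""
    return {
        path.name: path.read_text(encoding="utf-8")
        for path in sorted(folder.glob("*.md"))
    }


def compose_request(instruction: str, notes: dict[str, str]) -> str:
    """Combine a plain-language instruction with the raw notes into one prompt."""
    sections = [f"[{name}]\n{text.strip()}" for name, text in notes.items()]
    return f"Instruction: {instruction}\n\nCRM notes:\n\n" + "\n\n".join(sections)


# Usage: build a throwaway folder with one hypothetical contact file.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "acme.md").write_text(
        "# Acme Corp\n- Contact: Dana\n- Next step: send proposal\n",
        encoding="utf-8",
    )
    print(compose_request("give me the morning report", load_notes(folder)))
```

There is no schema here. The notes stay in whatever shape a person wrote them, and the instruction carries the intent.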

What This Means

The convergence among these practitioners suggests we're witnessing something deeper than coincidental thinking. These builders may have discovered fundamental principles about how humans and AI systems work best together—principles that transcend specific technologies or applications.

If these insights represent genuine invariants of human-AI collaboration, then organizations everywhere will need to grapple with the same transformations: shifting from code-first to prompt-first thinking, building systematic scaffolding around AI processes, accepting human guidance as essential rather than optional, applying engineering rigor at new levels of abstraction, prioritizing learning agility in hiring, and recognizing that value creation no longer requires traditional coding skills.

The small gathering was so revealing that it's catalyzed plans for larger exploration. Starting this fall, we'll be hosting salon-style gatherings in New York City, bringing together more practitioners who are actively building with these technologies. The goal isn't networking or pitching—it's deepening our collective understanding of what appears to be a fundamental shift in how software gets built.

For organizations trying to navigate this transformation, the message is clear: the fundamental patterns are stabilizing. The question isn't whether these changes are coming—it's whether you'll learn from early practitioners or discover these lessons the hard way.

The future belongs to those who can think prompt-first, scaffold systematically, guide intelligently, engineer abstractly, hire adaptively, and create value conversationally. The practitioners in that room have already started building it. The larger community of builders exploring these ideas will shape how the rest of us follow.

This article draws from real conversations with AI engineering leaders who shared their experiences and insights anonymously. Their convergent thinking suggests we're witnessing the emergence of fundamental principles for human-AI collaboration. If you're actively building with agentic AI tools and interested in participating in future gatherings, reach out.