Three months into 2025, the AI coding assistant landscape has changed dramatically. We've seen Cursor hit $100M ARR with just 60 employees, Google launch free Gemini Code Assist for individuals, and new research showing 4x more code cloning in AI-assisted development.

Here's what hasn't changed: you still need to watch what these agents are doing.

Building on my previous pieces about Nanny Coding and Capturing Context, let me explain why staying engaged matters more now than ever.

Where Things Go Wrong First

In my experience, most Claude Code errors happen during the reading phase. The agent misinterprets your codebase structure, makes assumptions about dependencies, or overlooks edge cases in your current implementation. These aren't dramatic failures. They're subtle misreadings that compound if you're not paying attention.

What happens when you don't catch it? Small bugs snowball.

What's particularly valuable about Claude Code's approach is its user confirmation checkpoints. When the agent pauses to ask "Should I proceed with this approach?", that's your moment to catch trajectory errors before they propagate through the entire workflow.

The Battle for Memory Control

While Claude Code requires manual documentation of patterns and errors, the competition has moved to automate memory. Cursor's new Memories feature launched in beta this year and stores coding preferences across sessions. Windsurf's Cascade Memories system automatically generates context when it encounters useful information. Even Google's free Gemini Code Assist maintains chat windows that persist across coding sessions.

The economics tell the real story. Cursor hit $100M ARR while operating at negative gross margins. They're spending more on compute than they're making in revenue. That memory persistence comes at a cost—literally.

Claude Code's approach isn't a limitation. It's a feature designed for sustainable operations.

Where Memory Still Fails

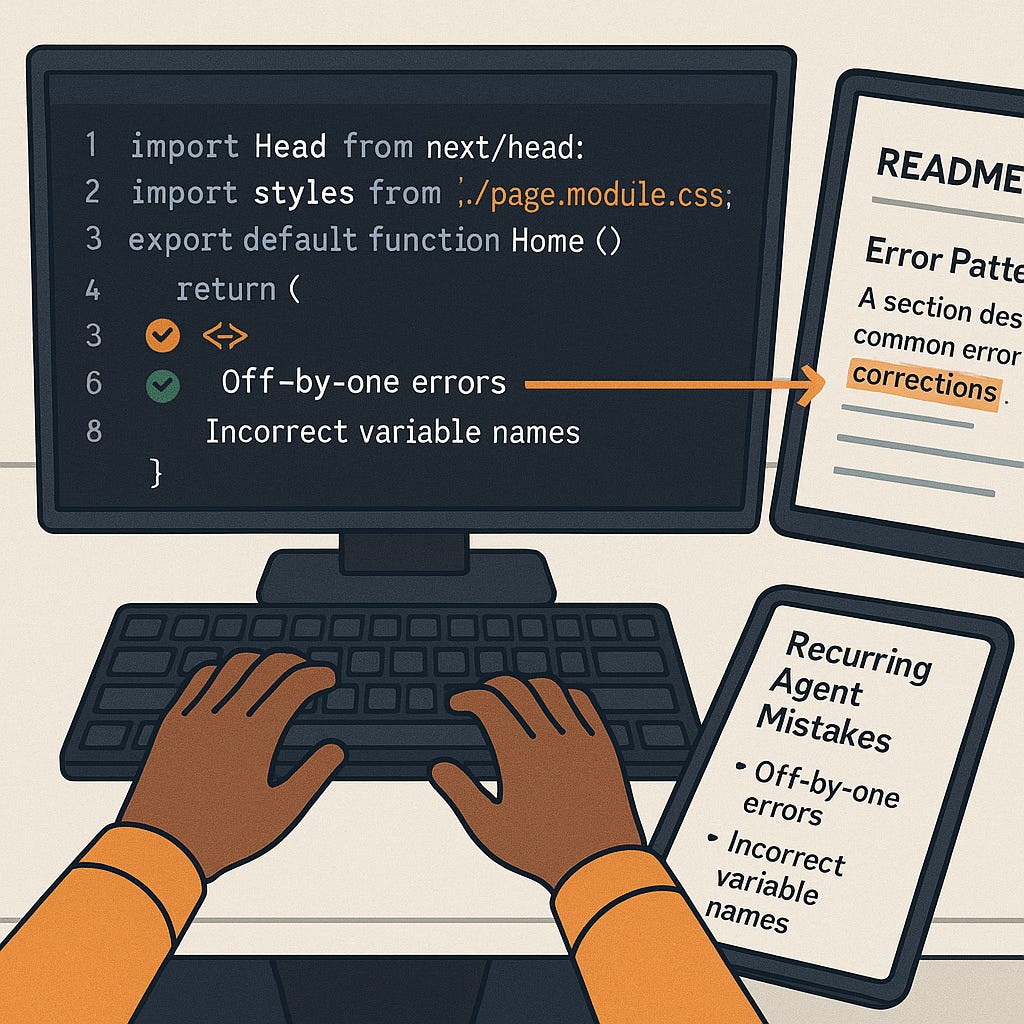

Most AI agents recognize when they make mistakes but won't automatically remember those errors for future sessions. You'll see Claude Code acknowledge the problem, adjust course, and deliver a working solution. Start a new workstream tomorrow? Same error again.

Recent research from GitClear shows 4x more code cloning in AI-assisted development. When agents don't remember their mistakes, they default to patterns they've seen before—often generating repetitive, low-quality code instead of learning from corrections.

This is where active nanny coding becomes strategic. As with Capturing Context, but focused specifically on coding workflows, the idea is to maintain a running record of:

Common misinterpretations the agent makes about your codebase

Patterns it tends to overlook (specific linting rules, architectural constraints, deprecated approaches)

Successful corrections that should become standard practice
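One lightweight way to keep such a record is a small structured log that can be tallied for repeat offenders. The sketch below is illustrative only: the `Correction` class, its fields, and the category labels are assumptions made for this example, not part of Claude Code or any other tool.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical category labels mirroring the three kinds of notes listed above.
CATEGORIES = ("misinterpretation", "overlooked_pattern", "successful_correction")


@dataclass(frozen=True)
class Correction:
    """One observation about the agent's behavior (illustrative structure)."""
    category: str
    note: str

    def __post_init__(self):
        # Reject typos in the category so the tally stays meaningful.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def recurring(log, min_count=2):
    """Return (key, count) pairs for notes seen at least `min_count` times."""
    counts = Counter((c.category, c.note) for c in log)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


log = [
    Correction("misinterpretation", "Misreads custom error handling in /lib/errors.js"),
    Correction("overlooked_pattern", "Uses class components instead of hooks"),
    Correction("misinterpretation", "Misreads custom error handling in /lib/errors.js"),
]

for (category, note), count in recurring(log):
    print(f"{count}x [{category}] {note}")
```

Anything that shows up more than once is a good candidate for the project's instructions file, since the agent is unlikely to remember it on its own.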

The TODO Queue Strategy

Claude Code offers a pattern that's become more valuable as other tools get expensive. When you notice an error or want to add guidance mid-stream, append "(add to TODO)" to your instruction.

Example: "Fix the date parsing logic to handle UTC timezone properly (add to TODO)"

Instead of immediately re-tasking the agent and potentially leaving current work incomplete, this queues your guidance for later implementation. The agent continues with its current task while noting your addition for the task list.

This prevents context switching that might cause the agent to forget partially completed work—a real risk when working with complex, multi-step implementations. More importantly, it keeps oversight costs predictable while industry leaders struggle with compute expenses.
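To make the queuing idea concrete, here is a toy model of the behavior described above. It is a sketch of the concept, not a description of how Claude Code handles the marker internally; `route_instruction` and `TODO_MARKER` are hypothetical names used only for illustration.

```python
from collections import deque

TODO_MARKER = "(add to TODO)"  # the suffix described in the text


def route_instruction(text, todo_queue):
    """Queue marked instructions; return unmarked ones for immediate handling.

    Returns the instruction to act on now, or None if it was deferred.
    """
    stripped = text.strip()
    if stripped.endswith(TODO_MARKER):
        # Defer: store the instruction without the marker and keep working.
        todo_queue.append(stripped[: -len(TODO_MARKER)].rstrip())
        return None
    return stripped


todo = deque()
now = route_instruction(
    "Fix the date parsing logic to handle UTC timezone properly (add to TODO)", todo
)
print(now)         # None: nothing interrupts the current task
print(list(todo))  # the deferred instruction, waiting for later
```

The point of the model is the branch: a marked instruction never displaces the task in progress, so partially completed work is not abandoned.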

Practical Implementation

The most effective nanny coding I've observed follows this pattern:

During active development:

Review each confirmation checkpoint carefully

Note patterns in misreadings or assumptions

Use TODO queuing for non-urgent corrections

Between sessions:

Update the project README or instructions file with recurring error patterns

Document successful correction strategies

Add specific architectural constraints the agent tends to miss

Example correction documentation:

## Common AI Assistant Patterns to Avoid

- Tendency to use older React patterns (class components vs hooks)

- Misreads our custom error handling in /lib/errors.js

- Often suggests npm packages we've specifically avoided for security reasons
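A notes file like the one above can be maintained by hand, but a few lines of scripting keep it free of duplicates. This is a minimal sketch under stated assumptions: the helper names are hypothetical, the file path is whatever your project uses, and the file is assumed to contain only this one section.

```python
from pathlib import Path

HEADING = "## Common AI Assistant Patterns to Avoid"


def render_section(patterns):
    """Render the patterns as a markdown bullet list under HEADING."""
    bullets = "\n".join(f"- {p}" for p in patterns)
    return f"{HEADING}\n\n{bullets}\n"


def add_pattern(path, pattern):
    """Add `pattern` to the notes file at `path`, skipping exact duplicates.

    Simplifying assumption: the file holds only this section, so every
    line starting with "- " is treated as an existing pattern.
    """
    path = Path(path)
    existing = []
    if path.exists():
        existing = [
            line[2:]
            for line in path.read_text(encoding="utf-8").splitlines()
            if line.startswith("- ")
        ]
    if pattern not in existing:
        existing.append(pattern)
    path.write_text(render_section(existing), encoding="utf-8")
    return existing
```

Calling `add_pattern("notes.md", "Misreads our custom error handling in /lib/errors.js")` twice leaves a single bullet in the file, which keeps the notes short enough for the agent to read in full at the start of a session.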

This isn't about distrusting the agent. It's about creating a feedback loop that makes future interactions more effective.

The Compound Benefits

What starts as error correction becomes process improvement. Each mistake you catch and document makes subsequent coding sessions more productive. The agent spends less time backtracking and more time building features correctly from the start.

More importantly, this active engagement keeps you connected to what the code is actually doing. The goal isn't to eliminate human oversight—it's to make that oversight more strategic and less reactive.

Think of it this way: nanny coding isn't about babysitting the AI. It's about creating a collaborative debugging process where human pattern recognition guides AI execution efficiency.

Bottom Line

Those confirmation checkpoints aren't speed bumps. They're strategic advantages. While Cursor spends more on compute than it earns and competitors race to automate memory, Claude Code's manual approach keeps costs predictable and control explicit.

The GitClear findings on code cloning point the same way. Left without human oversight, AI agents tend to default to repetitive patterns that reduce long-term code quality.

Nanny coding works because it is a feedback loop, not babysitting: human pattern recognition guides AI execution. While the industry burns cash figuring out sustainable economics, that hands-on approach remains the most reliable path to quality code.