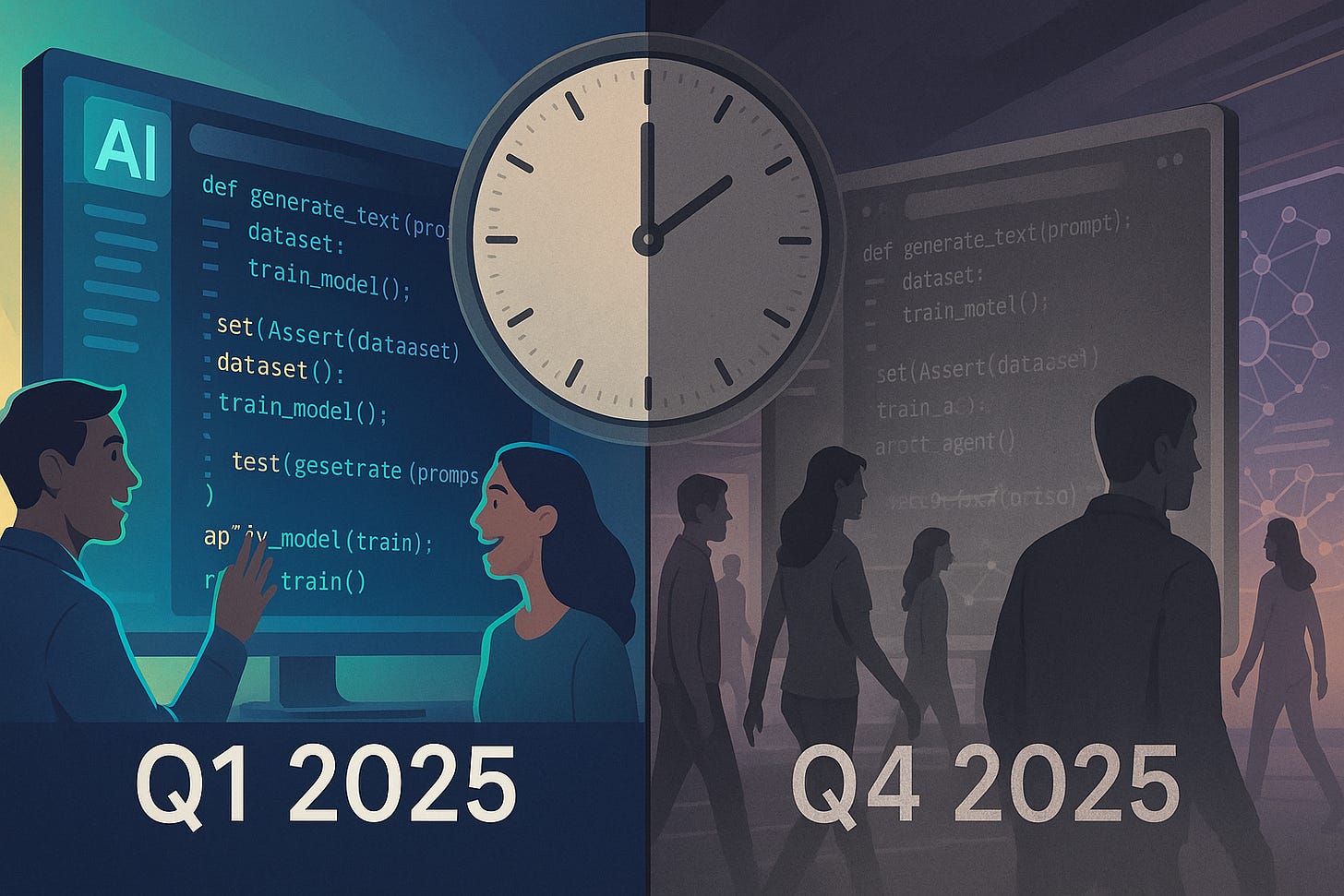

OpenAI’s Codex-Max Solves Q1 2025’s Problem in Q4 2025

It is definitely an improvement, but not enough to make anyone want to switch from its better competitors.

I spent the day putting GPT-5.1-Codex-Max through real-world testing on actual production features. Not demos, not toy projects - features for my AI Power Rankings site, tracked through proper tickets, evaluated by my multi-agent orchestration system.

The results? Claude MPM gave it a B-. Not my assessment - an AI orchestration framework evaluating another AI tool based on delivered work quality.

That grade tells you everything about where Codex-Max stands: technically competent, meaningfully improved over the original Codex, and fundamentally too late to matter competitively.

TL;DR

Tested Codex-Max on two production features for AI Power Rankings, tracked via mcp-ticketer with full receipts

Claude MPM evaluation: B- grade on delivered work quality

Significant improvements over original Codex - better UX, stronger coding capabilities

Critical gap: No local operations, no deployment automation, no QA integration

Augment Code’s single agent handles full lifecycle including deployment/QA that Codex-Max can’t touch

Bottom line: Solving Q1 2025’s problem in Q4 2025 when the market has moved to complete workflow orchestration

Better than vibe coding, but that’s no longer the competitive bar

The testing setup: real features, real tracking

I don’t do toy demos. When I evaluate tools, I use them on actual production work and document everything. For Codex-Max, I assigned two features on AI Power Rankings:

Feature enhancement for tool comparison views

Data integration improvements for ranking algorithms

The methodology was straightforward: Codex-Max handled the full implementation, creating and managing its own tickets through mcp-ticketer - its MCP integration works fine. When it was done (or done-ish), I brought in Claude MPM to assess the results and fix issues, also using proper ticket tracking and updates. This isn’t me giving vibes-based opinions - it’s one AI system reviewing another’s delivered work based on measurable outcomes.

The receipts are public: GitHub Issue #52 shows exactly what was attempted, what succeeded, what failed, and how long everything took. You can see the actual code Codex-Max produced in the implementation branch.

What Claude MPM found

The B- grade breaks down into specific strengths and weaknesses:

What Codex-Max got right:

Clean, readable code generation

Reasonable architectural decisions for straightforward features

Significantly better UX than the original Codex

Multi-file editing that actually worked most of the time

GitHub integration that properly understood PR context

Where it fell short:

No ability to handle local deployment workflows

Zero QA automation capabilities

Cloud-only execution created friction for iterative testing

Context preservation degraded on longer tasks despite compaction claims

Required more hand-holding than expected for edge cases

The specific example that crystallized the problem: When the feature needed deployment validation and QA checks, Codex-Max just... stopped. It generated the code, opened the PR, and that was it. My agents handle local ops deployment and QA automatically - Codex couldn’t touch any of that workflow.

The timing problem: Q1 in Q4

Here’s what makes this interesting: If I’d had Codex-Max five months ago, in Q2 2025, I would have been thrilled. The improvements over the original Codex are substantial. The UX polish is real. The coding quality bump matters.

But we’re not in Q2 2025. We’re in Q4 2025, and the market evolved faster than OpenAI shipped.

What would have been competitive in January 2025:

Better code generation than GitHub Copilot

Multi-file editing with reasonable accuracy

Cloud execution for async workflows

GitHub integration for PR reviews

What the market actually looks like in November 2025:

Complete workflow orchestration from specification to deployment

Multi-agent systems handling specialized tasks in parallel

Local operations integration as table stakes

Full QA automation and testing frameworks

Context preservation across days-long development sessions

Codex-Max improved a single-agent cloud execution model. The market moved to orchestrated multi-agent systems with full lifecycle coverage.

In AI development tools, nine months isn’t a product cycle. It’s a generation.

What modern tools handle

My daily driver is Claude MPM on top of Claude Code - that’s what handles my orchestration needs. But here’s what makes Codex-Max’s limitations so stark: Even Augment Code’s single agent handles everything Codex-Max can’t.

To be fair to Codex-Max: I didn’t spend as long building scaffolding for it as I would for Claude Code. I gave it an AGENT.md file (converted from my CLAUDE.md), but I didn’t invest the same level of context setup. That said, the comparison to Augment Code remains instructive - Augment doesn’t need extensive scaffolding because it builds a thorough semantic index of your entire codebase automatically. That’s where modern tools actually are.

Deployment automation: Augment Code manages local deployment, runs validation checks, and handles rollback if needed. Codex-Max stops at code generation.

QA integration: Augment Code triggers test suites, analyzes failures, and iterates on fixes. Codex-Max requires manual testing.

Context acquisition: Augment Code builds semantic indexes automatically, understanding codebases without manual scaffolding. Codex-Max relies on explicit configuration and context seeding.

Local operations: Augment Code executes locally with full filesystem and process access. Codex-Max lives in cloud sandboxes with limited local integration.

Modern tools handle the complete development lifecycle with minimal setup overhead. Augment Code does it with a single agent and automatic context acquisition. I do it with Claude MPM’s multi-agent orchestration and explicit context management. Both approaches work - they just represent where the market actually is.

The fact that a single modern agent handles what Codex-Max can’t shows this isn’t a fundamental AI limitation. It’s an architectural choice OpenAI made that the market already moved past.

What Codex-Max delivers

Credit where it’s due: OpenAI shipped meaningful improvements over the original Codex.

The UX polish is real. The terminal interface works smoothly. The GitHub integration feels native rather than bolted-on. The multi-file editing produces sensible diffs. The cloud execution model eliminates local environment concerns for teams that want that abstraction.

The coding quality improved. Comparing Codex-Max output to original Codex shows clear advancement. The code is cleaner, the architectural decisions are more sound, and the edge case handling is better. That 77.9% SWE-bench Verified score isn’t marketing fiction.

The compaction technology works. Tasks do run longer than the original Codex’s hard limits. The 24+ hour sessions OpenAI advertises are real, though in practice I found context degradation kicked in well before that for complex features.

For teams that don’t have orchestration frameworks, don’t need deployment automation, and primarily want better code generation with nice GitHub integration, Codex-Max represents a solid upgrade. It’s a good tool.

It’s just a good tool for a problem space that evolved past it.

Better than vibe coding - but that’s not the bar anymore

Codex-Max beats casual “vibe coding” - developers prompting ChatGPT or Claude for code snippets and copying them over.

That’s not the competitive bar anymore.

The bar in Q4 2025 is:

Complete workflow automation from specification to deployment

Multi-agent orchestration handling specialized tasks in parallel

Full lifecycle coverage including ops, testing, and monitoring

Context preservation across multi-day development sessions

Local and cloud integration based on actual workflow needs

Codex-Max cleared the “better than copy-paste from ChatGPT” bar. It didn’t clear the “competitive with actual orchestration frameworks” bar.

For hobbyists and individual developers doing straightforward features, Codex-Max might be an improvement over their current workflow. For teams doing serious development work with orchestration systems, it’s a step backward from where they already are.

The architectural problem: single-agent vs. orchestration

Codex-Max improved a single-agent model. The market moved to orchestrated multi-agent systems.

Single-agent limitations:

One context window (even with compaction)

One reasoning path

One set of tool integrations

Sequential execution only

Multi-agent advantages:

Specialized agents for different tasks (research, coding, testing, deployment)

Parallel execution across workstreams

Persistent shared memory across agents

Coordinated hand-offs at natural boundaries
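The difference between the two lists above can be sketched in a few lines of Python. This is an illustration only: `run_agent`, the task names, and the `shared_memory` dict are hypothetical stand-ins, not the actual interfaces of Claude MPM or Codex-Max, and `asyncio.sleep` stands in for real model calls. It also assumes the workstreams are independent, which real ones often are not.

```python
import asyncio
import time

# Hypothetical stand-in for an agent; a real agent would call a model and tools.
async def run_agent(role: str, seconds: float, shared_memory: dict) -> None:
    await asyncio.sleep(seconds)          # simulate the agent working
    shared_memory[role] = f"{role} done"  # publish the result for other agents

# Illustrative workstreams and durations (assumed, not measured).
TASKS = {"research": 0.2, "coding": 0.2, "testing": 0.2}

async def single_agent() -> tuple[dict, float]:
    """One agent works through every task in order."""
    memory: dict = {}
    start = time.perf_counter()
    for role, seconds in TASKS.items():
        await run_agent(role, seconds, memory)
    return memory, time.perf_counter() - start

async def multi_agent() -> tuple[dict, float]:
    """Specialized agents run concurrently and write to shared memory."""
    memory: dict = {}
    start = time.perf_counter()
    await asyncio.gather(*(run_agent(r, s, memory) for r, s in TASKS.items()))
    return memory, time.perf_counter() - start

if __name__ == "__main__":
    seq_memory, seq_time = asyncio.run(single_agent())
    par_memory, par_time = asyncio.run(multi_agent())
    print(f"single agent: {seq_time:.2f}s, multi-agent: {par_time:.2f}s")
```

Both runs end with the same results in memory; the concurrent version simply finishes in roughly the time of the slowest workstream instead of the sum of all of them.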

I can spin up Claude MPM with specialized agents for:

Specification agent: Analyzes requirements and creates detailed specs

Architecture agent: Designs system structure and integration points

Implementation agent: Writes code following architectural guidelines

Testing agent: Creates test suites and validates functionality

Deployment agent: Handles local ops, QA checks, and rollout

Review agent: Evaluates overall work quality and assigns grades

That’s not aspirational. That’s what evaluated Codex-Max and gave it a B-.
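For readers who have not worked with this kind of setup, here is a minimal sketch of the hand-off pattern the list describes. It is not Claude MPM's implementation: `WorkItem`, `make_agent`, `run_pipeline`, and the placeholder outputs are all hypothetical names invented for this example. Only the agent roles and their order come from the list above.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class WorkItem:
    """State handed from one agent to the next."""
    ticket: str
    artifacts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

Agent = Callable[[WorkItem], WorkItem]

def make_agent(role: str, output_key: str) -> Agent:
    """Build a placeholder agent that records its output on the work item."""
    def agent(item: WorkItem) -> WorkItem:
        item.artifacts[output_key] = f"<{output_key} produced by {role} agent>"
        item.log.append(role)
        return item
    return agent

# Roles and order follow the list above; the outputs are placeholders.
PIPELINE = [
    make_agent("specification", "spec"),
    make_agent("architecture", "design"),
    make_agent("implementation", "code"),
    make_agent("testing", "test_report"),
    make_agent("deployment", "deploy_report"),
    make_agent("review", "grade"),
]

def run_pipeline(ticket: str, stop_after: Optional[str] = None) -> WorkItem:
    """Pass the work item through each agent, optionally stopping early."""
    item = WorkItem(ticket=ticket)
    for agent in PIPELINE:
        item = agent(item)
        if item.log[-1] == stop_after:
            break
    return item

if __name__ == "__main__":
    full = run_pipeline("example-ticket")
    partial = run_pipeline("example-ticket", stop_after="implementation")
    print("full lifecycle:", full.log)
    print("stops at code: ", partial.log)
```

Calling `run_pipeline` with `stop_after="implementation"` models a tool that hands back code and nothing else: the work item never gets a test report, a deploy report, or a grade.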

OpenAI optimized the wrong abstraction. They built a better single agent when the market needed orchestration infrastructure.

Market context: the window closed

The timing becomes even more painful when you look at the competitive landscape:

Claude Code Max ($200/month): 7-hour autonomous sessions, local-first execution, full MCP support for tool integration, sub-agent coordination

Augment Code ($50-100/month): Complete lifecycle automation, deployment integration, single-agent simplicity with full capability

Cursor ($20-200/month): Model-agnostic deep indexing, local execution, proven developer adoption

GitHub Copilot ($10-39/month): Ubiquitous IDE integration, massive installed base, continuous improvement

Codex-Max at $200/month (Pro tier) matches Claude Code Max’s price but not its capabilities. Its coding ability is on par with tools costing a quarter of the price, yet it lacks features those tools include.
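To make the price comparison concrete, the ranges quoted above can be compared directly. The figures below are the monthly prices listed in this article, not live pricing, and the `PRICES` table and `price_ratio` helper are made up for this sketch.

```python
# Monthly price ranges in USD as quoted in this article: (low tier, high tier).
PRICES = {
    "Claude Code Max": (200, 200),
    "Augment Code": (50, 100),
    "Cursor": (20, 200),
    "GitHub Copilot": (10, 39),
}
CODEX_MAX_PRO = 200  # Pro tier price quoted in this article

def price_ratio(tool: str) -> tuple[float, float]:
    """Return how many times more Codex-Max Pro costs than a tool's high and low tier."""
    low, high = PRICES[tool]
    return CODEX_MAX_PRO / high, CODEX_MAX_PRO / low

if __name__ == "__main__":
    for tool in PRICES:
        smallest, largest = price_ratio(tool)
        print(f"{tool}: Codex-Max Pro costs {smallest:.1f}x to {largest:.1f}x as much")
```

Augment Code's entry tier is the one that works out to a quarter of the price (200 / 50 = 4), while Claude Code Max is the only tool on the list at parity.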

The positioning is stuck between market segments. Too expensive to be the “good enough” option. Not capable enough to be the “complete solution” option.

What this means for OpenAI

This isn’t just a product timing miss. It reveals a strategic challenge:

OpenAI treats AI coding tools as improved single agents. The market treats them as orchestration infrastructure for multi-agent workflows.

That’s a fundamental difference in how you approach product development:

Single-agent optimization focuses on:

Better code generation quality

Longer context windows

Faster execution

Smoother UX

Orchestration infrastructure focuses on:

Agent coordination protocols

Shared memory systems

Tool integration frameworks

Workflow automation patterns

OpenAI delivered the former. The market needs the latter.

The compaction technology is clever. The UX improvements are real. The coding quality gains matter. But they’re all optimizations of an architecture that became obsolete while OpenAI was building it.

The larger pattern

This isn’t unique to Codex-Max. I’m seeing the same pattern across the AI tools market:

Companies that win: Ship fast, iterate based on actual usage, adapt to market evolution

Companies that struggle: Build technically impressive solutions to problems that moved on

OpenAI’s challenges:

Long development cycles

Feature announcements without product availability

Building for what the market was, not what it’s becoming

The market’s evolution:

Quarterly shifts in competitive dynamics

Rapid adoption of new paradigms

Ruthless abandonment of tools that don’t keep pace

In fast-moving technology markets, timing isn’t just important - it’s definitional. A technically superior solution shipped nine months late loses to an adequate solution shipped on time.

Bottom line

OpenAI built a good product that arrived after the market moved on. Codex-Max would have been competitive in Q1 2025. It’s not competitive in Q4 2025.

The B- grade from Claude MPM captures this perfectly: Technically competent, meaningfully improved, fundamentally inadequate for current market needs.

For developers evaluating tools:

Skip Codex-Max if: You need deployment automation, local operations integration, QA workflows, or multi-agent orchestration. Use Claude Code Max or Augment Code instead.

Consider Codex-Max if: You want better code generation than Copilot, like cloud execution, primarily work with GitHub, and don’t need full lifecycle automation. At $20/month (Plus tier) it might make sense.

In AI development tools, market evolution outpaces product development cycles. Building the best version of yesterday’s architecture loses to shipping adequate versions of tomorrow’s architecture.

OpenAI solved Q1 2025’s problem in Q4 2025. That’s not fast enough.

I’m Bob Matsuoka, building multi-agent orchestration systems and writing about practical AI development at HyperDev. For more on AI coding tools in practice, read my analysis of Claude Code’s multi-agent capabilities or my comparison of orchestration frameworks in production.

Excellent analysis! You really put Codex-Max through its paces. It's wild how quickly the goalposts shift in AI; solving Q1 2025's problems in Q4 is just too slow. That lack of local ops and full deployment integration is such a miss. Totally get the B- grade.

Your point about single-agent vs orchestration hits hard. I've been seeing the same pattern in my work, where tools that just generate code well are losing to tools that handle the whole workflow. The deployment gap you mentioned is huge; teams I know basically ignore tools that stop at PR creation now. Curious if you think OpenAI can catch up or if they're too locked into that single-agent model?