Introducing AI Power Rankings: The Data-Driven View of Agentic Coding Tools

Building the benchmark system the industry actually needs

I promised this for Monday. It's running a couple of days late, but for good reason.

After three months of tracking agentic coding tools through monthly rankings, one thing became clear: we needed better data. Not just adoption metrics or funding announcements, but actual performance benchmarks that matter to developers making tool decisions.

So I built AI Power Rankings. Two days of focused development. Worth the delay.

Why Another Ranking Site?

The market moved faster than anyone anticipated. When I started the monthly HyperDev rankings in March, we had maybe a dozen serious agentic coding tools. Today we're tracking 30+, with new ones launching weekly. The problem isn't finding tools; it's evaluating them systematically.

Most developer tool reviews focus on subjective experience. "This feels better" or "the UX is smoother." That matters, but it's not enough when you're choosing infrastructure for your team. You need quantifiable performance data.

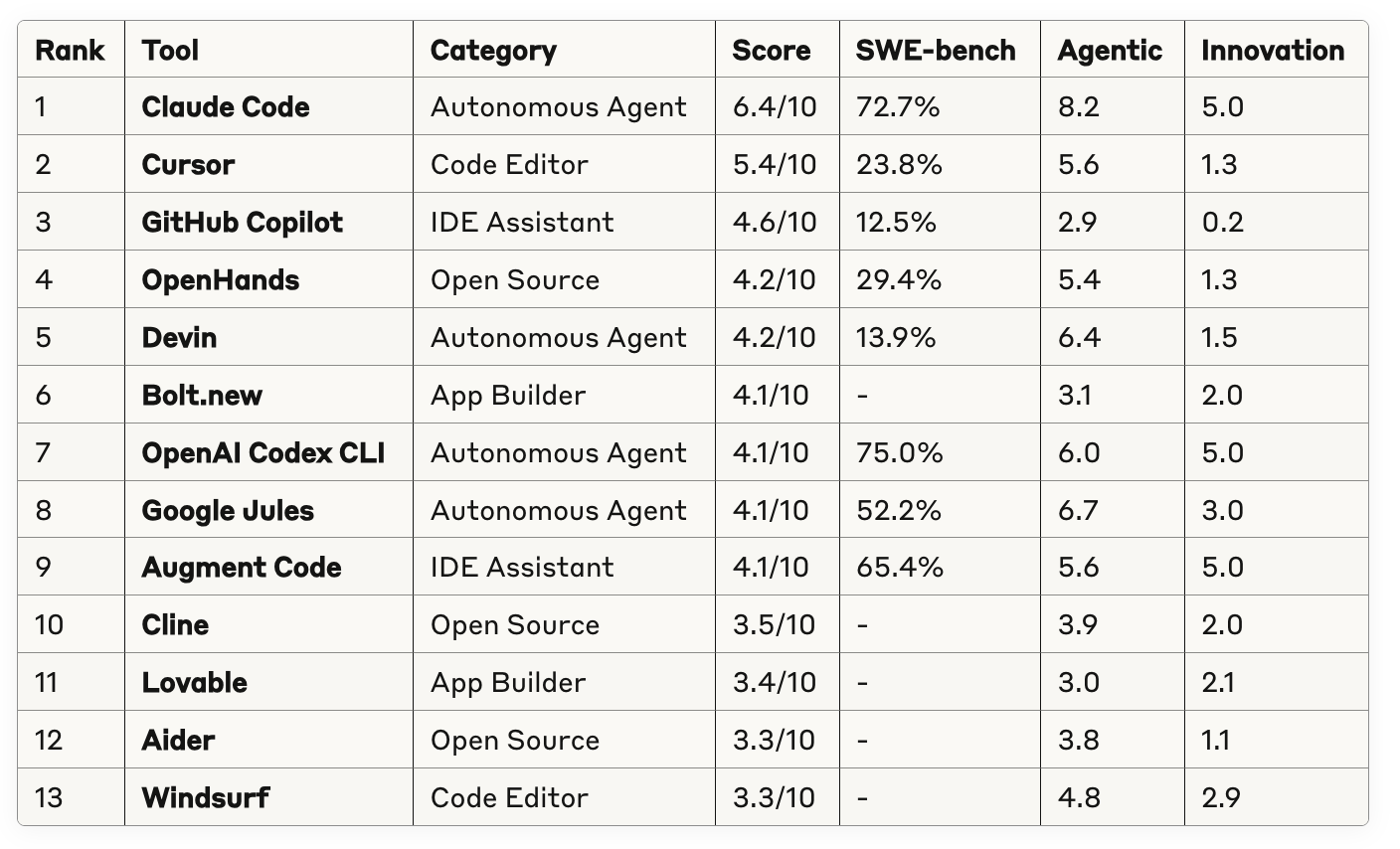

AI Power Rankings combines three critical metrics:

SWE-bench Performance - How well does the tool solve real software engineering tasks? Claude Code's 72.7% score vs Cursor's 23.8% tells a story that user sentiment doesn't capture.

Agentic Capability - Can it work autonomously, or does it need constant hand-holding? The shift from assistive to autonomous is the defining trend in coding AI.

Innovation Factor - Is this pushing the field forward, or just packaging existing capabilities differently?

The composite score gives you a single number to compare tools, but the breakdown shows where each tool excels.
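The post doesn't spell out how the three metrics are combined. As a minimal sketch, assuming each metric is min-max normalized to [0, 1] and then combined as a weighted sum rescaled to 10, a composite with a per-metric breakdown could look like this. The weights, the 0 to 10 agentic scale, and the 0 to 2 innovation scale are all hypothetical, not the site's actual methodology.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; not the site's published methodology.
WEIGHTS = {"swe_bench": 0.4, "agentic": 0.4, "innovation": 0.2}


@dataclass
class ToolMetrics:
    name: str
    swe_bench_pct: float  # SWE-bench resolved rate, 0 to 100
    agentic: float        # agentic capability rating, assumed 0 to 10
    innovation: float     # innovation factor, assumed 0 to 2 (hypothetical scale)


def normalize(value: float, lo: float, hi: float) -> float:
    """Min-max scale value into [0, 1], clamping out-of-range inputs."""
    return max(0.0, min(1.0, (value - lo) / (hi - lo)))


def breakdown(t: ToolMetrics) -> dict[str, float]:
    """Per-metric normalized scores, so readers can see where a tool excels."""
    return {
        "swe_bench": normalize(t.swe_bench_pct, 0, 100),
        "agentic": normalize(t.agentic, 0, 10),
        "innovation": normalize(t.innovation, 0, 2),
    }


def composite(t: ToolMetrics, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of the normalized metrics, rescaled to a 0-10 score."""
    parts = breakdown(t)
    return 10 * sum(weights[k] * parts[k] for k in weights)


if __name__ == "__main__":
    # Illustrative inputs, not real measurements.
    tool = ToolMetrics("Example Tool", swe_bench_pct=50.0, agentic=5.0, innovation=1.0)
    print(breakdown(tool), round(composite(tool), 2))
```

Keeping `breakdown` separate from `composite` is what lets a single headline number coexist with the per-metric view: the same normalized values feed both.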

Current Rankings (June 2025)

Full rankings for 30+ tools at aipowerranking.com

What I'm Learning from the Data

Claude Code dominates technical benchmarks with a 72.7% SWE-bench score and an 8.2 agentic rating. That #1 ranking reflects pure capability, but it isn't capturing market adoption the way Cursor is.

The benchmark vs. adoption gap is real. Cursor's $500M ARR and 360,000+ paying developers earned it #2, but the 23.8% SWE-bench score suggests there's more to developer tool success than raw performance.

Open-source tools are punching above their weight. OpenHands at #4, with a 29.4% SWE-bench score and a high innovation rating (1.3), shows the community is building serious alternatives.

The Windsurf situation needs updating. The site currently lists it as "acquired by OpenAI for $3 billion in April 2025," but that deal was announced and hasn't closed, and Anthropic cutting Windsurf's Claude access suggests the deal might not happen. This is exactly the kind of real-time data challenge I'm solving for.

Full transparency: this entire site was built in two days. Database architecture, ranking methodology, responsive design, data collection—all of it. I'll write a future article breaking down exactly how I did it, because the speed of building useful tools has fundamentally changed.

One-Man Operation, Work in Progress

This is a one-person project with all the errors and limitations that implies. I'm balancing tool evaluation, data collection, and site development while consulting and writing. Some data points are outdated. Some tools are missing. Some categorizations are debatable.

But that's also the point. Rather than wait for perfection, I'm shipping and iterating based on real usage. The developer tool landscape moves too fast for traditional analysis cycles.

What's working:

Systematic performance tracking across comparable metrics

Regular updates as tools evolve and new ones launch

Focus on technical capability over marketing claims

What needs work:

Real-time data updates (some info lags weeks behind reality)

Broader tool coverage (missing several emerging players)

Better weighting of market adoption vs technical performance
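One way to make the adoption-vs-performance trade-off explicit is a single blending weight. The sketch below assumes the technical score is already on a 0 to 1 scale, log-scales paying-user counts against a hypothetical ceiling of one million (so a 10x jump in users moves the score by a fixed step instead of swamping everything else), and blends the two with a parameter `alpha`. The function names, the ceiling, and the sample tools are illustrative assumptions.

```python
import math


def adoption_score(paying_users: int, ceiling: int = 1_000_000) -> float:
    """Log-scale a user count into [0, 1] relative to a hypothetical ceiling."""
    if paying_users <= 0:
        return 0.0
    return min(1.0, math.log1p(paying_users) / math.log1p(ceiling))


def blended_score(technical: float, adoption: float, alpha: float) -> float:
    """alpha = 1.0 ranks purely on technical performance; 0.0 purely on adoption."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return alpha * technical + (1 - alpha) * adoption


def rank(tools: list[tuple[str, float, int]], alpha: float) -> list[str]:
    """Order (name, technical_score, paying_users) tuples by blended score, best first."""
    ordered = sorted(
        tools,
        key=lambda t: blended_score(t[1], adoption_score(t[2]), alpha),
        reverse=True,
    )
    return [name for name, _, _ in ordered]
```

With two hypothetical tools, a "Benchmark leader" (technical 0.9, 1,000 users) and an "Adoption leader" (technical 0.3, 500,000 users), `rank(tools, 1.0)` puts the benchmark leader first and `rank(tools, 0.0)` flips the order. Publishing `alpha` makes the weighting debate concrete rather than implicit.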

The Plan Moving Forward

AI Power Rankings becomes the quantitative backbone for HyperDev's monthly analysis. The site provides systematic benchmarking. The newsletter provides context, experience, and strategic insight.

Short-term priorities:

Fix the Windsurf acquisition status

Add missing tools like Claude.ai Pro and ChatGPT Pro as coding platforms

Implement real-time data feeds for key metrics

Expand SWE-bench coverage to more tools
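Until real-time feeds exist, a cheap interim step is to record when each data point was last verified and flag anything outside a freshness window, which is how an unclosed deal like the Windsurf one would surface for re-checking. This is a minimal sketch assuming a hypothetical `MetricRecord` shape and a 14-day window; neither comes from the site itself.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class MetricRecord:
    tool: str
    metric: str          # e.g. "swe_bench_pct" or "acquisition_status" (hypothetical keys)
    value: str
    last_verified: date  # when a human or feed last confirmed the value


def stale_records(
    records: list[MetricRecord],
    today: date,
    max_age: timedelta = timedelta(days=14),  # hypothetical freshness window
) -> list[MetricRecord]:
    """Return records whose last verification is older than max_age."""
    return [r for r in records if today - r.last_verified > max_age]


if __name__ == "__main__":
    # Illustrative records, not real data.
    records = [
        MetricRecord("Tool A", "acquisition_status", "announced, not closed", date(2025, 6, 1)),
        MetricRecord("Tool B", "swe_bench_pct", "example", date(2025, 6, 15)),
    ]
    for r in stale_records(records, today=date(2025, 6, 20)):
        print(f"re-verify {r.tool} {r.metric} (last checked {r.last_verified})")
```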

Longer-term vision:

Custom benchmarks for enterprise use cases

Integration with GitHub data for adoption tracking

Community contributions for tool testing and evaluation

API access for developers building on the rankings

Why This Matters

The agentic coding revolution is happening faster than most realize. GitHub reports that 97% of developers have used AI coding tools. Google says more than 25% of its new code is AI-generated. These aren't experimental adoption numbers; this is infrastructure.

But choosing the wrong tool costs months of productivity and thousands in licensing. Teams need data-driven evaluation, not just enthusiastic reviews or marketing demos.

AI Power Rankings gives you the benchmark data. HyperDev gives you the strategic context. Together, they help developers make smarter tool decisions in a rapidly evolving landscape.

The site is live at aipowerranking.com. Expect frequent updates as I fix data issues and add new tools. This is version 0.1 of something that should become essential infrastructure for the developer tools ecosystem.

What tools are missing? What data points matter most to your team? Hit reply and help me build the ranking system the industry actually needs.

This hit my inbox at 5:51 am local time. Good to know that Claude is still king, since the reason I'm up at this ungodly hour is that I was rate-limited out of my Max account last night and had to wait five hours to get my compute back. I fear my token habit may be developing into an addiction. :)

If you're interested in peeking under the hood, post your GitHub username here and I'll add you as a collaborator on the project!