I spent $1.07 and 8 minutes fixing a bug today that would have cost me $376 to outsource. That estimate assumes an offshore developer at a typical $40/hour rate and roughly 9.4 hours of work, extrapolated from the agent execution time.

But here’s the thing about personal projects: my consulting time commands good rates, but for my own website? I’m not billing anyone. The comparison that matters isn’t theoretical cost savings. It’s $376 in actual engineering costs versus $1.07 in AI costs, plus 8 minutes of my attention versus the coordination overhead of managing another engineer. (I know the numbers are small, but bear with me; they’re meant to be illustrative.)

More importantly: this bug touched multiple system layers that would have traditionally required coordinating several specialists. RSS feed integration, data transformation, React rendering, deployment pipeline. I handled all of it solo using orchestrated AI agents.

Eight minutes to save $375 and tackle work requiring team coordination shows why we’re seeing heightened interest in agentic coding tools—though my experience represents a best-case scenario worth examining critically.

I tracked every token. I have the receipts. And the session logs reveal something more interesting than just impressive cost savings: they show exactly which scenarios deliver real value, and which don’t.

The Session: From Bug Report to Production

Here’s what actually happened. I noticed my personal website’s blog integration was broken. Posts weren’t loading correctly, the feed was throwing errors, and the whole thing needed fixing before it became a bigger problem.

Rather than hiring an engineer and managing the work, I used Claude-MPM to orchestrate four AI agents in a single tab:

Research Agent - Investigated the codebase, identified the broken blog feed integration, analyzed the data flow from Substack RSS to the site’s display logic.

Engineer Agent - Implemented the initial fix attempt, modified the feed fetching logic, updated the data transformation pipeline.

Web QA Agent - Ran Playwright tests against the staging deployment, verified the fix worked, validated all blog posts loaded correctly.

React Engineer - Handled the root cause analysis when the first fix proved incomplete, restructured the entire blog integration architecture, ensured production-ready quality.

My actual work? Writing the initial prompt, monitoring progress in a single tab while working on four other projects, and occasionally redirecting agents when they went down wrong paths. Eight minutes of attention across an hour of wall-clock time while the agents executed in parallel.

This is my normal workflow now: Claude-MPM managing agent coordination in one tab while I context-switch between five active projects. The orchestration framework handles the parallel execution and agent handoffs. I provide strategic direction and quality oversight.

The Economics: $1.07 Instead of $376 (Plus Coordination Overhead)

Let’s break down what this work actually cost, with transparent assumptions:

Token Consumption:

Total tokens: 104,641 tokens (52.3% of Claude’s 200K context window)

Input tokens: ~41,856 tokens (context, instructions, code)

Output tokens: ~62,785 tokens (analysis, code generation, fixes)

Cost Breakdown at Claude Sonnet 4.5 rates:

Input cost: $0.13 ($3 per million tokens)

Output cost: $0.94 ($15 per million tokens)

Total AI cost: $1.07
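The cost breakdown above can be reproduced in a few lines of Python. This is a minimal sketch that assumes flat per-token pricing at the rates quoted here, with no caching or batch discounts applied; the function and constant names are mine, chosen for illustration.

```python
# Per-million-token rates as quoted in this article for Claude Sonnet 4.5.
# Assumption: flat pricing, no caching or batch discounts.
INPUT_RATE_PER_MTOK = 3.00
OUTPUT_RATE_PER_MTOK = 15.00


def session_cost(input_tokens: int, output_tokens: int) -> tuple[float, float, float]:
    """Return (input_cost, output_cost, total_cost) in US dollars."""
    input_cost = input_tokens * INPUT_RATE_PER_MTOK / 1_000_000
    output_cost = output_tokens * OUTPUT_RATE_PER_MTOK / 1_000_000
    return input_cost, output_cost, input_cost + output_cost


# Approximate token counts from the session log above.
input_cost, output_cost, total_cost = session_cost(41_856, 62_785)
print(f"input ${input_cost:.2f}, output ${output_cost:.2f}, total ${total_cost:.2f}")
# prints: input $0.13, output $0.94, total $1.07
```

Output tokens are only about 60% of the volume but account for nearly 90% of the bill, because they are priced at five times the input rate.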

My Time Investment:

Active oversight: 8 minutes of attention

Wall-clock time: ~60 minutes while agents worked in parallel

Personal project time: Not billable to anyone

Alternative: Hiring an Engineer:

Offshore/nearshore developer at typical $40/hour rate (conservative market rate)

Estimated work: 9.4 hours (extrapolated from agent execution time plus debugging/testing)

Engineering cost: $376

My coordination time: Minimum 1 hour for requirements, review, deployment

Total traditional cost: $376 in hard costs plus coordination overhead

For personal projects where I can’t bill anyone, the relevant comparison is $376 in engineering costs versus $1.07 in AI costs. The dollar savings matter. But the time efficiency—eight engaged minutes for multi-system work—is what makes personal projects actually viable. This work would have stayed broken indefinitely because neither the cost nor the coordination overhead justified the fix.
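For anyone who wants to plug in their own rates, here is the same comparison as a small Python sketch. The hourly rate and the 9.4-hour estimate are my stated assumptions from above, not measured values, and the one hour of coordination time is deliberately left out because I am not pricing my own time on a personal project.

```python
# Figures quoted in this article. The hourly rate and hour estimate are
# assumptions, not measurements; swap in your own numbers.
HOURLY_RATE_USD = 40.00
ESTIMATED_HOURS = 9.4
AI_COST_USD = 1.07

engineering_cost = HOURLY_RATE_USD * ESTIMATED_HOURS
net_savings = engineering_cost - AI_COST_USD
cost_ratio = engineering_cost / AI_COST_USD

print(f"engineering cost: ${engineering_cost:.2f}")
print(f"net savings:      ${net_savings:.2f}")
print(f"cost ratio:       {cost_ratio:.0f}x")
```

With these inputs the net savings come to $374.93, which is where the rounded $375 figure comes from, and the ratio is roughly 351 to 1. The ratio is very sensitive to the hour estimate, so treat it as illustrative.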

Beyond Cost: Capability Expansion

The economics matter, but something else is happening: agentic tools expand what I can attempt without team support.

This blog integration issue touched multiple system layers—RSS feed parsing, data transformation, React component rendering, deployment pipeline. Five years ago, I would have needed multiple team members to handle this reliably: a backend engineer for the feed integration, a frontend specialist for the React components, a QA engineer to verify everything worked.

Now I orchestrate AI agents to handle all of it. This isn’t about replacing coding skill—I could write this code myself. It’s about managing complexity across multiple subsystems efficiently enough to be worth doing at all.

In practice, I’m now regularly tackling work that would have required team coordination:

Building full-stack features solo that would have required 2-3 engineers

Maintaining multiple production systems without dedicated devops support

Implementing complex integrations that would have needed specialized expertise

Deploying changes across distributed systems with confidence

I’ve seen similar patterns with other technical people, though the effectiveness varies by context:

Senior engineers shipping entire features without team support (when scope is well-defined)

Technical founders building production systems solo during early stages (though architectural complexity still requires expertise)

Staff engineers prototyping architectural changes across multiple services (within their existing systems)

Technical product managers implementing fixes without engineering allocation (for straightforward issues)

The bar for what technical people can attempt solo has shifted in some contexts—though complex projects still require specialized expertise and human oversight. Work that required team coordination and specialized roles can become feasible for individuals with good orchestration skills when the problem type fits.

What the Token Timeline Reveals

The session analytics show something interesting about how agentic work actually unfolds:

Token usage over the session (0 to 60 minutes):

50K: Initial context (project files, instructions)

60K: Investigation phase (research agent)

70K: First implementation attempts

80K: QA and deployment

90K: Root cause deep dive (largest spike)

100K: Cleanup & analytics

105K: Current

Nearly half the tokens (47.5%) went to initial context loading—establishing what the project was, how the code worked, and what needed fixing. Another 25% covered investigation and research. Only 15% went to actual implementation.
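To put rough token counts on those percentages, here is a short Python sketch. It simply applies the three shares quoted above to the session total; the remainder is whatever I did not break out separately, and the variable names are illustrative.

```python
TOTAL_TOKENS = 104_641

# Shares quoted in the paragraph above.
PHASE_SHARES = {
    "initial context": 0.475,
    "investigation and research": 0.25,
    "implementation": 0.15,
}

# Approximate tokens per phase, rounded to the nearest token.
tokens_by_phase = {
    phase: round(TOTAL_TOKENS * share) for phase, share in PHASE_SHARES.items()
}

# Whatever is left over is not attributed to a phase in the text.
remaining_share = round(1 - sum(PHASE_SHARES.values()), 3)
remaining_tokens = round(TOTAL_TOKENS * remaining_share)

for phase, tokens in tokens_by_phase.items():
    print(f"{phase:28s} {tokens:>7,d}")
print(f"{'not broken out':28s} {remaining_tokens:>7,d} ({remaining_share:.1%})")
```

Implementation comes out at about 15,700 tokens, less than a third of what it took just to load context.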

This distribution reveals the paradigm shift: I wasn’t coding. I was orchestrating intelligence to understand the problem, propose solutions, verify quality, and handle edge cases. The traditional developer workflow inverts when AI handles implementation while humans handle strategy.

The Orchestration Paradigm: From Hiring to Directing

Here’s what changed about how work gets done. Instead of managing agents manually across four separate tabs, I use Claude-MPM to handle agent coordination in a single tab. My attention switches between five active projects while the framework manages parallel execution.

Here’s how the work was split (tabs here are iTerm2 tabs, my go-to terminal):

Single Claude-MPM Tab:

Research agent analyzing the codebase

Engineer implementing fixes

QA agent testing deployments

React specialist handling complex restructuring

All coordinated through the orchestration framework

Four Other Project Tabs:

Client work continuing in parallel

No context switching penalty

Framework handles agent handoffs and progress tracking

My job shifted from implementation to strategic direction:

Initial prompt: “Blog feed is broken, investigate Substack RSS integration”

Occasional redirects: “The initial fix didn’t address root cause—investigate data transformation layer”

Quality checkpoints: “Verify all historical posts load, not just recent ones”

Final verification: “Document architectural decisions”

Traditional software development requires your full attention during execution. You write code, debug issues, test thoroughly, deploy carefully. Each step demands focus.

Orchestrated development enables parallel execution across multiple projects. The framework manages agents while you provide strategic oversight. Quality emerges from good specifications and periodic verification, not constant supervision.
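The handoff pattern from this session (research, first fix, QA checkpoint, escalation to a specialist) can be sketched as a toy Python pipeline. To be clear, this is not Claude-MPM's API or how it works internally. Every name here is hypothetical, the "agents" are stub functions, and the QA rule that only the second attempt passes is hard-coded purely to mimic what happened in my session.

```python
from dataclasses import dataclass, field


@dataclass
class TaskState:
    """Shared state handed from one (hypothetical) agent to the next."""
    goal: str
    notes: list[str] = field(default_factory=list)
    fix_attempts: int = 0
    verified: bool = False


def research_agent(state: TaskState) -> None:
    state.notes.append("research: traced RSS feed -> transform -> render")


def engineer_agent(state: TaskState) -> None:
    state.fix_attempts += 1
    state.notes.append("engineer: patched feed fetching")


def react_engineer_agent(state: TaskState) -> None:
    state.fix_attempts += 1
    state.notes.append("react engineer: restructured blog integration")


def qa_agent(state: TaskState) -> None:
    # Stub checkpoint: pretend only the second attempt makes all posts load.
    state.verified = state.fix_attempts >= 2
    state.notes.append(f"qa: verified={state.verified}")


def orchestrate(goal: str) -> TaskState:
    """Run the handoffs in order, escalating once if QA rejects the fix."""
    state = TaskState(goal)
    research_agent(state)
    engineer_agent(state)
    qa_agent(state)
    if not state.verified:
        react_engineer_agent(state)  # escalate to root-cause work
        qa_agent(state)
    return state


result = orchestrate("Blog feed is broken, investigate Substack RSS integration")
print("\n".join(result.notes))
```

The point of the sketch is the shape of the workflow: the human writes the goal and the quality checkpoint, and the handoffs between roles happen without further input unless a checkpoint fails.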

This approach works particularly well for personal projects where coordination overhead would kill the project entirely. My website bug wasn’t worth hiring an engineer—the coordination time would have cost more than the fix was worth. Claude-MPM made it viable by requiring only eight minutes of my attention.

Three things made this work so efficiently: there was no hiring overhead at all, the agents executed in parallel while I context-switched to other projects, and the whole fix needed only eight minutes of my attention across an hour of wall-clock time.

When the Numbers Don’t Work: The Long Tail Problem

Here’s what I need to be honest about: this session represents a best-case scenario across both dimensions—cost savings and capability expansion. The value doesn’t materialize consistently.

The Economics Long Tail:

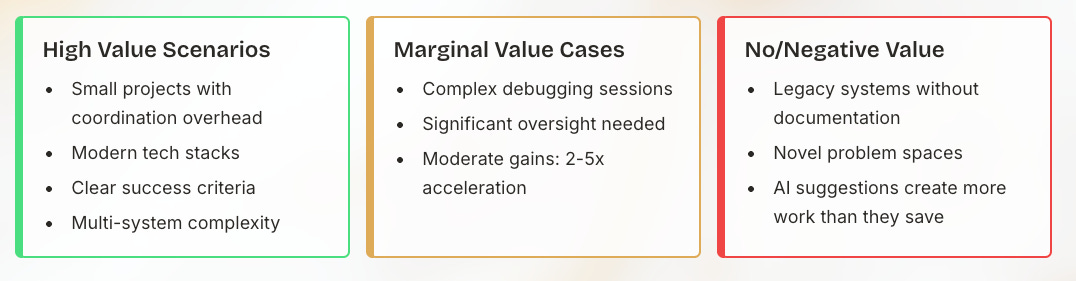

Success cases like this bug fix sit at one end of a distribution. At the other end are situations where AI assistance provides marginal value, no value, or negative value:

Marginal gains (2-5x cost reduction, limited capability expansion):

Work requiring significant oversight (coordination overhead remains)

Complex debugging for which you’d hire senior engineers anyway

Projects where verification time approaches implementation time

Multi-system work where AI lacks critical domain context

No gains (1x or worse):

Work requiring deep expertise that AI fundamentally lacks

Legacy systems with undocumented architectural decisions

Security-critical code where verification exceeds any AI savings

Projects where coordination overhead was never the limiting factor

Genuinely novel problem spaces with no relevant training data

Negative value:

Fixing AI-generated bugs costs more than hiring correctly

AI confidently provides wrong solutions requiring extensive debugging

Management overhead increases rather than decreases

AI suggestions waste more engineering time than they save

Multi-system changes introduce subtle integration failures

The distribution matters more than the peak. My bug fix achieved major cost savings ($376 vs $1.07) and demonstrated capability expansion (solo work spanning multiple systems). But across client work over the past three months, the aggregate value is more modest—typically 2-3x gains from acceleration rather than transformation.

What determines where you land on this curve?

High-value scenarios (cost + capability):

Small projects where coordination overhead dominates

Personal work not worth hiring for at any price

Multi-system fixes with clear success criteria

Modern tech stacks matching AI training data

Straightforward verification and testing

Moderate-value scenarios (primarily acceleration):

Work within managed teams requiring oversight anyway

Complex problems needing senior judgment but benefiting from AI assistance

Projects where you’re coordinating engineers regardless

Incremental features in well-understood systems

Low-value scenarios:

Legacy systems requiring extensive human context

Novel problem spaces with no training data

Security or performance-critical code demanding extensive review

Work where AI suggestions require more debugging than starting fresh

Political or organizational constraints on implementation approach

The heightened investor interest makes sense for the high-value category—massive volume of small projects plus capability expansion for individual contributors. Market skepticism makes sense for larger managed work where you’re hiring teams regardless.

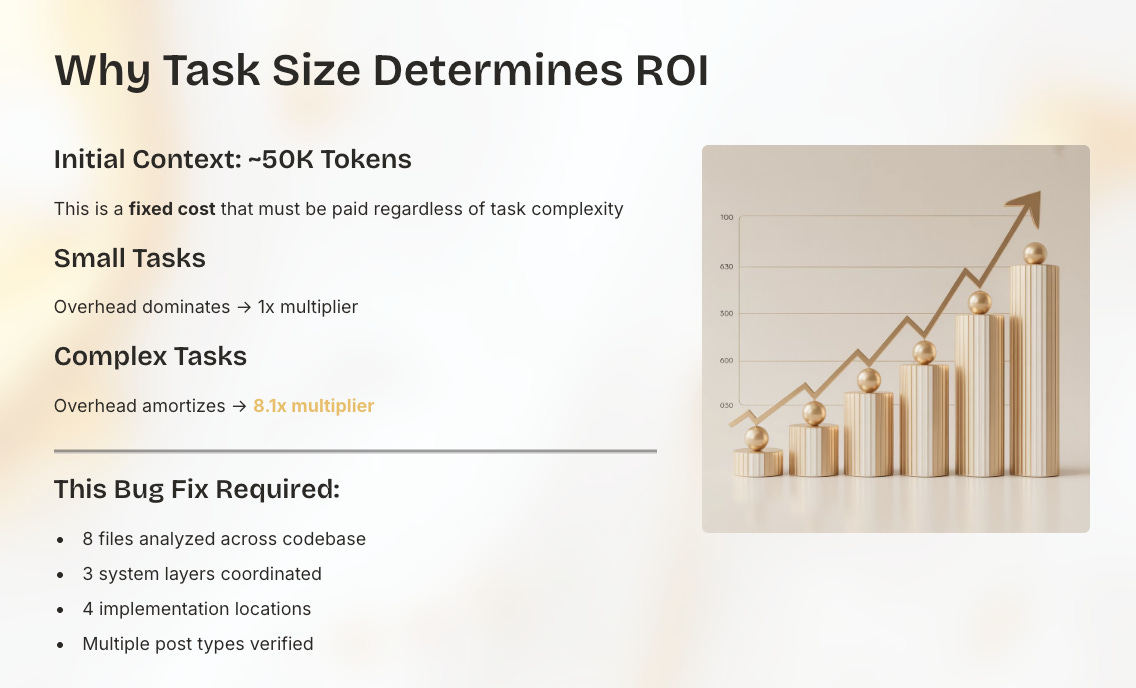

The Token Efficiency Story

Something interesting emerged from analyzing the session: token efficiency mattered more than raw capability.

Initial context loading consumed nearly 50,000 tokens—establishing project structure, reading configuration files, understanding the codebase. This overhead was identical whether I was fixing a trivial bug or implementing a complex feature.

For small tasks, that overhead dominates. A 5-line change doesn’t justify 50,000 tokens of context establishment. The productivity multiplier approaches 1x or worse.

For complex tasks spanning multiple files and subsystems, the overhead amortizes. My 8.1x multiplier came from a bug fix that required:

Analyzing 8 different files

Understanding data flow across 3 system layers

Implementing changes in 4 locations

Verifying behavior across multiple post types

Documenting architectural decisions

The same initial context cost, but much higher value from the work performed.
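A rough way to see the amortization is to compute what share of a session's tokens goes to the fixed context load. This is a minimal Python sketch assuming a flat 50,000-token overhead, as described above. The 2,000-token figure for a small change is a hypothetical number picked for illustration, not something I measured.

```python
# Assumption: context loading costs a flat ~50,000 tokens per session.
CONTEXT_OVERHEAD_TOKENS = 50_000


def overhead_share(work_tokens: int) -> float:
    """Fraction of a session's tokens spent on context loading."""
    return CONTEXT_OVERHEAD_TOKENS / (CONTEXT_OVERHEAD_TOKENS + work_tokens)


scenarios = {
    "small change (hypothetical 2,000 work tokens)": 2_000,
    "this session (104,641 total minus overhead)": 104_641 - CONTEXT_OVERHEAD_TOKENS,
}

for label, work_tokens in scenarios.items():
    print(f"{label}: {overhead_share(work_tokens):.0%} overhead")
```

Under these assumptions the small change spends about 96% of its tokens on context, while this session spent about 48%. The overhead is the same in both cases; only the amount of useful work sitting on top of it changes.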

Token efficiency insights:

Single-session work: No context restarts saved ~50,000 tokens

Targeted analysis: Research agent methodology minimized exploratory waste

Parallel processing: Multiple files analyzed simultaneously without token duplication

Smart caching: Repeated file access handled efficiently

The session used 52.3% of the available 200,000-token context window: enough to complete the work in one session, without wasting capacity. A Goldilocks utilization rate.

Why Investors Are Interested (And Where Skepticism Is Warranted)

The funding activity around AI coding tools—Augment’s $227M (enterprise-focused coding assistant), Magic’s $320M (autonomous coding agents), Codeium’s $150M (AI code acceleration platform)—reflects recognition of two distinct value propositions: cost savings on small projects and capability expansion for individual technical contributors.

Where the investment thesis makes sense:

The addressable market appears substantial across two dimensions:

Cost savings market: Small projects, personal sites, maintenance tasks—all the work below the “worth hiring for” threshold—suddenly become economically viable. Millions of these decisions happen daily.

Capability expansion market: Technical people at various levels handling multi-system work solo that traditionally required team coordination. Senior engineers, staff engineers, technical founders, technical PMs—all expanding what they can accomplish independently within certain contexts.

Both markets show real, measurable value when conditions align. Token costs decline while capabilities improve. The technology enables both cost arbitrage and skill amplification simultaneously.

Where caution is warranted:

Neither value proposition scales uniformly to all software work. Personal project economics don’t apply when you’d hire and manage teams regardless. Capability expansion has limits—complex projects still require specialized expertise and human oversight. Context windows constrain problem scope. Verification overhead grows with project complexity.

The peak performance cases, like my 8-minute fix that saved $376 while spanning multiple system layers, aren’t representative of median experience. Legacy systems, ambiguous requirements, and genuinely novel problems still resist automation.

My website bug represents the sweet spot: clear problem, modern stack, straightforward solution, multi-layer complexity. It demonstrates both cost savings (work that wouldn’t happen) and capability expansion (work that would have required team support).

Companies that can deliver consistent value across both use cases without overpromising tend to build sustainable businesses. Companies that only optimize for one scenario or suggest they can replace entire engineering organizations risk underdelivering on expectations.

What This Means for Practitioners

The session analytics reveal a practical framework for when to employ agentic coding tools across two distinct value dimensions:

Cost savings scenarios (personal projects, small fixes):

Projects too small to justify hiring (personal sites, side projects, maintenance)

Coordination overhead would exceed implementation value

Clear specifications and straightforward verification

Multiple independent tasks can run in parallel

Modern tech stacks with good AI training coverage

Capability expansion scenarios (individual technical work):

Multi-system work traditionally requiring team coordination

Full-stack features spanning frontend, backend, and infrastructure

Complex integrations across distributed systems

Prototyping architectural changes before team involvement

Maintenance across multiple production systems

Both scenarios benefit from:

Good orchestration frameworks (like Claude-MPM)

Clear problem specifications

Ability to verify results independently

Iterative refinement workflows

Deploy tactically when:

Accelerating work within managed teams (2-3x gains typical)

Augmenting rather than replacing hiring decisions

Clear subtasks within larger projects requiring human judgment

Prototyping before committing team resources

Avoid or minimize when:

Project complexity demands senior engineering judgment regardless

Legacy systems requiring extensive human context

Security or performance criticality mandates extensive review

Verification overhead approaches or exceeds implementation savings

The key questions aren’t just “Can AI do this work?” but rather:

Cost dimension: Would I have hired someone, or would coordination overhead kill the project?

Capability dimension: Does this require coordination across specialists I don’t have access to?

If either answer is yes—small projects, personal work, or multi-system complexity requiring team coordination—the value can be compelling. If you’d hire and manage a focused team anyway, the gains are more modest but still meaningful.

The Honest Bottom Line

My $1.07 bug fix demonstrates two valuable aspects of agentic coding tools: $376 saved in engineering costs (based on $40/hour offshore rates for 9.4 hours of work) and expanded capability to handle multi-layer system work solo that would have required team support.

The first value—cost savings on small projects—applies specifically to work that falls below the “worth hiring for” threshold. The second value—capability expansion—applies in certain contexts across technical roles attempting work that would have traditionally required coordination across specialized team members.

The recent funding activity makes sense when you consider both markets: the massive volume of small projects that become viable, and the expanded capability bar for what technical people can attempt independently in favorable conditions. Market skepticism makes sense when you focus on complex work requiring team oversight regardless of tooling.

Understanding which scenario you’re in determines what value you’ll see:

Personal projects and small fixes: Cost savings ($376 vs $1.07) and time efficiency (8 minutes vs coordination overhead) make previously unviable work viable.

Capability expansion: Technical people at various levels—senior engineers, staff engineers, technical founders, technical PMs—attempting full-stack or multi-system work that would have required team coordination, when the scope and context align.

Larger managed projects: More modest 2-3x acceleration where you’re managing teams anyway.

For my website bug fix? Both values delivered—work got done that otherwise would have stayed broken, and I handled complexity that would have required multiple specialists. For larger client work? The primary value comes from acceleration rather than enablement.

Both scenarios represent real value. Both are happening across the industry. The difference is knowing which applies to your situation and what you’re actually optimizing for.

I’m Bob Matsuoka, writing about agentic coding and AI-powered development at HyperDev. The orchestration framework used in this article is Claude-MPM, my open-source multi-agent project management system. For more on orchestration strategies, read my analysis of multi-agent coordination patterns or my comparison of orchestration frameworks.