When Google announced Jules at I/O 2025, my reaction was split between genuine interest and a familiar sense of déjà vu. On one hand, a PR-based autonomous coding agent from Google sounds promising. On the other hand—another one?

Google already has Firebase Studio, which feels like v0 but somehow worse. Now they're entering the autonomous agent space with Jules, positioning it as a competitor to tools like OpenAI's Codex. Given Google's resources and engineering talent, I expected this to be a more polished entry into the market.

I was wrong.

What Jules Promises

Jules is designed as a PR-based autonomous agent that can read your codebase, understand issues, and implement fixes directly through GitHub pull requests. Powered by Gemini 2.5 Pro, it operates asynchronously in secure Google Cloud VMs, promising to handle everything from bug fixes to feature development. The concept is solid—point it at a problem, let it analyze your code, and watch it create a targeted solution.

The integration approach makes sense too. Rather than trying to replace your development environment, Jules works within the GitHub ecosystem you're already using. It's meant to be the autonomous contributor that never sleeps, handling routine fixes and feature implementations while you focus on architecture and strategy.

The Reality: Day One Disappointment

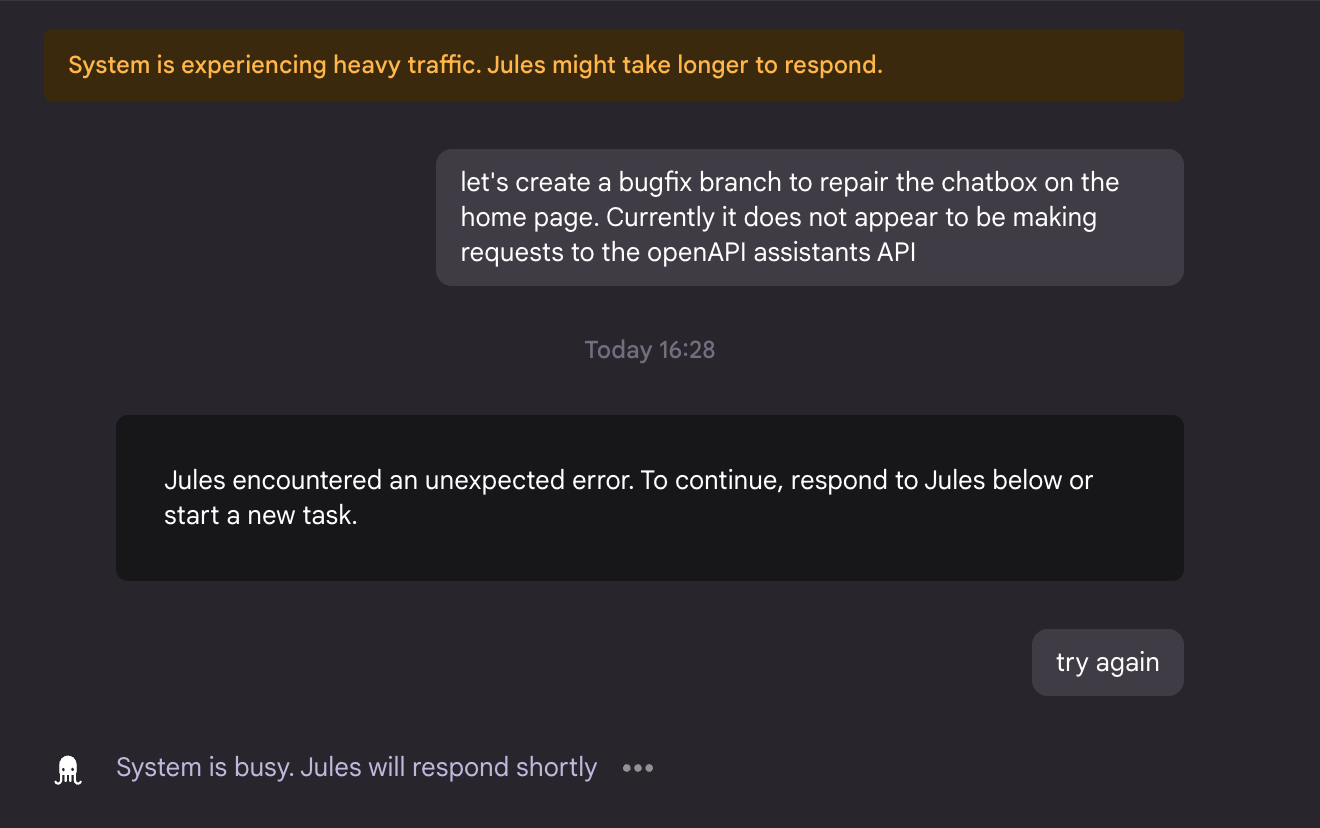

My first attempt was straightforward: fix a chatbox on a homepage that wasn't making requests to the OpenAI Assistants API. It was a simple debugging task with a clear scope, exactly the kind of work an autonomous agent should handle well.

Jules immediately hit heavy traffic issues. I wasn't alone in this experience—multiple users reported access problems due to overwhelming demand. The developer community echoed similar frustrations, with widespread reports of "System is experiencing heavy traffic" messages lasting for hours. One user described giving Jules "a big task" in the morning, then waiting "several hours" with only traffic warnings.

The system couldn't handle basic load, throwing "unexpected error" messages before I could even get started. When it finally responded, the conversation was more frustrating than helpful.

This was basically every other response... and yes, these errors ate up the 5 whole credits Google gave me for beta testing.

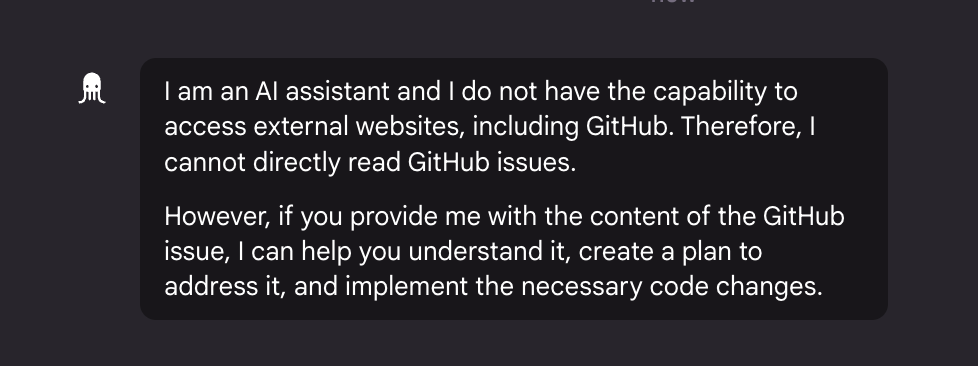

I asked if it could read GitHub issues directly. The response was telling: "I am an AI assistant and I do not have the capability to access external websites, including GitHub. Therefore, I cannot directly read GitHub issues."

But... but... *it works by pulling code from GitHub!*

This kind of disconnect between what Jules is built on and what it will actually do became a common complaint among early users. Multiple developers described giving Jules "pretty explicit instructions" about their codebase, only to have it "do what it wanted and failed." Each of those failures wasted one of their precious 5 daily credits on something they had specifically told it not to do.

Missing the Mark on Basic Functionality

The limitations became more apparent as I continued testing. Jules asked if I wanted to enable notifications for when "a plan is ready or code is ready for review." This suggests it does eventually produce work, but the path to get there involves significant manual intervention.

Reports from the developer community revealed the broader scope of these issues. Users reported mixed results on code quality, with one comparison showing Jules writing 2,512 lines versus Codex's 77 lines for the same task. More code isn't necessarily worse, but users debated whether Jules's output was "overcomplicated with code duplication and unnecessary boilerplate."

Others hit timeout issues. "Jules was unable to complete the task in time" became a common complaint, with the tool failing to finish work within its allocated timeframe. The 5-tasks-per-day limit meant these failures burned through users' daily quota quickly.

Compare this to Codex Web, which reads your full codebase, understands context, and operates with minimal friction. Jules feels like it's solving the wrong problem—asking developers to become intermediaries in their own automation workflow.

What Google Got Wrong

Reflecting on this experience, the most concerning aspect isn't the expected growing pains of a beta release. Google's own FAQ acknowledges that task failures are common, citing "broken setup scripts or vague prompts" as typical causes. These operational issues are fixable with time and resources.

Rather, it's the fundamental design decisions that suggest Jules wasn't built by people who actually use autonomous coding tools regularly. If you're building a GitHub-integrated coding agent, direct repository access should be table stakes. Requiring developers to manually copy issue content defeats the entire purpose of automation—it's like building a self-driving car that needs someone to read the road signs out loud.

Google has the engineering resources to solve these problems. They have access to vast amounts of code and sophisticated language models. Yet Jules feels rushed to market, more concerned with matching competitor announcements than delivering genuine value.

The Broader Picture

This release highlights a concerning pattern in how big tech companies approach AI tooling. There's pressure to ship something, anything, to show they're not falling behind. But shipping broken tools that frustrate developers doesn't advance the field—it sets it back.

Firebase Studio suffered from similar issues. Instead of building something genuinely better than v0, Google shipped something that felt inferior. Now they're repeating the pattern with autonomous coding agents.

The autonomous coding space is heating up rapidly, with multiple players announcing similar tools within days of each other. Microsoft revealed its own background coding agent in GitHub Copilot at Build, and the community is already comparing capabilities. But this rush to market seems to prioritize announcements over user experience.

The developer feedback reveals this tension clearly. While some users found success with simple tasks, describing 5-minute fixes that "worked perfectly," others struggled with server slowness, task timeouts, and the tool ignoring explicit instructions. The 5-task daily limit means every failure hurts, burning through your quota with nothing to show for it.

Should You Try Jules?

Not yet. Save yourself the frustration.

Jules might evolve into something useful as Google addresses these fundamental limitations. The company has the resources and talent to fix what's broken. Some developers acknowledge it's "experimental/alpha" and expect improvements, noting that Google released it free specifically to gather feedback.

But the infrastructure problems combined with basic design flaws suggest Google underestimated both demand and the core requirements for autonomous coding agents. I'll revisit Jules when it can handle basic tasks without requiring manual workarounds and when the servers can actually stay online under load.

Until then, there are better autonomous coding tools available that actually work as advertised. The autonomous coding space is moving fast, with real innovation happening from smaller, more focused teams. Google's entry feels like they're playing catch-up rather than leading, and it shows in the execution.

Thanks, Bob! You literally saved the day... which I can now spend checking out Claude 4.0 instead :)