I went into Factory AI rooting for it—because the premise, frankly, is what the next era of agentic coding should look like.

Multiple specialized agents handling different aspects of development. A local MCP bridge connecting to your actual environment (something I use extensively in my own AI workflows). The promise of truly autonomous coding—not just assistance, but "set it and forget it" development where you hand off a task and come back to finished work.

After spending time with CodeDroid on a real bug fix for my AI code review tool, here's what I found: great architectural vision, flawed execution, and a product that isn't ready for serious development work.

What Factory AI Gets Right

The specialized agents concept makes sense. Instead of one monolithic AI trying to handle everything from coding to testing to documentation, Factory breaks this into distinct Droids: CodeDroid for implementation, Review Droid for pull requests, QA Droid for testing, and others for specific workflow stages.

The local MCP bridge approach clicked with how I already work. Having an agent that can actually interact with your local environment—run builds, execute commands, understand your project structure—feels like the natural evolution beyond browser-based coding assistants.

Most importantly, CodeDroid actually solved the specific bugs I reported. It understood my workflow for creating GitHub issues, worked properly in a feature branch, and delivered fixes for the problems I described. That's table stakes, but worth noting when so many AI tools struggle with basic task completion.

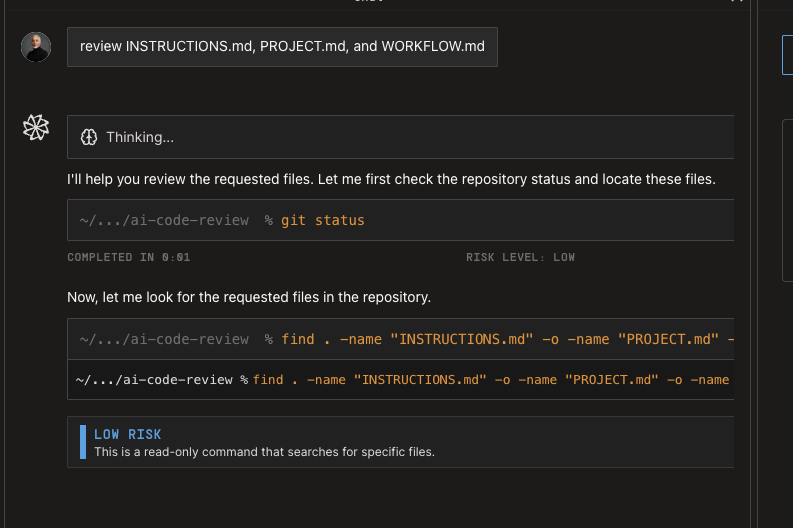

The CodeDroid Session UI

The Fundamental Architecture Problem

Here's where things get interesting, and not in a good way.

Factory markets itself as autonomous, but requiring users to manually switch between agent "modes" isn't autonomous behavior. A proper multi-agent system should have an orchestrator agent that takes your task, understands the full workflow, and delegates appropriately. It should hand off to documentation agents when specs need updating, QA agents when testing is required, research agents when context gathering is needed—and just return results.
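To make the orchestrator idea concrete, here is a minimal sketch of what I mean. It is not Factory's architecture or API. The stage names, the `Agent` type, and the `orchestrate` function are all hypothetical, and the agents are stubs standing in for real model calls.

```typescript
// Hypothetical types: none of these names come from Factory's product or API.
type Stage = "research" | "code" | "qa" | "docs";

interface StageResult {
  stage: Stage;
  ok: boolean;
  summary: string;
}

// An agent is modeled as a plain function from a task description to a result.
type Agent = (task: string) => StageResult;

// Run each stage in order and stop at the first failure, so a failed QA
// stage is surfaced instead of being papered over by later stages.
function orchestrate(
  task: string,
  agents: Record<Stage, Agent>,
  plan: Stage[] = ["research", "code", "qa", "docs"],
): StageResult[] {
  const results: StageResult[] = [];
  for (const stage of plan) {
    const result = agents[stage](task);
    results.push(result);
    if (!result.ok) break;
  }
  return results;
}

// Stub agents standing in for real model calls (assumed behavior).
const stubAgents: Record<Stage, Agent> = {
  research: (t) => ({ stage: "research", ok: true, summary: `context gathered for: ${t}` }),
  code: (t) => ({ stage: "code", ok: true, summary: `patch drafted for: ${t}` }),
  qa: () => ({ stage: "qa", ok: false, summary: "build failed" }),
  docs: () => ({ stage: "docs", ok: true, summary: "docs updated" }),
};

const run = orchestrate("fix review-tool bug", stubAgents);
console.log(run.map((r) => `${r.stage}: ${r.ok ? "ok" : "failed"}`).join(" -> "));
```

The point of the sketch is the shape: the user hands over one task, and the handoff between roles happens inside the system rather than in a menu.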

Right now, it's more like an app switcher for different AI roles than a true autonomous system. You pick your agent like you're picking a tool from a menu—not assigning a task and letting the system run with it.

When I asked CodeDroid to handle my bug fix, it never automatically transitioned to testing phases or involved other agents. I got code changes but no validation that the broader system still worked. For something positioning itself as enterprise-grade autonomous development, this feels like a fundamental architectural oversight.

Speed That Kills Productivity

The performance issues are brutal. Next to Cursor's sub-30-second turnarounds or Claude Code's near-instant drafts, Factory's response times felt stuck in staging. This wasn't marginally slower; it was painfully, productivity-killingly slow.

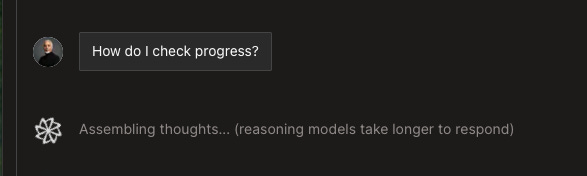

The bigger problem is feedback. Factory's UX assumes you'll start a task and walk away, but even if that's the intended workflow, you need meaningful progress indicators. Instead, you get minimal feedback during long operations, making it impossible to tell if the system is working or stuck.

This might be token conservation at play—enterprise pricing models often encourage batched operations over interactive responsiveness. But when tools like Claude Code deliver near-real-time responses with superior quality, the value proposition breaks down.

I asked this question frequently.

UX Contradictions

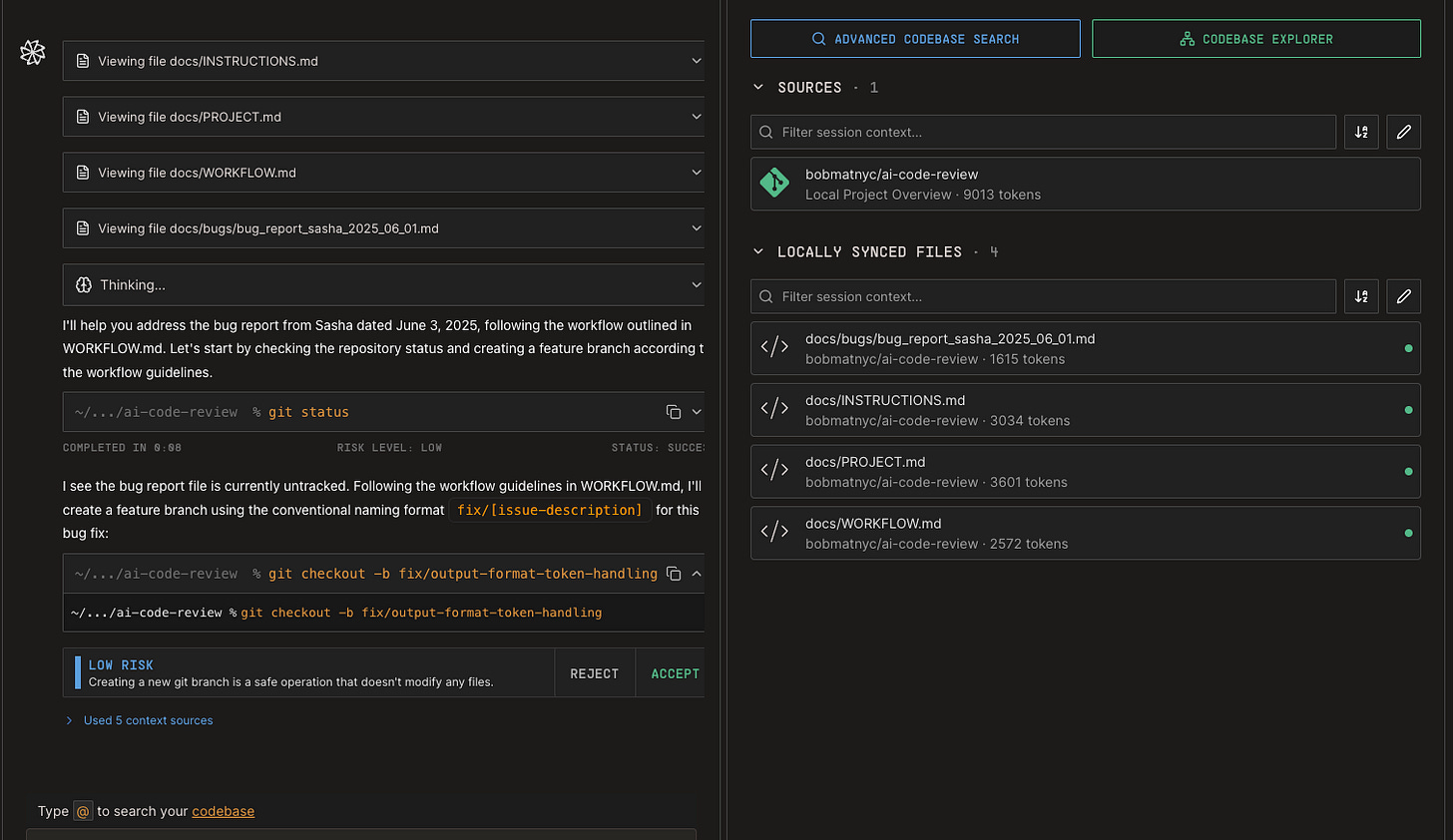

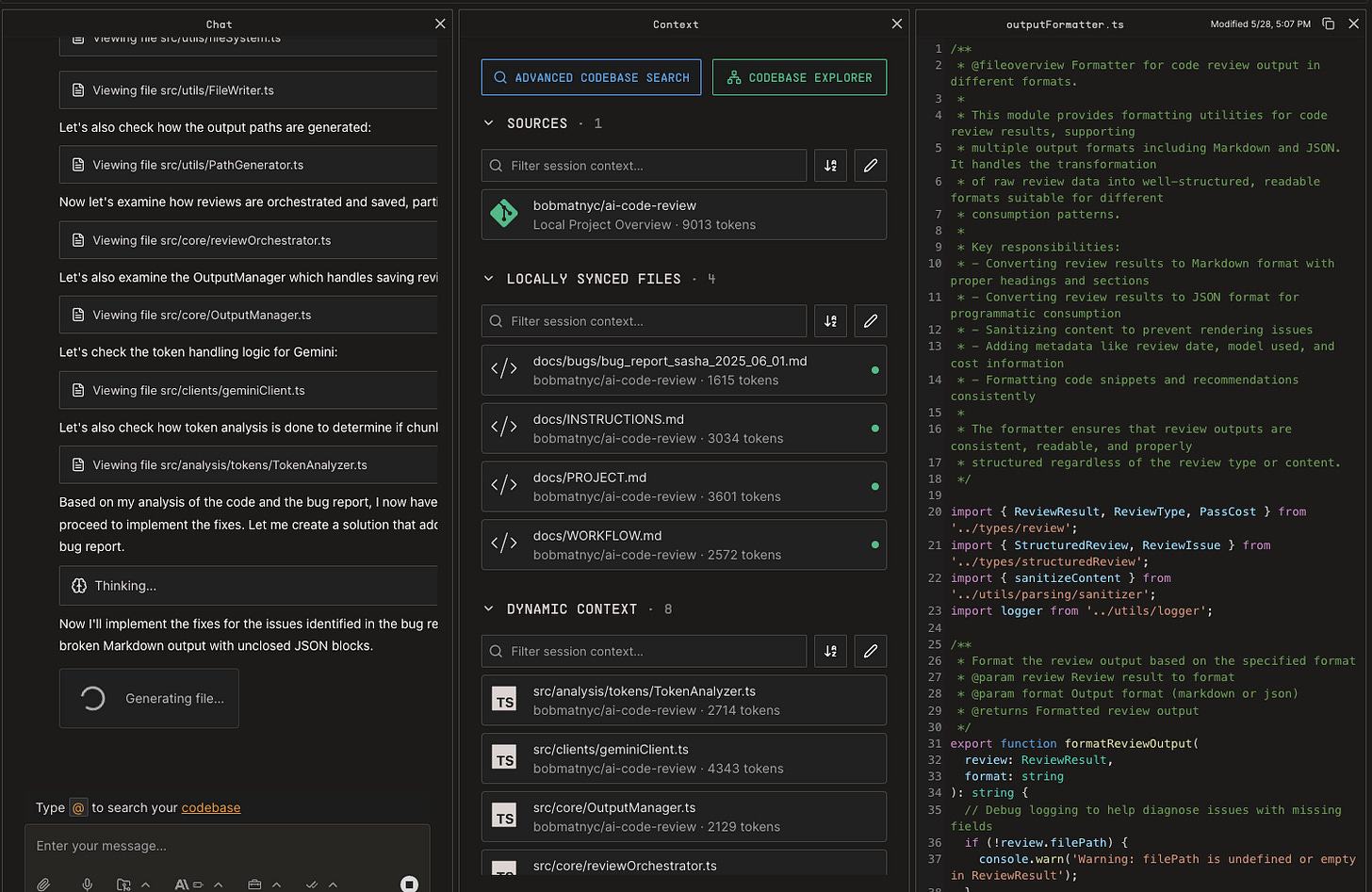

The interface reveals more complexity than it should. If autonomy is the goal, the system shouldn't be asking me to watch a context panel like a flight dashboard. But that's exactly what Factory does—three separate windows (session, context, history) compete for attention when the promise is hands-off operation.

Basic usability issues compound the problem. The history window proved useless—I could never parse what tasks were actually running. Multiple times, dialog boxes for accepting changes were hidden behind other columns, leaving me confused about why the system appeared stuck.

When CodeDroid needed permissions for non-trivial operations, it required manual approval or would halt progress. Again, this contradicts the autonomous positioning. Either work in a feature branch where it can do everything safely, or build proper permission models that don't require babysitting.
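A permission model along those lines does not need to be elaborate. The sketch below is one way to express it, under assumptions of my own: the operation names, the list of protected branches, and the `decide` function are hypothetical and not taken from Factory.

```typescript
// Hypothetical policy: branch names and operation kinds are assumptions.
type Operation =
  | "edit-file"
  | "run-build"
  | "run-tests"
  | "install-deps"
  | "push"
  | "delete-branch";

type Decision = "allow" | "ask";

// Branches where every operation should still require a human.
const PROTECTED_BRANCHES = new Set<string>(["main", "master", "release"]);

// Operations that reach beyond the local working copy, even on a feature branch.
const ALWAYS_ASK = new Set<Operation>(["push", "delete-branch"]);

function decide(branch: string, op: Operation): Decision {
  if (PROTECTED_BRANCHES.has(branch)) return "ask";
  if (ALWAYS_ASK.has(op)) return "ask";
  // Everything else on a feature branch is local and reversible.
  return "allow";
}

console.log(decide("fix/review-bug", "run-build")); // local work on a feature branch
console.log(decide("main", "edit-file")); // protected branch
```

With a policy like this, routine work on a feature branch never blocks on an approval dialog, and the approvals that remain are the ones worth interrupting for.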

Factory AI’s column-based UX. The session and context columns are shown; the useless history column is not. Note the “Accept” button: it is sometimes hidden behind the context column, making you wonder why things have paused.

Execution Gaps That Undermine Trust

CodeDroid fixed the specific issues I reported, which is good. But it didn't catch other TypeScript compilation errors and linting issues that already existed in the codebase. To be clear, it didn't cause these problems, but it didn't identify them either. Worse, it claimed the build succeeded and said it had fixed these problems, yet when I actually compiled and ran the code, it failed.

I'm not sure how it tested its changes if the build was broken. Maybe that's QA Droid's job, but without automatic handoff between agents, these gaps become visible and concerning.
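What I would expect instead is a verification gate: no success report until the type check and linter actually exit cleanly. The sketch below shows the idea and is not how CodeDroid works internally. The `verify` function and its types are hypothetical, and the command runner is injected so the example stays self-contained. In a Node.js project it would likely wrap `child_process.spawnSync`. The two checks (`tsc --noEmit` and `eslint .`) are my assumption about a typical TypeScript setup.

```typescript
// Result of one external command; status 0 means success, as with exit codes.
interface CommandResult {
  status: number | null;
  output: string;
}

// Injected so the sketch runs without touching a real toolchain.
type CommandRunner = (cmd: string, args: string[]) => CommandResult;

interface Check {
  name: string;
  cmd: string;
  args: string[];
}

// Assumed checks for a TypeScript project; adjust to the project's own scripts.
const CHECKS: Check[] = [
  { name: "typecheck", cmd: "npx", args: ["tsc", "--noEmit"] },
  { name: "lint", cmd: "npx", args: ["eslint", "."] },
];

interface Verdict {
  passed: boolean;
  failures: string[];
}

// Run every check and collect failures instead of stopping at the first one,
// so the report lists everything that is broken.
function verify(run: CommandRunner, checks: Check[] = CHECKS): Verdict {
  const failures: string[] = [];
  for (const check of checks) {
    const result = run(check.cmd, check.args);
    if (result.status !== 0) {
      failures.push(`${check.name}: ${result.output.trim()}`);
    }
  }
  return { passed: failures.length === 0, failures };
}

// Fake runner for demonstration only: the type check fails, lint passes.
const fakeRunner: CommandRunner = (_cmd, args) =>
  args[0] === "tsc"
    ? { status: 2, output: "error TS2322: Type 'string' is not assignable to type 'number'." }
    : { status: 0, output: "" };

const verdict = verify(fakeRunner);
console.log(
  verdict.passed
    ? "safe to report success"
    : `do not report success: ${verdict.failures.join("; ")}`,
);
```

An agent that gates its "done" message on a verdict like this cannot claim a clean build while the compiler is still failing.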

The system also failed silently on GitHub API integration. I have a specific workflow where my AI tools create issues using environment variables, but CodeDroid couldn't execute it, and it acknowledged the limitation only after attempting the work. For enterprise-grade tooling, upfront capability assessment should be standard.
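An upfront capability check can be as simple as confirming that each planned step has what it needs before any work starts. The following is a rough sketch under my own assumptions: the `preflight` function, the capability names, and the environment variable names `GITHUB_TOKEN` and `GITHUB_REPO` are illustrative, not the actual variables from my workflow or anything Factory exposes.

```typescript
// A capability the agent plans to use, with the environment it depends on.
interface Capability {
  name: string;
  requiredEnv: string[];
}

interface PreflightReport {
  ready: string[];
  blocked: { name: string; missing: string[] }[];
}

// Check every capability before starting, so limitations are reported
// up front rather than discovered halfway through the task.
function preflight(
  capabilities: Capability[],
  env: Record<string, string | undefined>,
): PreflightReport {
  const report: PreflightReport = { ready: [], blocked: [] };
  for (const cap of capabilities) {
    const missing = cap.requiredEnv.filter((key) => !env[key]);
    if (missing.length === 0) {
      report.ready.push(cap.name);
    } else {
      report.blocked.push({ name: cap.name, missing });
    }
  }
  return report;
}

// Hypothetical variable names; in Node.js the second argument would
// typically be process.env.
const report = preflight(
  [
    { name: "create-github-issue", requiredEnv: ["GITHUB_TOKEN", "GITHUB_REPO"] },
    { name: "run-build", requiredEnv: [] },
  ],
  { GITHUB_REPO: "owner/repo" },
);

for (const item of report.blocked) {
  console.log(`cannot ${item.name}: missing ${item.missing.join(", ")}`);
}
```

Telling me "I can't create the issue, the token isn't visible in this environment" before starting would have cost nothing and saved a failed attempt.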

Three-column interface with a code editor.

Quality Doesn't Match the Claims

Despite reportedly using Sonnet models, the output quality felt inferior to what I get from Claude Code or Augment Code. This might be prompt engineering, context management, or architectural overhead, but the results matter more than the underlying model choice.

When you're positioning as premium enterprise tooling with autonomous capabilities, the bar is high. Traditional "assisted" tools like Cursor feel more autonomous in practice because they maintain momentum, provide clear feedback, and deliver reliable results.

The Trust Problem

Autonomous coding only works if you trust the outcomes. And trust, in this space, isn't earned by marketing—it's earned by builds that run and issues that don't come back two days later.

When CodeDroid claims it fixed linting errors but leaves a broken build, when it reports success on code that doesn't compile, when it fails silently on API integrations, confidence erodes quickly. The fundamental promise is that you can hand off work and trust the results. But if you still need to verify everything manually, validate builds, and debug what actually happened, the tool hasn't delivered on its core value proposition.

How I'd Fix This

Look at what actually works in autonomous AI development. Codex Web gives you a clean dashboard view of progress with real-time updates. Jules keeps the interface minimal because the AI does the heavy lifting. Devin doesn't even have a traditional interface—you communicate via chat and it shows you results.

Factory has this backwards. They're exposing complexity that should be hidden while failing to provide the essential feedback you actually need.

If I were Factory, here's what I'd build: A dashboard that shows clear progress indicators and task delegation in real-time. Full autonomous operation within feature branches—no permission requests for basic operations. Automatic orchestration between agents without manual mode switching. And actual integration with workflow tools—not just API calls that silently fail, but native JIRA, GitHub Issues, and Linear integration that works reliably.

The current three-column interface feels like they're trying to show off their technical sophistication rather than solving user problems. That's not enterprise software—that's demo software.

Where This Goes From Here

Factory AI has raised significant funding ($20M+) and secured enterprise customers, suggesting the market believes in the vision. The multi-agent architecture, local environment integration, and autonomous positioning represent thoughtful approaches to where agentic coding should evolve.

But execution matters. Speed issues make the tool unusable for iterative development. UX contradictions undermine the autonomous claims. Quality gaps break trust when you most need reliability.

I see potential here, and hopefully they'll get there. The concept is sound, and some foundational pieces work. Until Factory closes its execution gap, it's not ready for prime time. The ideas are there. The trust isn't.

For teams evaluating agentic coding tools, stick with Cursor, Claude Code, or Augment Code until Factory addresses these fundamental execution problems. The promise of true autonomous development is compelling, but promise isn't product.

Tested on Factory AI CodeDroid beta with a TypeScript/Node.js project. Your experience may vary, especially as they iterate on performance and UX issues.