DORA 2025: AI as Amplifier, not Magic Wand

Inside Google's just-released "State of AI-assisted Software Development" report and a new paradigm for agentic coding

TL;DR

• 90% of developers use AI, but only 17% use autonomous agent mode daily - a massive trust gap persists despite productivity claims

• AI amplifies existing team dynamics - high performers get better, struggling teams see increased dysfunction and a 7.2% drop in delivery stability

• Seven organizational capabilities determine AI success - platform quality, data access, version control, small batches, user focus, clear policies, and AI stance

• 30% of developers don’t trust AI code they use daily - productivity perception doesn’t match measured reality (METR study found 19% slowdown)

• Platform engineering > tool sophistication - data quality and workflow integration matter more than cutting-edge AI models

• Paradigm shift required - treat LLMs as different entities requiring specialized environments, not better human coding assistants

• CLI/terminal tools gaining traction - command-line interfaces work better with AI than visual IDEs designed for humans

• Success depends on restructuring development - teams need AI-optimized workflows, not AI bolted onto human-centric processes

Google Cloud’s 2025 DORA “State of AI-assisted Software Development” report reveals a striking reality: AI doesn’t fix broken teams; it amplifies what’s already there. Drawing on a global survey of nearly 5,000 technology professionals and over 100 hours of qualitative data, the report finds that while 90% of developers now use AI tools, only high-performing organizations see genuine benefits. For struggling teams, AI intensifies existing dysfunction, with delivery stability dropping 7.2% and throughput declining 1.5% despite individual productivity gains.

The significance extends beyond adoption statistics. DORA’s research represents a fundamental repositioning of AI from experimental tool to organizational transformation catalyst. The report introduces a seven-capability framework that determines whether AI becomes an asset or a liability, with platform engineering quality and user-centric focus emerging as non-negotiable prerequisites. This marks a dramatic shift from DORA’s cautious 2024 stance, which Accelerate co-author Gene Kim now calls “the DORA 2024 anomaly,” toward acknowledging AI’s permanent place in development workflows. For technical practitioners evaluating agentic coding technologies, the implications are clear: organizational readiness matters more than tool sophistication.

The autonomous agent adoption gap reveals developer hesitation

The report exposes a massive disconnect between assisted and autonomous AI adoption that speaks directly to the agentic coding landscape. While 71% of developers use AI for writing new code through human-supervised methods, only 17% use agent mode daily where AI makes autonomous changes. Even more telling, 61% never use autonomous agent mode at all, despite 80% reporting productivity improvements from assisted AI.

This gap illuminates a critical trust paradox at the heart of AI-assisted development. 30% of surveyed developers report little to no trust in AI-generated code, even as they use it daily. DORA lead Nathen Harvey captured this tension precisely: “Having 100% trust in the output is wrong. Having 0% trust is wrong. There’s something in the middle that’s right.” The data suggests developers have found their middle ground in assisted tools while remaining deeply skeptical of full autonomy. Chatbot interactions lead at 55% usage, followed by IDE integrations at 41%, but autonomous workflows remain niche. For agentic AI vendors, this presents both challenge and opportunity—the market clearly exists, but trust barriers remain formidable.

The report’s methodology provides robust backing for these findings. Researchers conducted 78 in-depth interviews during capability identification, then validated findings through rigorous survey methodology across the nearly 5,000 respondents. The three-phase process—capability identification, survey validation, and impact evaluation—used cluster analysis to identify team archetypes and correlation analysis to measure AI’s differential impacts. This methodological rigor distinguishes DORA’s conclusions from vendor-sponsored studies that often paint rosier pictures.

Seven team archetypes expose AI’s magnification effect

DORA’s cluster analysis revealed seven distinct team profiles that demonstrate how AI amplifies existing organizational dynamics. At the top, “Harmonious High Achievers” representing 20% of teams excel across product performance, delivery throughput, and team well-being metrics. These teams leverage AI to accelerate their existing strengths, achieving measurable productivity gains without sacrificing stability. At the bottom, 10% of teams struggle with “Foundational Challenges,” trapped in what researchers call “survival mode” with high burnout, friction, and system instability. For these teams, AI introduction intensifies dysfunction rather than solving it.

The middle tiers reveal nuanced patterns. 45% of teams operate at the fifth performance level or higher, suggesting reasonable AI readiness, while the remaining 25% distribute across intermediate archetypes including “Legacy Bottleneck” teams hampered by technical debt. The report’s six-metric framework—product performance, software delivery throughput, delivery instability, burnout, friction, and valuable work focus—provides a multidimensional view that traditional productivity metrics miss. This human-centric approach acknowledges that raw output increases mean little if they create downstream chaos or team burnout.

Most critically, the archetype analysis confirms AI’s amplifier effect with quantitative precision. High-performing teams see AI boost efficiency across all measured dimensions. Struggling teams experience increased delivery instability, higher friction, and accelerated burnout as AI-generated volume overwhelms inadequate processes. This finding challenges the prevalent narrative that AI democratizes development capabilities—instead, it suggests AI may widen the gap between strong and weak organizations.

The seven capabilities model offers practical transformation roadmap

DORA’s AI Capabilities Model identifies seven foundational practices proven to amplify AI’s positive impacts while mitigating risks. Clear and communicated AI stance tops the list—organizations with explicit policies on tool usage, experimentation boundaries, and support structures see reduced friction regardless of specific policy content. This addresses the ambiguity that stifles adoption while providing psychological safety for experimentation.

High-quality internal data and AI access to that data form the technical foundation. The report finds AI benefits are “significantly amplified” when tools can leverage organizational context through clean, accessible, unified data sources. Without this foundation, AI remains a generic assistant rather than a specialized force multiplier. Strong version control practices become even more critical as AI accelerates change volume—teams proficient in rollbacks and frequent commits see amplified effectiveness, while those with weak practices face increased instability.

The remaining capabilities address workflow and cultural factors. Working in small batches—a long-standing DORA principle—proves “especially powerful in AI-assisted environments,” countering AI’s tendency to enable larger, riskier changes. User-centric focus emerges as perhaps the most critical factor: without it, the report finds AI adoption can have negative impacts on team performance. Finally, quality internal platforms provide the shared capabilities needed to scale AI benefits—90% of organizations have adopted at least one platform, with direct correlation between platform quality and AI value realization.
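One way to make the small-batch principle concrete is a size check run before a change is merged. The sketch below is illustrative only: the `ChangeSet` type, the function name, and the default limits of 200 lines and 10 files are assumptions, not values from the DORA report.

```python
from dataclasses import dataclass


@dataclass
class ChangeSet:
    """Hypothetical summary of one proposed change (for example, a pull request)."""
    files_changed: int
    lines_added: int
    lines_deleted: int


def exceeds_batch_limit(change: ChangeSet, max_lines: int = 200, max_files: int = 10) -> bool:
    """Return True when the change is larger than the team's agreed batch size.

    The default limits are placeholders; a team would tune them to its own history.
    """
    total_lines = change.lines_added + change.lines_deleted
    return total_lines > max_lines or change.files_changed > max_files


small = ChangeSet(files_changed=3, lines_added=40, lines_deleted=12)
large = ChangeSet(files_changed=25, lines_added=900, lines_deleted=300)
print(exceeds_batch_limit(small))  # False
print(exceeds_batch_limit(large))  # True
```

A check like this matters more when AI tools make it cheap to generate large diffs, since the reviewer's capacity does not grow with the generator's output.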

Platform engineering emerges as the hidden multiplier

The report’s emphasis on platform engineering as foundational for AI success challenges teams focused primarily on tool selection. 90% of surveyed organizations have adopted at least one internal platform, and platform quality correlates directly with the value they realize from AI. This isn’t about sophisticated tooling but rather the “shared capabilities needed to scale AI benefits across the organization.”

Value Stream Management (VSM) plays a crucial role in preventing what the report calls “local productivity gains causing downstream chaos.” As individual developers accelerate with AI assistance, weaknesses in testing, deployment, or operations become acute bottlenecks. VSM ensures end-to-end flow optimization, connecting AI-driven productivity to measurable product performance rather than mere activity metrics. The report’s finding of 7.2% delivery stability reduction despite productivity gains illustrates what happens without this holistic view. Teams that treat AI adoption as isolated tool deployment face increased instability as AI-generated volume overwhelms downstream processes.

For organizations evaluating agentic AI platforms, this suggests focusing on orchestration and integration capabilities over raw AI performance. The most sophisticated coding model provides little value if generated code creates testing bottlenecks or deployment failures. Platform engineering excellence—automated testing, robust CI/CD, comprehensive monitoring—determines whether AI acceleration translates to business value or operational chaos.

Developer skepticism challenges vendor narratives

Community reactions reveal significant tension between vendor promises and developer experience. The most striking counterpoint comes from the Model Evaluation & Threat Research (METR) study, which found experienced developers took 19% longer completing real tasks with AI tools—directly contradicting DORA’s productivity findings. The study’s 16 experienced open-source developers working on 246 real tasks with Cursor Pro and Claude models predicted 24% speedup, believed they achieved 20% speedup, but measured data showed 19% slowdown.
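The size of that perception gap is easier to see in minutes than in percentages. The sketch below applies the three figures quoted above to a hypothetical 60-minute task. The baseline duration is made up, and reading "speedup" as a reduction in completion time is an assumption about how the figures are defined.

```python
BASELINE_MINUTES = 60.0  # hypothetical task duration without AI


def minutes_with_change(baseline: float, percent_change: float) -> float:
    """Apply a percentage change in completion time (negative means faster)."""
    return round(baseline * (1 + percent_change / 100), 1)


predicted = minutes_with_change(BASELINE_MINUTES, -24)  # forecast before the task
perceived = minutes_with_change(BASELINE_MINUTES, -20)  # self-report afterwards
measured = minutes_with_change(BASELINE_MINUTES, +19)   # measured slowdown

print(predicted, perceived, measured)  # 45.6 48.0 71.4
```

Under these assumptions, a developer who believes the task took 48 minutes actually spent about 71, a gap of more than 23 minutes on a one-hour task.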

This “gap between perception and reality,” as METR researchers noted, suggests that measurement challenges plague AI productivity assessment. Gergely Orosz of The Pragmatic Engineer offered a potential explanation: “the learning curve of AI-assisted development is high enough that asking developers to bake it into their existing workflows reduces their performance while they climb that learning curve.” The disconnect between self-reported productivity gains (80% in DORA) and measured performance raises questions about whether teams are conflating activity with effectiveness.

Developer communities have responded with characteristic pragmatism. Limited organic discussion on Reddit and Hacker News suggests the report resonates more with organizational leaders than individual developers. Where discussions occur, the 30% trust gap dominates—developers acknowledge using AI while maintaining healthy skepticism about code quality. Simon Willison’s observation about the effort required to “learn, explore and experiment” without guidance resonates with practitioners struggling to integrate AI effectively.

Vendors leverage findings while sidestepping controversies

AI coding tool vendors have selectively embraced DORA’s findings in their messaging while avoiding uncomfortable implications. GitHub maintains alignment without direct commentary, emphasizing how their Copilot metrics echo DORA’s organizational capability focus. The disconnect appears in community tensions—The Register reports developers seeking “alternative code hosting options” due to “unavoidable AI,” suggesting forced adoption creates the friction DORA’s “clear AI stance” capability aims to prevent.

Anthropic has most aggressively leveraged DORA-aligned messaging, with Claude 4’s release featuring testimonials that mirror the report’s productivity themes. Cursor’s claim that Claude Opus 4 “excels at coding and complex problem-solving” directly addresses the trust gap, while Replit’s emphasis on “complex multi-file changes” speaks to enterprise-scale concerns. Tabnine’s concrete metrics (“20% increase in free-to-paid conversions” and “20-30% decrease in monthly customer churn”) provide the quantitative validation DORA’s framework demands.

Yet vendors conspicuously avoid addressing the autonomous agent adoption gap. Marketing materials emphasize productivity gains from assisted coding while glossing over the 61% who never use agent mode. This selective interpretation suggests vendors recognize market resistance to full automation despite their technical capabilities. The focus on “human-AI collaboration” and “AI pair programming” in vendor messaging implicitly acknowledges DORA’s trust paradox findings.

Academic silence speaks to methodological concerns

The research community’s limited response raises important questions about the report’s dramatic repositioning from 2024’s cautionary stance. Gene Kim’s acknowledgment of the “DORA 2024 anomaly” suggests internal recognition of this shift, yet detailed methodology remains largely inaccessible in the 440-page full report. The rapid pivot from “AI does not appear to be a panacea” (2024) to positioning AI as central to development (2025) invites scrutiny.

The Register’s observation that “Google is invested in this not happening” regarding AI adoption slowdown highlights potential bias concerns. While DORA maintains research independence, Google Cloud’s sponsorship and the company’s massive AI investments create inherent tensions. The report’s emphasis on platform engineering and internal capabilities aligns conveniently with Google Cloud’s service offerings, though the data-backed framework provides substantive justification.

Academic researchers appear to be taking a wait-and-see approach, with no significant critiques yet published in IEEE Software or ACM venues. The typical publication lag partially explains this silence, but the absence of immediate academic engagement on social media or preprint servers suggests researchers may be awaiting raw data access or conducting validation studies. The METR study’s contradictory findings hint at the replication challenges ahead.

Practical implications for agentic coding decisions

For technical practitioners evaluating agentic coding technologies, DORA’s findings offer clear guidance despite their contradictions. Start with organizational assessment against the seven capabilities before scaling adoption. Teams lacking strong version control, quality platforms, or clear AI policies will see problems amplified regardless of tool sophistication. The 61% never using agent mode suggests the market isn’t ready for full automation—focus on augmentation over replacement.

The trust paradox demands new approaches to code review and quality assurance. If 30% of developers distrust AI-generated code they’re using daily, traditional review processes need fundamental rethinking. Consider dedicated AI code review protocols, enhanced testing for AI-generated components, and metrics that separate human and AI contributions. The report’s finding of increased delivery instability despite productivity gains suggests teams need new stability metrics that account for AI-accelerated change volume.
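A metric that separates human and AI contributions could start as simply as a change failure rate (failed deployments divided by total deployments) computed per origin. The sketch below is a minimal illustration: the `Deployment` record, the `origin` labels, and the sample history are all hypothetical, and how a team would reliably label a change as AI-assisted is left open.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Deployment:
    origin: str   # hypothetical label: "ai_assisted" or "human"
    failed: bool  # True if the deployment needed a rollback or hotfix


def change_failure_rate_by_origin(deployments):
    """Return {origin: failed / total} for each origin label present."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for d in deployments:
        totals[d.origin] += 1
        if d.failed:
            failures[d.origin] += 1
    return {origin: failures[origin] / totals[origin] for origin in totals}


# Made-up sample data, for illustration only.
history = [
    Deployment("ai_assisted", False),
    Deployment("ai_assisted", True),
    Deployment("ai_assisted", False),
    Deployment("ai_assisted", True),
    Deployment("human", False),
    Deployment("human", False),
    Deployment("human", True),
    Deployment("human", False),
]
rates = change_failure_rate_by_origin(history)
print(rates)  # {'ai_assisted': 0.5, 'human': 0.25}
```

Tracking the two rates side by side shows whether instability is rising because of AI-generated changes specifically or because overall change volume has outgrown the review and test process.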

Investment priorities become clear through DORA’s lens. Platform engineering and data quality matter more than cutting-edge AI models. A mediocre AI with excellent platform integration outperforms sophisticated AI creating downstream bottlenecks. The seven capabilities provide a practical checklist: policy clarity, data quality, internal access, version control excellence, small batch discipline, user focus, and platform quality. Teams strong in these areas can adopt aggressively; those weak should strengthen foundations first.
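The checklist above can be turned into a rough self-assessment. The sketch below assumes a hypothetical 1-to-5 self-rating per capability and a cutoff of 3; neither the scale nor the threshold comes from the DORA report, and the identifier names are paraphrases of the seven capabilities.

```python
CAPABILITIES = (
    "policy_clarity",
    "data_quality",
    "ai_access_to_internal_data",
    "version_control",
    "small_batches",
    "user_focus",
    "platform_quality",
)


def capability_gaps(scores: dict[str, int], threshold: int = 3) -> list[str]:
    """Return capabilities scored below threshold, weakest first.

    Scores are hypothetical 1-5 self-assessments; missing entries count as 0.
    """
    gaps = [
        (scores.get(name, 0), name)
        for name in CAPABILITIES
        if scores.get(name, 0) < threshold
    ]
    return [name for _, name in sorted(gaps)]


# Made-up ratings for an example team.
team_scores = {
    "policy_clarity": 4,
    "data_quality": 2,
    "ai_access_to_internal_data": 1,
    "version_control": 5,
    "small_batches": 3,
    "user_focus": 4,
    "platform_quality": 2,
}
print(capability_gaps(team_scores))
# ['ai_access_to_internal_data', 'data_quality', 'platform_quality']
```

A team with an empty gap list is a candidate for aggressive adoption; a team with several gaps has its foundation work listed in priority order.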

The paradigm shift: treating LLMs as robots, not better compilers

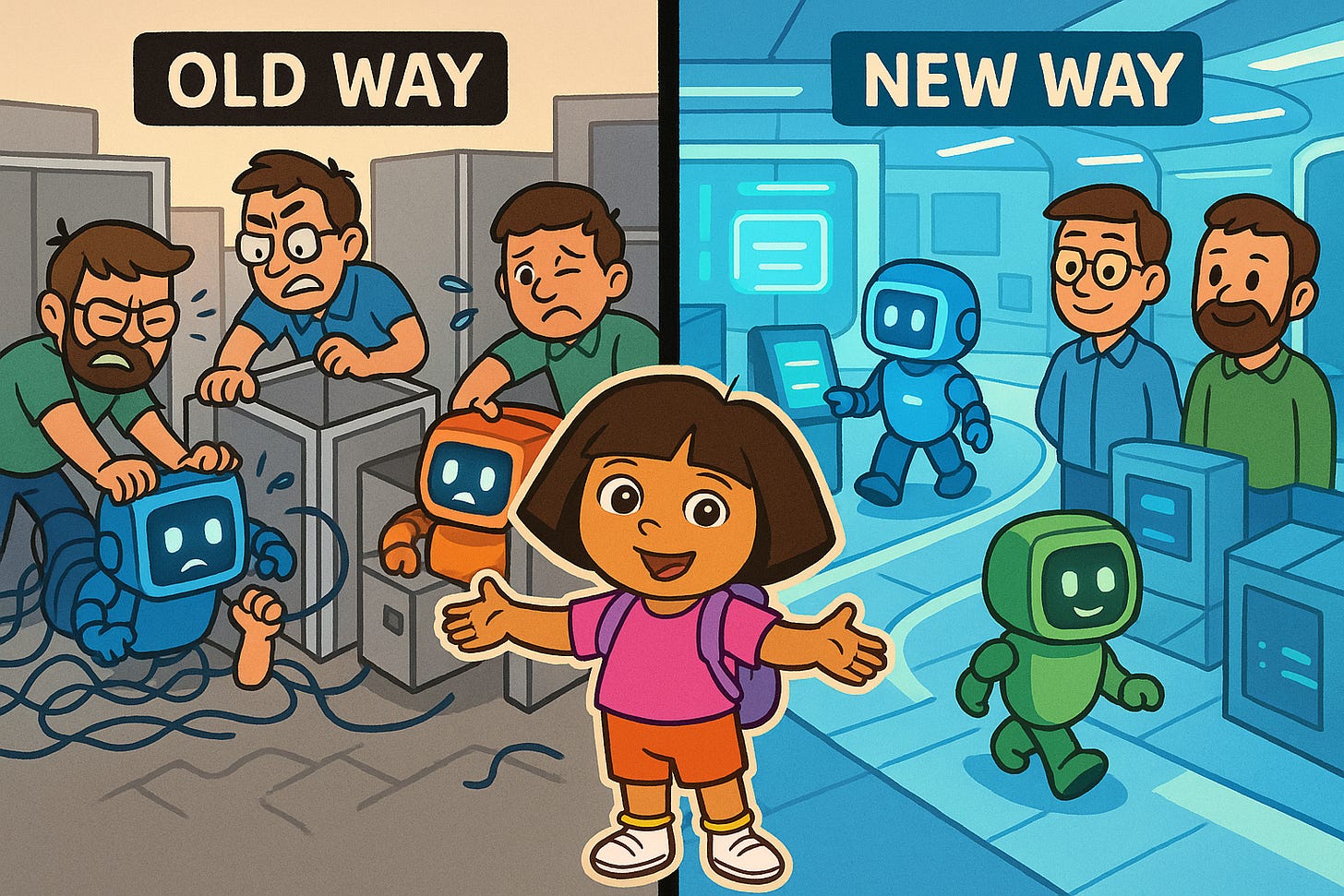

Here’s what DORA’s findings really point to, and what I’m starting to understand from months of wrestling with these tools: most developers approach LLMs as a coding problem when it’s actually an LLM-as-a-tool problem. We’re trying to make AI write better code when we should be restructuring how we work to make it easier for AI to help us.

Think about it. Every engineer I know evaluates AI tools based on code quality. “Does Claude generate better TypeScript than Cursor?” “Is GitHub Copilot’s autocomplete accurate?” But that’s the wrong question entirely. The right question: “How do I structure my development environment and process to be optimal for LLMs?”

Most coding environments—IDEs, complex project structures, human-centric workflows—aren’t just useless for LLMs. They’re actively harmful. IDEs are designed to help humans navigate complexity through visual interfaces, IntelliSense, and context switching. LLMs don’t need those crutches. They need clear, structured input and predictable patterns.

This explains the surge in CLI and terminal-based AI tools. It’s not nostalgia or developer hipsterism. Command-line interfaces are easier for LLMs to understand and work with. No GUI complexity, no visual context requirements. Just structured text input and output. When you think about it that way, of course terminal-based tools work better with AI.
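To illustrate what "structured text input and output" can mean in practice, the sketch below wraps a command result in a single line of JSON with stable keys, which is trivial for a program (or a model) to parse. The function name, the field names, and the `run-tests` command are all hypothetical; this is one possible shape, not a description of how any particular AI tool works.

```python
import json


def format_result(command: str, exit_code: int, stdout: str, stderr: str) -> str:
    """Serialize a command result as one line of JSON with sorted, stable keys."""
    payload = {
        "command": command,
        "ok": exit_code == 0,
        "exit_code": exit_code,
        "stdout": stdout.strip(),
        "stderr": stderr.strip(),
    }
    return json.dumps(payload, sort_keys=True)


# Made-up output from a hypothetical test runner.
line = format_result("run-tests", 1, "1 failed, 12 passed\n", "")
print(line)
```

The design choice is that every result has the same keys in the same order, so a consumer never has to infer success or failure from free-form text.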

The DORA study validates this without saying it directly. The “amplifier effect” isn’t just about organizational culture—it’s about development models. Teams succeeding with AI aren’t just using better tools. They’re restructuring their entire development approach around what works for LLMs. The teams struggling with AI are trying to bolt AI onto human-optimized processes.

Here’s my new working theory: to be effective coding with LLMs, you need to treat them as alien intelligences with different strengths and limitations. Not better humans. Different entities entirely. This means designing your development environment, file structure, documentation, and workflows around what makes LLMs effective, not what makes humans comfortable.

That philosophical shift changes everything. Instead of asking “How do I get better code from AI?” you ask “How do I make my problem easier for AI to solve?” Instead of evaluating tools on output quality, you evaluate them on how well they integrate with AI-optimized workflows.

The 17% autonomous agent adoption rate suddenly makes sense too. Most teams are trying to use autonomous agents within human-optimized development environments. Of course they’re not ready. The environment itself creates friction that makes autonomous operation unreliable.

Conclusion

The 2025 DORA report establishes AI’s role as an organizational amplifier, but the deeper implication is more radical: successful AI adoption requires rebuilding development paradigms from the ground up. The 90% using assisted AI versus 17% using autonomous agents isn’t a trust gap—it’s a paradigm gap. Most developers are trying to make AI fit human-optimized workflows instead of creating AI-optimized environments.

The report’s seven capabilities provide the organizational foundation, but the technical foundation requires treating LLMs as fundamentally different entities with different needs. Teams that make this mental shift—from “better human coding assistant” to “alien intelligence requiring specialized interface design”—will see the productivity gains DORA documents. Those clinging to human-centric development models will continue struggling with AI amplifying their dysfunction.

The path forward isn’t just organizational readiness. It’s paradigm readiness. And that’s a much bigger change than most teams realize they need to make.

Related Reading

The METR Study Actually Makes Sense - Deep dive into why experienced developers measured 19% slower with AI tools despite feeling faster.

AI vs Human Problem-Solving Shows - Analysis of the productivity perception gap and what it means for development teams.

AI Is Normal Tech That Behaves Abnormally - Why treating LLMs as different entities requires specialized development environments.