For months, I've been telling anyone who'd listen about my three rules for prompt engineering. They're simple, they work, and they've saved me countless hours of frustration:

Don't write your own prompts - Let the AI do what it does best

Put as much detail into instructions FOR the prompts as you can - Context is everything

Provide detailed instructions on HOW to work - Meta prompts, also written by LLMs

These rules served me well through dozens of client projects and my work building AI-powered tools. But recently, while developing the metric extraction system for AI Power Rankings, I stumbled onto something that made me question whether these rules go far enough.

What if you don't just have AI write your prompt, but have one AI train another AI's prompts automatically?

The Moment Everything Changed

When Claude Code started correcting GPT-4.1's misses—and improving its prompts automatically—I saw accuracy jump 30% without writing a single new instruction.

I was deep into building a system to extract quantifiable metrics from AI tool news articles. Think funding announcements, user growth numbers, performance benchmarks - the kind of data that powers meaningful rankings and analysis. My original approach followed the rules perfectly: detailed instructions to Claude about what constitutes a metric, examples of good extractions, clear formatting requirements.

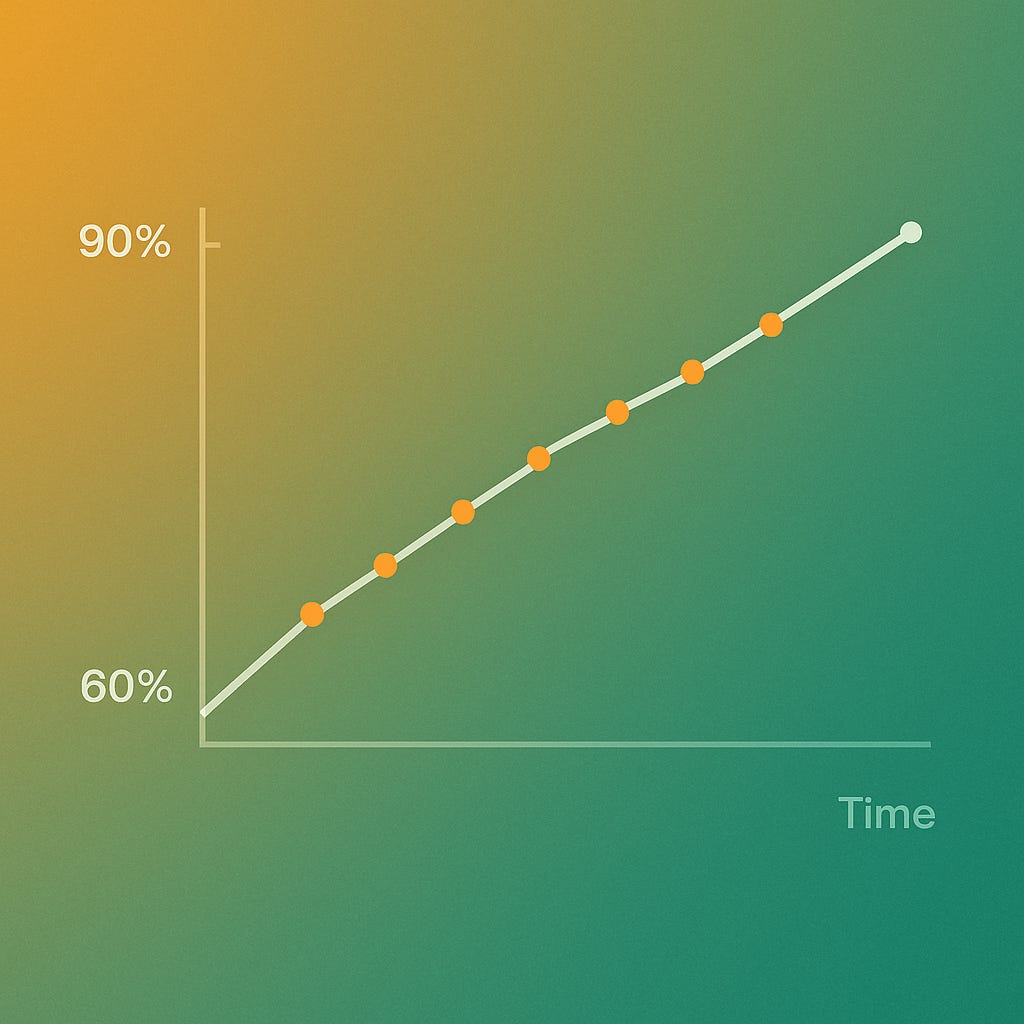

The initial results hit around 60-70% accuracy. Not terrible, but not production-ready either.

More importantly, the system kept missing nuanced cases. A $50M Series B round vs. $50M in ARR. GitHub stars vs. user counts. Performance metrics vs. adoption numbers. The kind of distinctions that matter when you're trying to build something people actually trust.

I could have spent weeks manually refining the prompts, adding more examples, tweaking the instructions. Instead, I tried something different. What if Claude Code could review GPT-4.1's extraction results and automatically improve the prompts?

That's exactly what happened. And it completely changed how I think about building with AI.

What Trainable Prompts Actually Deliver

The breakthrough wasn't just better accuracy - though jumping from 60-70% to 80-90% on complex extraction tasks changes everything. It was the compound nature of the improvements.

Cranking through months of articles in hours: My trained system now processes volumes I couldn't match manually. Each article costs roughly $0.01-0.05 to analyze with GPT-4, but the system handles scale I simply couldn't reach doing this by hand.

It started catching things I hadn't trained it to look for: Here's what surprised me most. The trained prompts don't just get better at the metrics I originally specified. They start recognizing patterns I hadn't thought to consider.

Funding metrics split naturally into seed, Series A, growth rounds. Performance benchmarks separate into speed, accuracy, and scale measurements. The system learned the domain faster than I could teach it.

Reliability that builds over time: Static prompts degrade. As the AI news landscape evolves, new terminology emerges, announcement formats change, and edge cases multiply. My trained system adapts without me touching the prompts. Each correction teaches it something new. Each improvement sticks permanently.

The approach proved its value quickly. The system learned to extract 20+ distinct metric types automatically, catching nuances that would be easy to miss manually.

Claude Code: The Training Teacher

Here's where it gets interesting. None of this would be practical if I had to manually provide feedback on every extraction. That's where Claude Code became the breakthrough - not just as a coding assistant, but as an AI trainer.

Here's how it actually works: GPT-4.1 extracts metrics from articles. Claude Code reviews those extractions and provides feedback. When GPT-4.1 misses a funding amount or misclassifies a performance metric, Claude Code catches it and automatically generates improved prompts through a LangChain-based feedback loop.

The economics make sense. Claude's not cheap—but I don't need it to be. I need it to make the cheap model smarter. I only need Claude Code for training feedback, maybe 2-3 times per week. GPT-4.1 handles the actual extraction work at pennies per article. Use the expensive AI to train the cheaper one, then let the cheaper one do the volume work.

Most importantly, I'm completely hands-off. The system runs automatically. Claude Code reviews results, identifies patterns in the errors, and updates the extraction prompts. I just check the dashboard to see accuracy climbing week over week.

The tool didn't just write code - it became the teacher that made the whole system work without me.

AI Power Rankings: The Proof Case

Building AI Power Rankings became the perfect test case for this approach. The challenge: extract meaningful metrics from the chaotic landscape of AI tool announcements, funding news, and performance claims.

Traditional approaches fail here. The terminology is evolving too quickly. Companies announce metrics in dozens of different formats. What counts as a "user" varies wildly between products. Static prompts can't keep up.

My trained system learned to navigate this complexity. It distinguishes between GitHub stars and active users. It recognizes when "95% accuracy" refers to model performance vs. uptime. It catches funding amounts whether they're announced as "$10M Series A" or "ten million dollar investment led by..."

The results speak for themselves:

20+ distinct metric types extracted automatically

90%+ accuracy on complex financial and technical metrics

Processing time measured in seconds per article, not hours

Hands-off operation as the AI landscape evolves

But the real win is insight generation. The trained system surfaces patterns I wouldn't have found manually. Which types of AI tools get funded most heavily? How do performance claims vary between categories? What language patterns predict successful launches?

This isn't just about automating existing analysis. It's about enabling analysis that wasn't practical before.

What the system does now—automatically:

Extracts funding, performance, and growth metrics across formats

Distinguishes between GitHub stars, users, and revenue figures

Adapts to new announcement styles without manual updates

Improves accuracy weekly through AI-to-AI training

Runs without human feedback or intervention

The Economics of AI Training AI

Let's be honest about the investment. Building a trainable prompt system isn't free. But the economics are more interesting than I expected.

Claude's not cheap—but I don't need it to be. I need it to make the cheap model smarter. The strategy: use expensive AI occasionally to train cheaper AI for ongoing work. Claude Code costs real money when I hit daily limits. But I only need it for feedback and training, maybe 2-3 times per week. GPT-4.1 handles the volume extraction at pennies per article.

The economics work out favorably. Processing costs stay low while training costs are occasional. The total is a fraction of what manual analysis would cost in time and effort.

But here's the key difference from traditional approaches: fully self-tuning means no ongoing human time. The AI-to-AI feedback loop runs automatically. Claude Code reviews extractions, identifies error patterns, generates improved prompts. I check the results, but I'm not providing the feedback.

The benefits compound without my input. Each training session makes GPT-4.1 more accurate across all future extractions. The error patterns Claude Code identifies improve the system holistically. But now the improvement happens without consuming my time.

This isn't just about saving money. It's about enabling analysis that scales beyond human capacity.

Beyond Extraction: Where This Approach Wins

Metric extraction was just the beginning. I'm seeing similar results in other complex AI tasks:

Content Analysis: Training systems to recognize writing patterns, sentiment shifts, and topic evolution in newsletter content. Static prompts miss the nuances. Trained systems learn to catch subtlety that matters.

Code Review: Teaching AI to understand project-specific coding standards and architectural patterns. The system learns your codebase's quirks and improves suggestions over time. No more generic advice that doesn't fit your context.

Market Research: Extracting competitive insights from earnings calls, product announcements, and strategic communications. The domain knowledge builds automatically as the system encounters new patterns.

The pattern is consistent. For complex, ongoing tasks where accuracy matters and the domain evolves, trainable prompts deliver step-change improvements over static approaches. You get results that compound rather than degrade.

When Static Still Wins

I'm not throwing out my original rules entirely. They still apply for most use cases. Quick content generation, simple analysis tasks, one-off projects - static prompts remain the right choice.

The overhead of building training systems only makes sense when you cross certain thresholds:

Volume: You're doing this work repeatedly over time Complexity: The task has many edge cases and nuances

Evolution: The domain changes frequently Accuracy: Mistakes are costly Scale: Manual refinement doesn't scale

For everything else, stick with the original approach. Don't write your own prompts. Provide detailed context. Use meta-prompts for complex workflows.

The New Framework

Here's how I decide now:

Stick with static prompts when:

The task is simple and well-defined

You need results immediately

The domain won't evolve significantly

Accuracy requirements are modest

You're doing this work occasionally

Invest in trainable prompts when:

The task is complex with many edge cases

You'll be doing this work repeatedly over time

The domain is evolving rapidly

High accuracy is essential

The cost of mistakes is significant

You're processing significant volume

The threshold is lower than you might think. If you're spending more than a few hours refining prompts for ongoing work, you should consider building a training system instead.

What This Actually Looks Like

The implementation is simpler than it sounds, but different from what I originally expected. You need three core components:

Extraction engine (GPT-4.1) that processes content and outputs structured results

Training AI (Claude Code) that reviews results and identifies improvement patterns

LangChain-based feedback loop that automatically generates and deploys improved prompts

The magic happens in the AI-to-AI interaction. GPT-4.1 extracts metrics from articles. Claude Code reviews those extractions, spots patterns in the errors, and generates better prompts through the LangChain framework. No human review required.

In my case, this happens automatically 2-3 times per week. Claude Code analyzes recent extractions, identifies where GPT-4.1 is struggling, and updates the prompts accordingly. Each improvement session boosts accuracy across all future extractions.

The key insight: expensive AI training cheaper AI removes the human bottleneck entirely. I check the dashboard to see accuracy trends, but the actual improvement happens without my involvement.

Real-World Impact

The AI Power Rankings system demonstrates this approach in practice. The AI-to-AI training loop has generated improvements I wouldn't have thought to implement manually:

GPT-4.1 learned to distinguish between GitHub stars and active users after Claude Code flagged the pattern

The system now catches funding amounts whether they're "$10M Series A" or "ten million dollar investment" after training sessions

Performance metrics get properly categorized (model accuracy vs. system uptime) without my intervention

But here's what really surprised me: the system learned to read between the lines. It finds metrics buried in technical blog posts, catches funding mentions in passing during interviews, spots user growth numbers hidden in company updates.

Claude Code taught GPT-4.1 to understand context and recognize patterns in ways my original static prompts never could. The compound returns happen automatically - each training session improves performance across all future extractions.

Most importantly, this runs without my daily involvement. I check accuracy trends weekly, but the actual improvement happens in the background. The AI-to-AI feedback loop scales without consuming my time.

Looking Forward

We're at an inflection point in how we build with AI. The first wave was about automating existing processes. The second wave is about creating processes that weren't practical before.

Trainable prompts represent this shift. They're not just more efficient ways to do what we were already doing. They enable entirely new approaches to complex problems.

As AI capabilities continue advancing, this distinction will matter more. Tools like Claude Code make sophisticated AI systems accessible to individual developers and small teams. The competitive advantage goes to those who can build systems that improve themselves over time.

The companies that figure this out first will have a significant edge. While others are still manually refining static prompts, they'll be operating self-improving systems that get better automatically.

Getting Started

My advice: start experimenting now. Pick a complex, recurring task where accuracy matters. Build a simple feedback loop. Let the system train itself for a few weeks. See what happens.

You don't need to build everything from scratch. Claude Code can generate most of the infrastructure you need. Focus on the domain expertise and feedback quality. Let the AI handle the technical implementation.

Start small. Pick one specific extraction task or analysis workflow. Build the training loop. Collect feedback for a month. Measure the improvement. Then decide whether to expand the approach.

You might discover, like I did, that your original rules were just the beginning.

The future belongs to systems that learn and improve over time. Static prompts got us started, but trainable prompts will take us where we need to go.

This approach has become central to how I build AI systems for my clients and my own projects like AI Power Rankings. The combination of human expertise and automated improvement creates results that neither could achieve alone.

Fixed a problematic copy and paste. Thanks Ophir!