Claude, Hyperdev, and the Rise of Multi-Agent Models

How building my own AI agent revealed that Claude is already running a team of its own.

I was deep in the guts of Hyperdev, an AI-first advisor I’m building to help developers reason about their own systems. It’s designed to be agentic—capable of analyzing a codebase, choosing tools, running evaluations, and then synthesizing a recommendation. The goal isn’t just to answer questions. It’s to *act like a smart team lead who’s read the code*.

While testing Hyperdev’s CLI reasoning path, I noticed a weird inconsistency: some paths spun up multiple OpenAI clients. Others didn’t. I asked the system to debug itself—and then for fun, I fed the same problem into Claude.

That’s when I saw this (my response after the caret, Claude’s after the bullet):

> That's clearly a problem. Interactive mode should use the exact same logic, there should be only one path. The difference between the two is UI. Same with terminal mode.

⏺ You're absolutely right. Having multiple separate implementations creates inconsistency and duplication, which is causing issues like the double OPENAI_API_KEY message.

⏺ Let's analyze how to consolidate these paths:

⏺ Task(Analyze the HyperDev codebase to determine the best approach for consolidating the different CLI and interactive modes. Currently, there are multiple separate implementations that all create their own OpenAIClient instances, causing duplication and inconsistency. …)

⎿ Done (5 tool uses · 60.7k tokens · 34.6s)

⏺ I agree with the agent's analysis. Let me outline a specific implementation plan to consolidate these paths:

> 1. First, let's create a session factory that will provide a single point for creating OpenAIClient instances...

At first glance, that just looks like a sophisticated assistant answering a technical question. But what’s happening under the hood tells a much deeper story.
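As an aside, the "session factory" idea in step 1 of that plan can be sketched in a few lines. This is a minimal illustration, not Hyperdev's actual code: the `OpenAIClient` class here is a stand-in, and `get_client`, `run_cli`, and `run_interactive` are hypothetical names. The point is only that every mode asks one function for its client, so the key is loaded once.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class OpenAIClient:
    """Stand-in for the real client class, which the post does not show."""
    api_key: str


@lru_cache(maxsize=None)
def get_client(api_key: str) -> OpenAIClient:
    """Single creation point: the same key always yields the same instance."""
    print("OPENAI_API_KEY loaded")  # runs once per key, not once per code path
    return OpenAIClient(api_key=api_key)


def run_cli(api_key: str) -> OpenAIClient:
    # Hypothetical CLI entry point: only the UI differs, the client does not.
    return get_client(api_key)


def run_interactive(api_key: str) -> OpenAIClient:
    # Hypothetical interactive entry point sharing the same factory.
    return get_client(api_key)


# Both modes end up holding the very same client object.
assert run_cli("sk-demo") is run_interactive("sk-demo")
```

Caching by key is just one way to get a single instance; passing one client object down from the entry point would work equally well.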

The Hidden Architecture: Claude is Multi-Agent

Let’s break this down:

- Claude analyzes the request.

- Delegates a specific task to a scoped worker agent.

- That agent uses tools—5 in this case.

- Another agent evaluates the output and agrees with the findings.

- Finally, the system returns a synthesized implementation plan.

That’s not a prompt. That’s a pipeline.

Claude just behaved like an **orchestrator**, coordinating specialized sub-agents, tools, and evaluators. That’s **multi-agent behavior**—a structured, internal delegation loop.

Which brings us to the real insight: this isn’t some future roadmap. **It’s already here**.

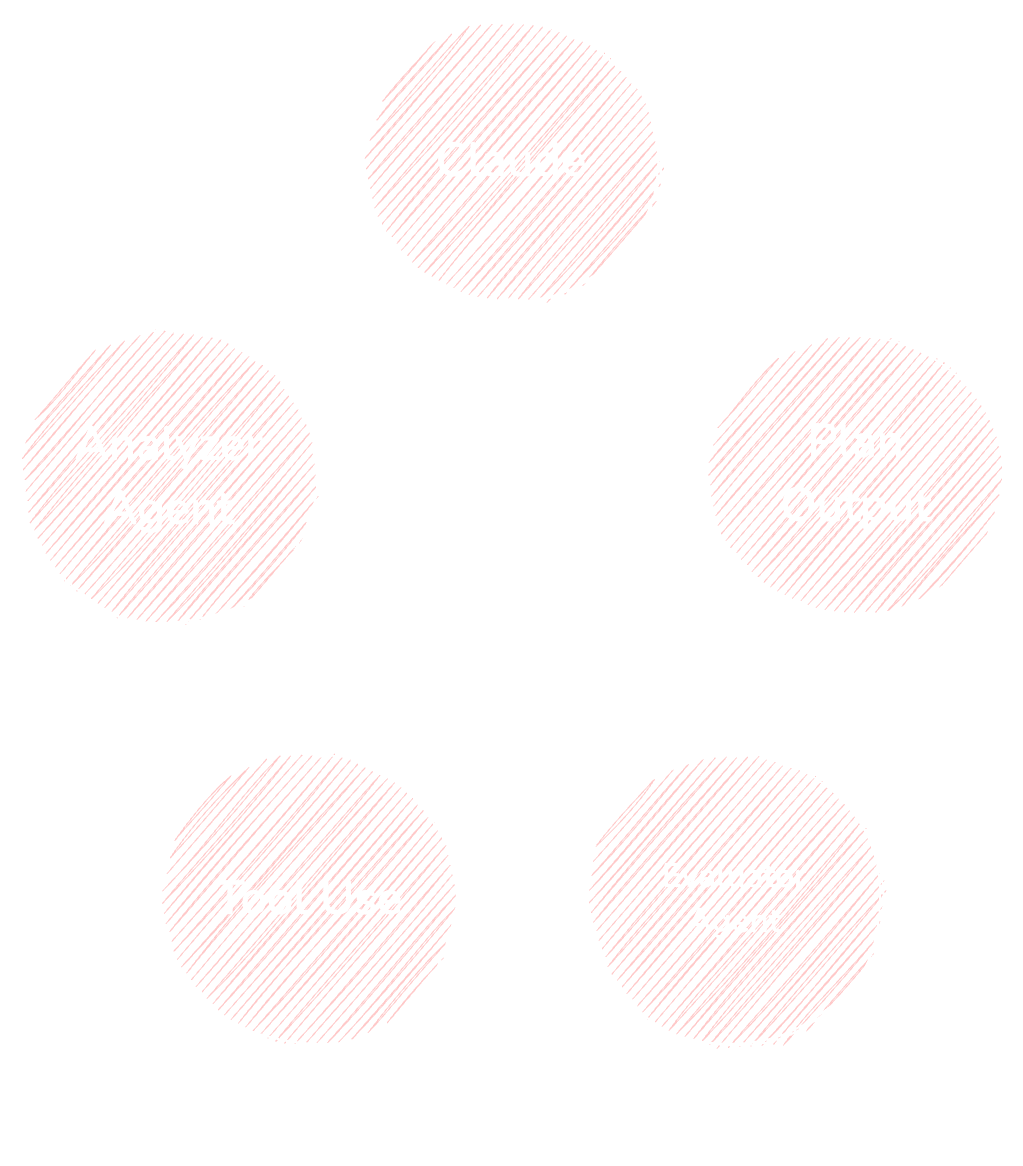

🔍 Claude’s Agentic Loop (Visualized)

Here’s a breakdown of what that flow could look like:

Each circle is a distinct agent or system component:

- Claude is the meta-agent: the interface and decision-maker.

- Analyzer evaluates the architecture.

- ToolUse represents autonomous execution (e.g., file scans, dependency graphs).

- Evaluator validates and synthesizes the findings.

- PlanOutput is the final consolidation blueprint.
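To make the roles above concrete, here is a rough sketch of the same loop in plain Python. It is my own toy model of the pattern, not a description of how Claude is implemented: `Worker`, `evaluate`, `orchestrate`, and the two tool functions are all hypothetical names, and the "tools" simply return canned strings.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TaskResult:
    findings: list[str]
    tool_uses: int


@dataclass
class Worker:
    """Scoped sub-agent: runs each of its tools on the task and collects findings."""
    tools: list[Callable[[str], str]] = field(default_factory=list)

    def run(self, task: str) -> TaskResult:
        findings = [tool(task) for tool in self.tools]
        return TaskResult(findings=findings, tool_uses=len(self.tools))


def evaluate(result: TaskResult) -> bool:
    """Evaluator: accept only if a tool ran and every finding is non-empty."""
    return result.tool_uses > 0 and all(result.findings)


def orchestrate(request: str, worker: Worker) -> list[str]:
    """Meta-agent: delegate, evaluate, then synthesize a numbered plan."""
    result = worker.run(f"Analyze: {request}")
    if not evaluate(result):
        raise ValueError("worker output rejected by evaluator")
    return [f"{i}. {finding}" for i, finding in enumerate(result.findings, start=1)]


# Hypothetical tools standing in for file scans and dependency graphs.
def scan_files(task: str) -> str:
    return "Found several code paths that each build their own client"


def map_dependencies(task: str) -> str:
    return "All of those paths can share one factory"


plan = orchestrate("consolidate CLI modes", Worker(tools=[scan_files, map_dependencies]))
```

Even in this toy form, the delegation, the evaluation gate, and the synthesis step are separate pieces of code, which is what makes each one observable and replaceable.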

Why It Matters

If you’re building tools like Hyperdev—systems that need to plan, introspect, and act intelligently—this shift changes everything.

Key Implications:

- Task Decomposition is Native

Stop thinking in terms of monolithic prompts. Modern models can internally split and assign tasks.

- Coordination Fidelity > Raw IQ

The quality of a model’s reasoning now depends on *how well it manages its own agents*, not just how big it is.

- Debugging Gets Messier

Observability now needs to include tool traces, agent logs, and action trees—not just prompts and completions.
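For the observability point, one possible shape for an "action tree" is a small recursive record that every agent turn, delegated task, and tool call appends to. The sketch below is an assumption about what such a structure could look like; `TraceNode` and its fields are hypothetical, and the token counts are made-up illustration values.

```python
from dataclasses import dataclass, field


@dataclass
class TraceNode:
    """One step in an action tree: an agent turn, a delegated task, or a tool call."""
    kind: str  # e.g. "agent", "task", "tool"
    label: str
    tokens: int = 0
    children: list["TraceNode"] = field(default_factory=list)

    def add(self, kind: str, label: str, tokens: int = 0) -> "TraceNode":
        """Attach a child step and return it so calls can be nested."""
        child = TraceNode(kind, label, tokens)
        self.children.append(child)
        return child

    def total_tokens(self) -> int:
        """Tokens spent by this step plus everything beneath it."""
        return self.tokens + sum(c.total_tokens() for c in self.children)

    def count(self, kind: str) -> int:
        """Number of steps of the given kind in this subtree."""
        own = 1 if self.kind == kind else 0
        return own + sum(c.count(kind) for c in self.children)

    def render(self, depth: int = 0) -> str:
        """Indented text view of the tree, one step per line."""
        lines = ["  " * depth + f"[{self.kind}] {self.label}"]
        lines += [c.render(depth + 1) for c in self.children]
        return "\n".join(lines)


# Illustrative values only.
root = TraceNode("agent", "orchestrator")
task = root.add("task", "analyze codebase", tokens=100)
task.add("tool", "read_file", tokens=40)
task.add("tool", "grep", tokens=25)
print(root.render())
```

A summary line like "5 tool uses · 60.7k tokens" is then just `count("tool")` and `total_tokens()` over a tree like this one.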

And here’s the real kicker: Claude didn’t expose any of this explicitly. But it *showed* it.

From Tool to Teammate

We’ve entered a new phase in AI systems: **they no longer behave like assistants—they behave like collaborators**. They route tasks, critique themselves, and propose structured plans.

That’s not “chat.” That’s team coordination.

If you’re building with AI—whether you're shipping dev tools, RAG apps, or workflow assistants—start designing for this reality:

- Architect for delegation

- Track agent state and role history

- Build observability into tool use and planning decisions

- Expect disagreement between sub-agents. Plan for arbitration
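Arbitration can start out very simple. As one hedged example, the function below applies a strict-majority rule over sub-agent verdicts and returns `None` when there is no majority, which a caller could treat as "escalate to a human or a stronger model". The `arbitrate` name and the agent labels are hypothetical.

```python
from collections import Counter
from typing import Optional


def arbitrate(votes: dict[str, str]) -> Optional[str]:
    """Return the verdict backed by a strict majority of agents, else None."""
    if not votes:
        return None
    verdict, count = Counter(votes.values()).most_common(1)[0]
    return verdict if count > len(votes) / 2 else None


decision = arbitrate({"analyzer": "approve", "evaluator": "approve", "reviewer": "reject"})
deadlock = arbitrate({"analyzer": "approve", "evaluator": "reject"})
```

Here `decision` is `"approve"` and `deadlock` is `None`. Weighted votes or a dedicated judge agent are obvious alternatives once plain majority stops being enough.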

Hyperdev’s architecture is already evolving in this direction. Claude’s session suggests that Claude itself is already there.

Final Thought

The future of AI isn’t a bigger brain, though there’s no question models will keep getting bigger. Those gains are far more expensive and will take longer to arrive.

It’s a better system of minds working together.

Claude gave us a glimpse of what that looks like—if you were paying attention.
