Anthropic quietly launched remote MCP server support for Claude Code this week (June 18), and it represents the most significant shift in AI development tooling since, well, the addition of local MCP support a few weeks ago (see the trend?). Unlike local MCP servers, which require developers to manage infrastructure, remote servers let vendors handle deployment, scaling, and maintenance while developers simply authenticate and connect.

This isn't just a convenience upgrade. Remote MCP fundamentally alters the economics of AI tool integration and positions Anthropic as an infrastructure provider rather than a model vendor.

What remote MCP servers actually enable

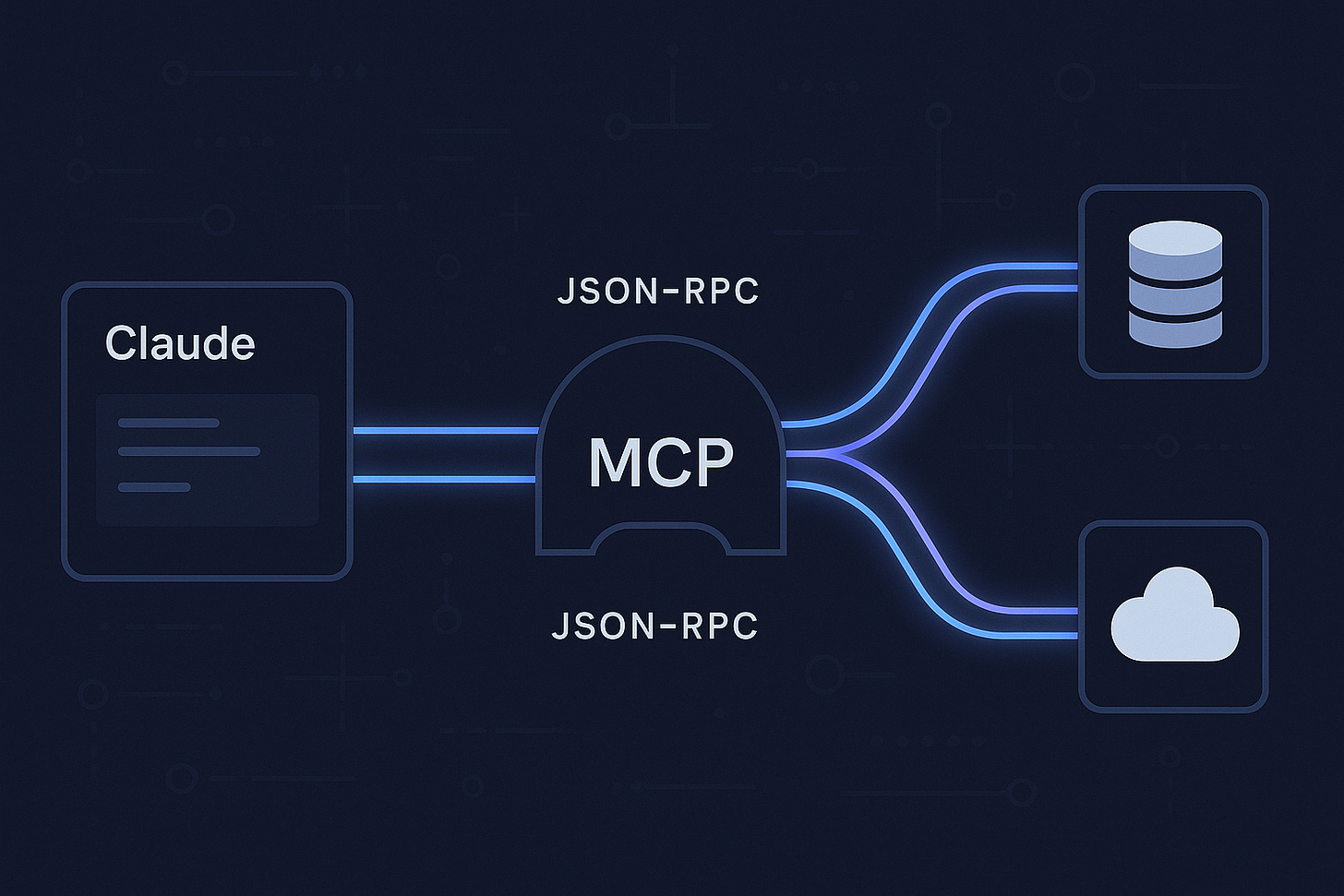

The Model Context Protocol already allowed Claude Code to connect directly to tools, databases, and external services through local server processes. Remote MCP extends this by moving server infrastructure to vendors, eliminating the setup complexity that has limited adoption.

Instead of installing and configuring MCP servers locally, developers now add vendor URLs to their Claude Code configuration and authenticate through OAuth 2.1. GitHub, Slack, database providers, and cloud services can offer direct Claude integration without requiring users to manage any local infrastructure.

The technical implementation uses the Streamable HTTP transport, which replaces the deprecated HTTP+SSE (Server-Sent Events) transport, with authorization built on OAuth 2.1. All communication requires HTTPS, and servers must return proper CORS headers to support browser-based clients. During initialization, client and server negotiate capabilities so both sides agree on which features are supported.
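To make the initialization step concrete, here is a minimal Python sketch of the first message a client sends to a remote server: a JSON-RPC 2.0 `initialize` request carrying the client's protocol version, capabilities, and identity, plus the HTTP headers such a request might travel with. The protocol revision string, client name, and token are illustrative assumptions, and a complete client would also send a `notifications/initialized` message after the server replies.

```python
import json

# Assumption: an illustrative MCP protocol revision string.
PROTOCOL_VERSION = "2025-03-26"


def build_initialize_request(request_id: int, client_name: str, client_version: str) -> dict:
    """Build the JSON-RPC 2.0 'initialize' request that opens an MCP session."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            # Optional features the client offers; empty means none.
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": client_version},
        },
    }


def build_http_headers(access_token: str) -> dict:
    """Headers a client might send with each HTTPS POST to a remote MCP endpoint."""
    return {
        "Content-Type": "application/json",
        # Streamable HTTP servers may reply with plain JSON or an SSE stream.
        "Accept": "application/json, text/event-stream",
        # Access token obtained through the OAuth authorization flow.
        "Authorization": f"Bearer {access_token}",
    }


def negotiated_capabilities(initialize_result: dict) -> set:
    """Return the capability names the server advertised in its reply."""
    return set(initialize_result.get("capabilities", {}).keys())


if __name__ == "__main__":
    request = build_initialize_request(1, "example-client", "0.1.0")
    print(json.dumps(request, indent=2))
    # A hypothetical server reply advertising tools and resources.
    reply = {"protocolVersion": PROTOCOL_VERSION, "capabilities": {"tools": {}, "resources": {}}}
    print(sorted(negotiated_capabilities(reply)))  # ['resources', 'tools']
```

The server's reply tells the client which optional features it may use for the rest of the session.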

Configuration shifts from complex to simple

Local MCP server setup required Node.js environment management, authentication token configuration, and troubleshooting connection issues. A typical GitHub integration looked like this:

```json
{
  "mcpServers": {
    "github": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-github"],
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "ghp_your_token_here"
      }
    }
  }
}
```

Remote servers reduce this to a URL and an OAuth flow:

```bash
claude mcp add --transport http github-remote https://api.github.com/mcp
```

The vendor handles updates, scaling, and availability. Developers authenticate once and gain access to full integration capabilities without managing infrastructure. This approach eliminates the Node.js version conflicts, path resolution issues, and environment variable management that plagued local setups.

Security model emphasizes vendor responsibility

Remote MCP shifts security responsibilities between developers and vendors. Vendors must implement OAuth 2.1 compliance, maintain secure infrastructure, and handle credential management. Developers retain control through fine-grained permissions and can revoke access without changing local configurations.
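OAuth 2.1 makes PKCE (Proof Key for Code Exchange, RFC 7636) mandatory for the authorization code flow, which is one reason developers no longer need to paste long-lived tokens into config files. The sketch below shows the client-side half in Python: generate a random `code_verifier`, then derive the S256 `code_challenge` sent with the authorization request. It uses only the standard library and is checked against the RFC's published test vector; it illustrates the technique and is not Claude Code's actual implementation.

```python
import base64
import hashlib
import secrets


def base64url_no_padding(data: bytes) -> str:
    """Base64url-encode bytes and strip '=' padding, as PKCE requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_code_verifier() -> str:
    """Create a high-entropy code_verifier (32 random bytes -> 43 characters)."""
    return base64url_no_padding(secrets.token_bytes(32))


def make_code_challenge(code_verifier: str) -> str:
    """Derive the S256 code_challenge: BASE64URL(SHA256(ASCII(code_verifier)))."""
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64url_no_padding(digest)


if __name__ == "__main__":
    # Test vector from RFC 7636, Appendix B.
    verifier = "dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk"
    print(make_code_challenge(verifier))  # E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM
```

The verifier stays on the client; only the challenge travels with the authorization request, so an intercepted authorization code is useless without it.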

Claude Code maintains its approval-required approach for tool execution, implements prompt injection protections, and provides audit logs of all MCP operations. However, organizations connecting to remote servers should establish additional monitoring, especially for production environments accessing sensitive systems.
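Organizations that want monitoring beyond Claude Code's built-in logs could wrap their own MCP tool invocations in an audit layer. The sketch below is hypothetical: `audited_call`, `SENSITIVE_SERVERS`, and the `invoke` callable stand in for whatever client code a team actually uses. It records each call, flags servers marked as sensitive, and logs failures as well as successes.

```python
import time
from typing import Any, Callable, Dict, List

# Hypothetical set of server names that reach sensitive systems.
SENSITIVE_SERVERS = {"prod-db"}


def audited_call(
    audit_log: List[Dict[str, Any]],
    server: str,
    tool: str,
    arguments: Dict[str, Any],
    invoke: Callable[[str, Dict[str, Any]], Any],
) -> Any:
    """Invoke a tool through `invoke` and append an audit record either way."""
    entry: Dict[str, Any] = {
        "timestamp": time.time(),
        "server": server,
        "tool": tool,
        "arguments": arguments,
        "sensitive": server in SENSITIVE_SERVERS,
    }
    try:
        result = invoke(tool, arguments)
        entry["status"] = "ok"
        return result
    except Exception as exc:
        entry["status"] = f"error: {exc}"
        raise  # Re-raise so the caller still sees the failure.
    finally:
        audit_log.append(entry)  # Runs on success and on failure.


if __name__ == "__main__":
    log: List[Dict[str, Any]] = []
    # A stand-in for a real MCP client call.
    audited_call(log, "prod-db", "run_query", {"sql": "SELECT 1"}, lambda t, a: [[1]])
    print(log[-1]["tool"], log[-1]["status"], log[-1]["sensitive"])  # run_query ok True
```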

The protocol includes built-in protections against common attack vectors, but the expanded surface area of remote connections introduces new considerations around vendor security practices and data handling policies.

Cost implications extend beyond token usage

MCP operations typically increase Claude API costs by 25-30% because of the additional context pulled from external sources. Remote servers can compound this: with less setup friction, teams are likely to connect more data sources.

Organizations should factor these costs into AI tooling budgets. A development team spending $200 monthly on Claude API usage can expect increases to $250-$260 after implementing comprehensive MCP integrations. Enterprise teams with higher usage patterns face proportionally larger cost impacts.
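The budget projection is a single multiplication: current monthly spend times one plus the overhead fraction. A minimal sketch, assuming the 25-30% overhead figure cited above; a $200 baseline works out to $250-$260.

```python
def projected_monthly_cost(base_cost: float, overhead: float) -> float:
    """Apply a fractional overhead (0.25 means 25%) to a monthly API spend."""
    return round(base_cost * (1 + overhead), 2)


if __name__ == "__main__":
    low = projected_monthly_cost(200, 0.25)
    high = projected_monthly_cost(200, 0.30)
    print(f"${low:.0f}-${high:.0f}")  # $250-$260
```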

However, remote servers eliminate infrastructure costs previously required for local MCP deployments. Teams no longer need to provision servers, manage updates, or handle scaling for MCP services. For larger organizations, this infrastructure offset may balance against increased API costs.
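Whether the infrastructure offset wins depends on the actual numbers. A minimal sketch, assuming a team can estimate the monthly hosting and maintenance cost of the MCP servers a remote vendor would replace; the figures in the example are hypothetical.

```python
def net_monthly_change(api_spend: float, overhead: float, infra_savings: float) -> float:
    """Extra API spend from MCP context minus infrastructure costs no longer paid.

    A negative result means the infrastructure offset outweighs the added API cost.
    """
    return round(api_spend * overhead - infra_savings, 2)


if __name__ == "__main__":
    # Hypothetical: $10,000/month API spend, 25% overhead, $1,500/month of retired MCP hosting.
    print(net_monthly_change(10_000, 0.25, 1_500))  # 1000.0
```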

Competitive positioning against established tools

Remote MCP addresses fundamental limitations in existing AI development tools. GitHub Copilot draws its context primarily from currently open files. Cursor provides excellent codebase understanding but requires its own VS Code fork. JetBrains AI Assistant works within the JetBrains IDE ecosystem but lacks extensible tool integration.

Claude Code with remote MCP can simultaneously access GitHub repositories, query production databases, analyze error logs from monitoring services, and deploy infrastructure changes—all through standardized protocols that work across different AI systems.

This vendor-neutral approach creates different strategic dynamics. Rather than each AI provider building proprietary integrations, MCP enables reusable servers that work with any compatible system. A GitHub MCP server functions equally well with Claude, future AI systems, or custom implementations.
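The vendor-neutral claim follows from the wire format: any MCP client invokes a server's tool with the same JSON-RPC `tools/call` message. A minimal Python sketch follows; the tool name `search_issues` and its arguments are hypothetical, since real servers advertise their own tools through `tools/list`.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP 'tools/call' request as JSON-RPC 2.0.

    Nothing in the message identifies which AI system is acting as the client.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool name and arguments.
    print(build_tool_call(7, "search_issues", {"query": "is:open label:bug"}))
```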

Enterprise adoption signals platform maturation

Early enterprise implementations suggest strong market reception. Block, Apollo, and other companies have deployed MCP for internal tooling integration. Development platforms including Zed, Replit, and Sourcegraph have implemented MCP support, indicating ecosystem momentum beyond Anthropic's products.

The roadmap includes enterprise features critical for broader adoption: SSO integration, role-based access control, centralized audit logging, and multi-tenant deployment options. These capabilities position MCP for regulated industries and large-scale organizational deployments.

Remote server support accelerates this timeline by reducing deployment friction. Enterprise teams can evaluate MCP capabilities without infrastructure provisioning, then scale to custom implementations as usage grows.

Technical limitations require consideration

Remote MCP introduces network dependencies that don't exist with local servers. Internet connectivity issues, vendor service outages, and regional latency variations can impact development workflows. Organizations should plan for offline scenarios and have fallback procedures.
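A fallback procedure can be as simple as retrying the remote server with exponential backoff, then switching to a local path such as a CLI command. The sketch below is hypothetical: `remote` and `fallback` are placeholders for whatever calls a team uses, and only `ConnectionError` and `TimeoutError` are treated as transient.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_fallback(
    remote: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try a remote call with exponential backoff, then switch to a fallback."""
    for attempt in range(attempts):
        try:
            return remote()
        except (ConnectionError, TimeoutError):
            # Wait 0.5s, 1s, 2s, ... between attempts; no wait after the last one.
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))
    return fallback()


if __name__ == "__main__":
    def flaky_remote() -> str:
        raise ConnectionError("vendor outage")

    # Skip real sleeping in the demo.
    print(call_with_fallback(flaky_remote, lambda: "local CLI result", sleep=lambda s: None))
```

Injecting `sleep` keeps the function easy to test without real delays.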

Local (stdio) MCP servers cannot be used from browser-based Claude, which limits them to desktop applications and CLIs such as Claude Code. Multi-user enterprise scenarios require careful configuration management since most MCP servers assume single-user access patterns.

The protocol is still evolving: it was first released in November 2024, and breaking changes remain possible as the specification matures. Organizations implementing MCP should plan for ongoing maintenance and potential migration requirements.
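Because MCP revisions are identified by date strings, a client can guard against breaking changes by checking the version the server selects during initialization. In the specification's lifecycle, the client proposes the latest revision it supports and the server answers with a revision it supports; if the client cannot speak that revision, it should disconnect. The revision list and function names below are assumptions for illustration.

```python
from typing import Sequence

# Assumption: the revisions this hypothetical client understands.
SUPPORTED_VERSIONS = ("2024-11-05", "2025-03-26")


class UnsupportedProtocolVersion(Exception):
    """Raised when the server answers with a revision the client cannot speak."""


def preferred_version(supported: Sequence[str] = SUPPORTED_VERSIONS) -> str:
    """Pick the newest revision; YYYY-MM-DD strings sort chronologically."""
    return max(supported)


def check_negotiated_version(server_version: str, supported: Sequence[str] = SUPPORTED_VERSIONS) -> str:
    """Accept the server's protocolVersion only if the client supports it."""
    if server_version not in supported:
        # The client should disconnect rather than guess at an unknown revision.
        raise UnsupportedProtocolVersion(
            f"server chose {server_version!r}; client supports {', '.join(supported)}"
        )
    return server_version


if __name__ == "__main__":
    print(preferred_version())                       # 2025-03-26
    print(check_negotiated_version("2024-11-05"))    # 2024-11-05
```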

Strategic implications for AI development

Remote MCP represents Anthropic's bid to establish infrastructure standards beyond model competition. By creating an open protocol that benefits from ecosystem growth regardless of AI model choice, Anthropic positions itself as the connectivity layer for AI-tool communication.

This approach could prove particularly valuable as model capabilities commoditize and differentiation shifts to integration quality and workflow optimization. If MCP becomes the industry standard for AI-tool communication, the resulting network effects would create durable competitive advantages.

Success depends on ecosystem adoption. Early indicators suggest positive momentum: major development tools implementing support, community enthusiasm producing dozens of servers for popular services, and enterprise interest in standardized AI integration approaches.

When MCP makes sense (and when it doesn't)

The 25-30% cost overhead raises an important question: when does MCP justify itself versus simpler alternatives?

Many development workflows already achieve Claude integration through standard APIs and command-line tools. GitHub's CLI, shell scripts with bearer tokens, and direct API calls work reliably without the complexity or cost overhead that MCP introduces. These approaches provide immediate value for routine development tasks.
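For comparison, the direct-API route needs nothing beyond an HTTP client and a token. This Python sketch prepares a GitHub REST API request that lists a repository's open issues using bearer-token authentication; the owner, repository, and token are placeholders, and the request is only built, not sent.

```python
import urllib.request


def build_issue_list_request(owner: str, repo: str, token: str) -> urllib.request.Request:
    """Prepare a GitHub REST API request that lists a repository's open issues."""
    url = f"https://api.github.com/repos/{owner}/{repo}/issues?state=open"
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )


if __name__ == "__main__":
    req = build_issue_list_request("octocat", "hello-world", "ghp_your_token_here")
    print(req.get_method(), req.full_url)
    # Sending it requires a real token and network access:
    # with urllib.request.urlopen(req) as resp:
    #     body = resp.read()
```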

MCP's advantages emerge in scenarios requiring deep contextual integration: multi-repository debugging sessions, legacy system analysis requiring historical context, or workflows combining multiple data sources simultaneously. The protocol excels when Claude needs to maintain state across tool interactions or correlate information from disparate systems.

For teams with established CLI workflows and API integrations, MCP represents evolution rather than revolution. The question becomes whether enhanced AI integration justifies the implementation complexity and ongoing costs.

Implementation recommendations

Organizations should evaluate MCP judiciously against existing workflow efficiency. Start with well-established remote servers for GitHub, cloud services, and monitoring tools only if current API-based approaches create significant friction.

Security considerations require vendor evaluation, especially for servers accessing sensitive systems. Implement monitoring for MCP operations, use fine-grained API permissions, and establish team guidelines for server approval processes.
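A server approval process can start as a reviewed allowlist enforced in tooling. The sketch below is hypothetical: `APPROVED_HOSTS` and `is_server_approved` are illustrative names, `mcp.example.com` is a placeholder host, and the check only requires HTTPS plus a host the team has vetted.

```python
from typing import AbstractSet
from urllib.parse import urlparse

# Hypothetical allowlist of remote MCP server hosts the team has reviewed.
APPROVED_HOSTS = frozenset({"api.github.com", "mcp.example.com"})


def is_server_approved(url: str, approved_hosts: AbstractSet[str] = APPROVED_HOSTS) -> bool:
    """Allow a remote MCP server only if it uses HTTPS and its host was reviewed."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in approved_hosts


if __name__ == "__main__":
    print(is_server_approved("https://api.github.com/mcp"))  # True
    print(is_server_approved("http://api.github.com/mcp"))   # False
```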

Budget planning should account for 25-30% increases in AI API costs while recognizing infrastructure savings from vendor-managed servers. Compare this against the productivity gains from existing CLI and API integrations before expanding MCP usage.

Teams evaluating MCP should test with non-critical systems first, establish configuration management practices, and plan for protocol evolution as the specification matures.

Looking ahead

Remote MCP support transforms Claude Code from an AI assistant into a connected development platform. The vendor-neutral protocol design enables ecosystem growth that benefits all participants while positioning Anthropic as an infrastructure provider for AI-tool communication.

Early adoption signals suggest this direction will accelerate. As more vendors offer remote MCP servers and additional AI systems implement protocol support, the network effects strengthen. Organizations implementing MCP now position themselves advantageously for the shift toward AI-integrated development workflows.

The question isn't whether AI development tools will become more connected. It's whether MCP becomes the standard protocol for that connectivity.
