Claude Code 1.0.60: The Native Agent Architecture I've Been Waiting For

And how Claude MPM now takes advantage of it.

Last week, Anthropic shipped Claude Code 1.0.60. The version bump looks incremental, but this update fundamentally changes how AI agents coordinate work. While everyone was debating tool migrations and pricing drama, Claude Code shipped proper subagent capabilities with context filtering.

This matters because it solves the token economics problem that made multi-agent development expensive and slow. Where 1.0.59 burned tokens with brute-force subprocess spawning, 1.0.60 can actually reduce token consumption while running multiple specialized agents.

The Subprocess Problem I've Been Complaining About

Here's what drove me nuts about previous Claude Code agent implementations: every subprocess inherited the entire conversation context. Spawn three agents with a 50,000-token conversation? You'd burn through 150,000+ tokens plus processing overhead. Each subprocess launch meant waiting 10+ seconds in complex environments.

I experienced this firsthand building claude-multiagent-pm. The token consumption was brutal. Every agent got my complete debugging history, irrelevant code discussions, and tangential research when they just needed to analyze specific functions or implement focused features.

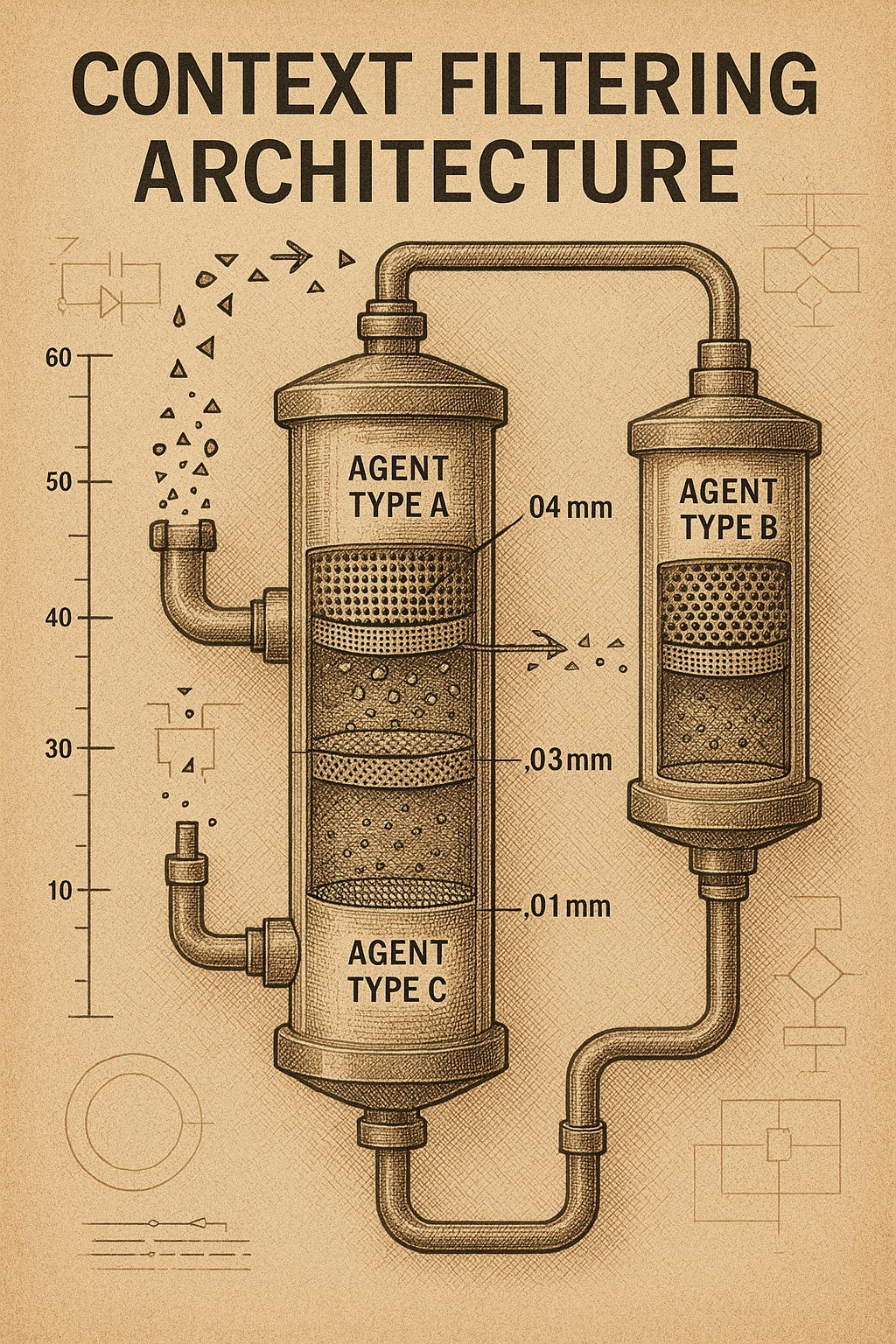

Version 1.0.60 changes this completely. Anthropic's documentation now confirms that subagents operate in independent context windows with tailored system prompts and tool access, preventing context pollution while keeping each agent focused on its domain. A security review agent analyzing bash commands gets maybe 500 tokens of relevant context, not my entire conversation. A documentation agent updating API specs gets function signatures and changes, not debugging session transcripts.

How Context Filtering Actually Works

The breakthrough isn't just efficiency—it's architectural. Instead of spawning processes that inherit everything, 1.0.60 uses what Anthropic confirms is an orchestrator-worker pattern. The main agent coordinates specialized subagents that operate in independent context windows with tailored system prompts and tool access.

Each subagent gets a filtered context containing only what's relevant to its task. The research agent analyzing authentication patterns gets function signatures and security requirements, not my debugging session from last Tuesday. The implementation agent gets the research results plus relevant code patterns, not the full conversation history.

Tool access filtering adds another layer. I can give a research agent read-only access while the implementation agent gets full editing permissions. All configured dynamically based on what the agent actually needs to do its job.

Before 1.0.60: Spawn agent → Pass 50K tokens → Wait 10+ seconds → Process with diluted focus

After 1.0.60: Dispatch agent → Filter to task-specific context → Sub-second launch → Focused processing

The Token Math That Actually Matters

In my testing with complex refactoring tasks, 1.0.60's native agents show roughly 70% token reduction compared to subprocess approaches. The overhead for agent creation is real—I'm seeing around 13K tokens per agent—but that's still dramatically better than full context inheritance.

Here's a real example from implementing authentication in a REST API:

1.0.59 subprocess: 45K tokens × 4 agents = 180K+ tokens

1.0.60 native agents: ~13K per agent × 4 agents = ~52K tokens

Still a significant improvement—roughly 70% reduction—but the overhead for agent creation is real. Each agent carries about 13K tokens of overhead even with context filtering, which is far better than the full context inheritance of subprocess spawning but not negligible.

The savings compound as conversations grow longer and projects get more complex. What previously became prohibitively expensive now scales efficiently. You can run multiple specialized agents throughout development cycles without token anxiety.

I've been rebuilding Claude-MPM to take advantage of these economics. Where claude-multiagent-pm required Claude Max subscriptions due to intensive token consumption, the 1.0.60 rewrite as Claude-MPM operates efficiently even on standard plans.

Rebuilding Claude-MPM for 1.0.60

I spent most of last week rewriting Claude-MPM to take advantage of these new capabilities. My previous framework, claude-multiagent-pm, was built on subprocess orchestration—functional but expensive. The 1.0.60 rewrite as Claude-MPM provides the same multi-agent coordination but with dramatically better economics.

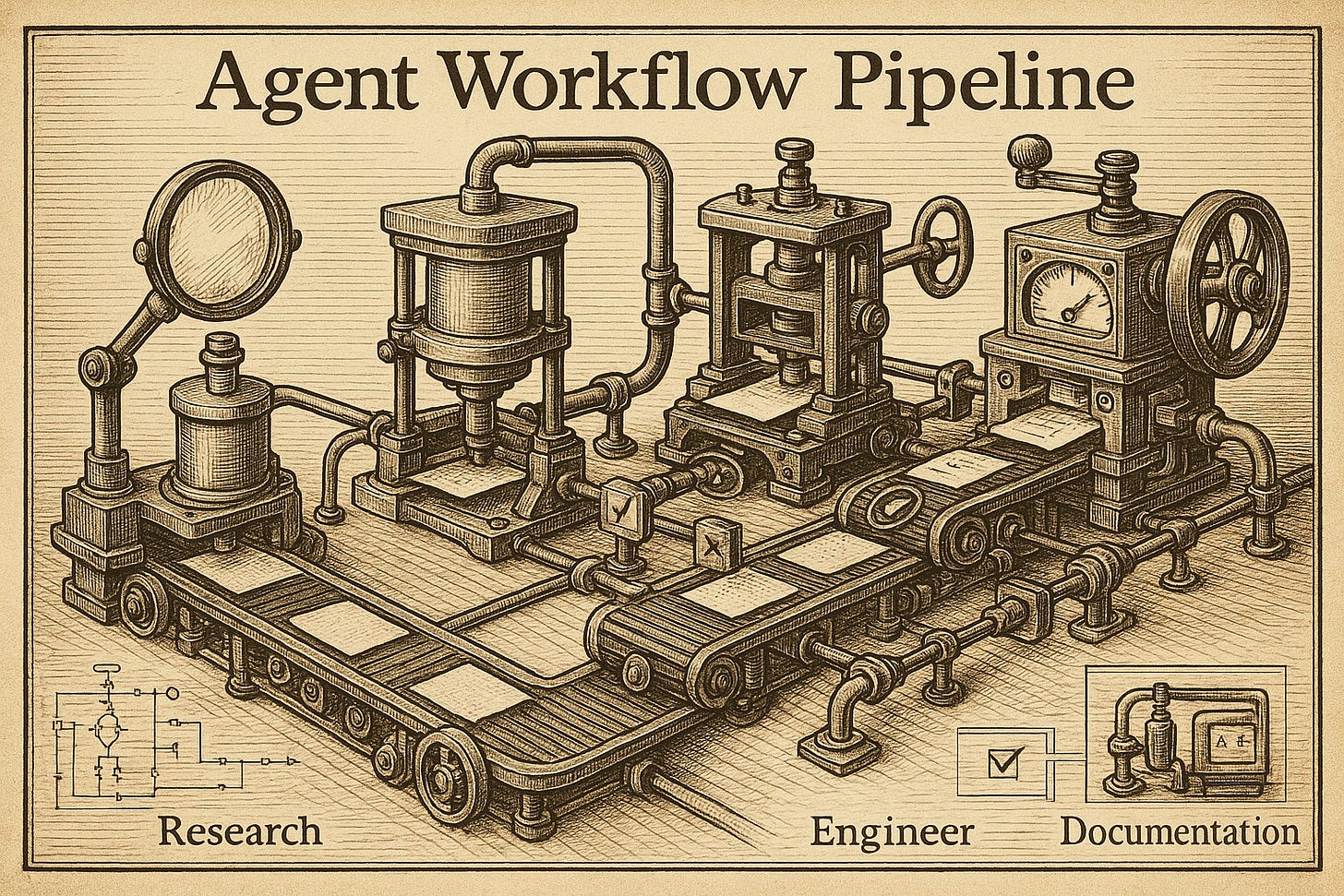

The agent ecosystem includes:

Research Agent: Codebase analysis using tree-sitter (41+ languages)

Engineer Agent: Implementation following research-identified patterns

QA Agent: Testing validation with sign-off authority

Security Agent: Security review and vulnerability assessment

Documentation Agent: Technical documentation and API docs

Ops Agent: Deployment, CI/CD, infrastructure management

Version Control Agent: Git operations and branch management

Data Engineer Agent: Database design and ETL pipelines

These aren't templates—they're production-ready agents with standardized JSON schemas, resource tier management, and tool assignment optimization. The three-tier hierarchy (project → user → system agents) means you can start with proven defaults while maintaining full customization.

Getting started is straightforward:

# Install via npm

npm install -g @bobmatnyc/claude-mpm

# Or via PyPI

pip install claude-mpm

# Start using immediately

claude-mpm # Interactive session with all agents available

What This Changes About Development Workflows

Context filtering enables workflows that were previously impractical. I can now run research-first development patterns where analysis agents examine codebases, identify integration points, and hand off precise specifications to implementation agents.

Here's my standard flow:

User Request → Research Agent → Engineer Agent → QA Agent → Documentation Agent

Each handoff includes only what's needed for the next stage. The Research Agent analyzes my codebase and creates a focused specification. The Engineer Agent receives that specification plus relevant code patterns—not my entire debugging history. The QA Agent gets the implementation plus test requirements, not the full conversation.

Quality gates become natural rather than forced. The QA Agent can refuse to sign off on implementations that don't meet testing standards. The Security Agent can block deployments with vulnerability concerns. The Documentation Agent ensures every feature ships with updated docs.

This isn't theoretical. I've been using this pattern for client work this week. The context isolation means each agent stays focused on its specific domain instead of getting distracted by irrelevant conversation history.

Performance Beyond Token Savings

The improvements extend beyond token optimization. Native agent spawning eliminates subprocess overhead, reducing latency from 10+ seconds to near-instantaneous agent creation. Direct terminal integration removes permission prompts and external process dependencies that used to interrupt my workflow.

The system implements intelligent summarization at multiple points. Subagents compress results before returning them to the parent agent, reducing the token footprint of inter-agent communication. Strategic use of lighter models for simple parsing tasks further optimizes consumption.

In testing complex research tasks, I'm seeing the kind of performance improvements Anthropic's research documented—90.2% better results on multi-faceted analysis compared to single-agent approaches. Parallel processing enables multiple agents to work simultaneously within separate context windows, effectively multiplying available context capacity.

What This Means for Developer Productivity

Version 1.0.60 represents more than an incremental improvement—it's the foundation for agent-first development where human developers orchestrate intelligent teams rather than writing code directly. The token economics now support workflows where agents handle research, implementation, testing, documentation, and deployment coordination autonomously.

The timing matters. While other AI coding tools face market corrections due to pricing controversies and quality concerns, Claude Code is delivering fundamental improvements that expand capabilities while reducing costs.

My immediate roadmap for Claude-MPM includes:

Personalized core instructions and workflow patterns

Integration with GitHub, Linear, and Jira for automatic ticket management

Custom agent creation tools for specialized domains

What Actually Changed Here

The difference between 1.0.59 and 1.0.60 might look minor in version numbering, but this update addresses the core limitation that made multi-agent development expensive and slow. Context filtering transforms agent orchestration from an expensive novelty into a practical development paradigm.

For teams ready to embrace agent-first development, 1.0.60 provides the architectural foundation. For those wanting to start immediately without custom configuration, Claude-MPM offers a curated ecosystem of production-ready agents built specifically for these new capabilities.

The version number might look minor, but when context filtering meets native performance, development workflows start looking different. This isn't just about improving existing approaches—it's enabling workflows that weren't practical before.

Related Reading:

The AI Coding Tools Market Correction - Understanding recent industry turbulence

I Hope Never To Use Claude Code Again - The evolution toward agent orchestration