I needed to build aipowerranking.com quickly. Not a prototype—a real product that tracks and ranks agentic AI coding tools monthly, like NBA power rankings for autonomous development assistants. The site analyzes everything from Cursor's $9.9B valuation to Claude Code's 72.7% SWE-bench score through a transparent, data-driven algorithm.

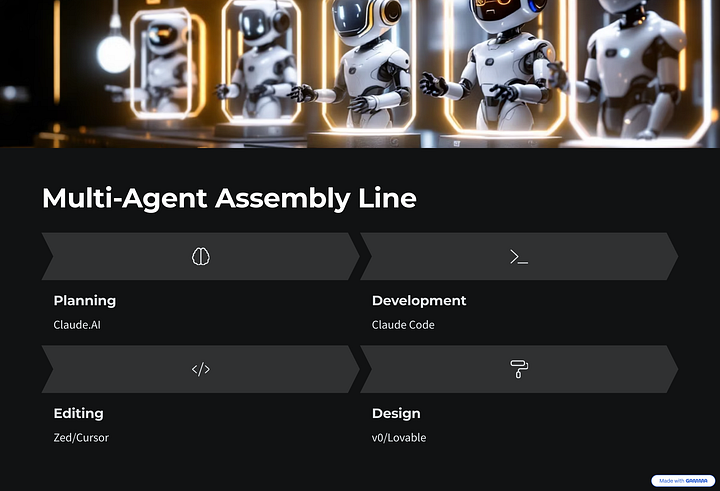

What made this interesting wasn't the end result, but the workflow. I used five different AI tools across distinct phases of development, each handling what it does best. This wasn't about finding the "perfect" tool—it was about orchestrating the right capabilities at the right moments.

Here's exactly how it worked.

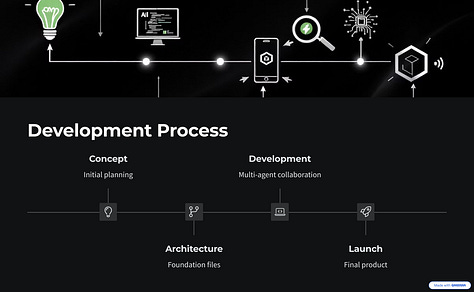

The Multi-Agent Assembly Line

Claude.AI handled requirements and the PRD. I fed it the concept and had it structure the product requirements document. Claude's strength here was thinking through edge cases and user flows I hadn't considered, and it asked the right questions about ranking methodology, data sources, and update frequency.

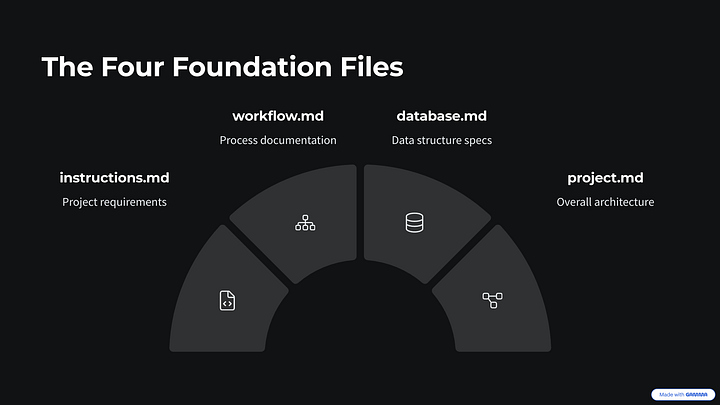

Claude Code (CC) took over scaffolding and core development. This is where my specific workflow kicks in. I maintain four key files that turn CC into a precision instrument:

- instructions.md: Stack-specific best practices and toolchain guidance
- workflow.md: PRD, ticketing, and project management structure
- database.md: Database access patterns and schema guidance
- project.md: Product goals plus architecture, API, and debugging specifics

I also maintain a CLAUDE.md that points to these files, which makes the whole setup portable across agents. Cursor, Augment, and Zed all follow these guidelines as intended. The difference was dramatic: instead of re-explaining context every session, any agent I work with already knows how I work.
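Because CLAUDE.md is only an index, it can be kept in sync with the four files mechanically instead of by hand. The sketch below is hypothetical: the actual CLAUDE.md isn't shown here, so the `docs/` paths, the header wording, and the `buildClaudeMd` helper are assumptions for illustration.

```typescript
// Hypothetical sketch: generate a CLAUDE.md that points agents at the four
// guidance files. The docs/ location and header text are assumptions.

interface GuidanceFile {
  path: string;    // relative path to the markdown file (assumed layout)
  purpose: string; // one-line description shown to the agent
}

const GUIDANCE_FILES: GuidanceFile[] = [
  { path: "docs/instructions.md", purpose: "Stack-specific best practices and toolchain guidance" },
  { path: "docs/workflow.md", purpose: "PRD, ticketing, and project management structure" },
  { path: "docs/database.md", purpose: "Database access patterns and schema guidance" },
  { path: "docs/project.md", purpose: "Product goals plus architecture, API, and debugging specifics" },
];

// Build the CLAUDE.md body: a short header plus one bullet per guidance file.
function buildClaudeMd(files: GuidanceFile[]): string {
  const lines = [
    "# CLAUDE.md",
    "",
    "Read these files before making changes:",
    "",
    ...files.map((f) => `- ${f.path}: ${f.purpose}`),
  ];
  return lines.join("\n") + "\n";
}

console.log(buildClaudeMd(GUIDANCE_FILES));
```

Writing the result to disk (for example with Node's `fs.writeFileSync`) keeps one canonical index; since it's plain markdown, any agent that reads the file sees the same pointers.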

I mixed in some Zed and Cursor for variety. Honestly, this was more about workflow rhythm than technical necessity. Sometimes you need to break up the AI assistance with direct coding, which keeps you grounded in what the tools are actually producing.

The Design Challenge: Where Things Got Interesting

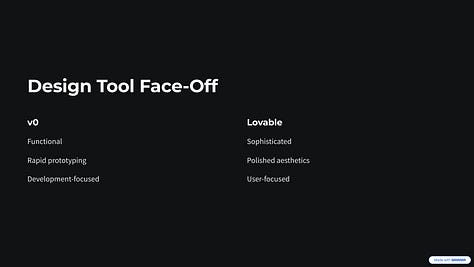

Once I had a functional site, I faced the classic problem: making it look professional. I exported the basic site and fed the same design prompt to both v0 and Lovable, asking them to build pages based on my running preview.

Lovable destroyed v0 on pure design creativity. Same prompt, dramatically different results. Where v0 gave me competent but predictable layouts, Lovable delivered sophisticated color schemes, thoughtful typography, and layouts that actually looked modern. The difference wasn't subtle; it was night and day.

But here's the critical insight: neither tool could successfully build on top of existing code. They excel at greenfield design work. Trying to retrofit their output onto my existing codebase created more problems than it solved.

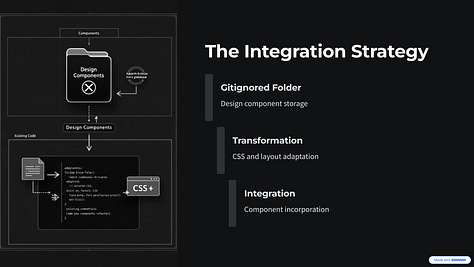

The Integration Strategy That Actually Worked

Instead of forcing integration, I let Lovable build everything fresh in its own environment. Then I created an "incoming" folder in my main project: gitignored, and holding only the components Lovable had designed.
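Keeping the staging folder out of version control is a one-line `.gitignore` entry. The helper below is a hypothetical sketch, assuming the folder sits at the repository root and is named `incoming`; it appends the entry only when no existing line already covers that folder.

```typescript
// Hypothetical helper: make sure a .gitignore body ignores the "incoming"
// staging folder that holds design-tool output awaiting migration.

const INCOMING_ENTRY = "/incoming/"; // assumed: root-level folder named "incoming"

// Patterns that already ignore a root-level "incoming" directory.
const COVERING_PATTERNS = new Set(["incoming", "incoming/", "/incoming", "/incoming/"]);

// Return .gitignore content that ignores the incoming folder,
// leaving the input unchanged if it is already covered.
function ensureIncomingIgnored(gitignore: string): string {
  const alreadyIgnored = gitignore
    .split(/\r?\n/)
    .some((line) => COVERING_PATTERNS.has(line.trim()));
  if (alreadyIgnored) return gitignore;

  // Make sure existing content ends with a newline before appending.
  const base = gitignore.length === 0 || gitignore.endsWith("\n") ? gitignore : gitignore + "\n";
  return `${base}# Design-tool output staged for migration (not committed)\n${INCOMING_ENTRY}\n`;
}
```

Calling it twice is harmless, since the second call finds the entry and returns the content unchanged. The point of ignoring the folder is that raw design-tool output never lands in git history; only the migrated versions do.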

Then I brought Claude Code back in for the migration. I pointed it at both codebases and said: "Take this design approach and apply it to this existing architecture." First the CSS, then the layout structures, then the component hierarchy.

This was more work than direct integration, but the results were substantially better. CC understood my existing patterns and could adapt the new design language without breaking functionality. It's the difference between translation and transplantation.

Production Polish: The Details That Matter

Subscriptions and validation were straightforward additions: a few hours of prompting with standard patterns.

Internationalization was probably unnecessary, but I'm a sucker for i18n. Having the site display in Korean or Japanese felt too cool to skip, even if it added complexity. Claude.AI handled the language strings, and GPT-4 double-checked them.
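A second model reviewing wording doesn't catch keys that were never translated at all, but a mechanical check does. The sketch below is hypothetical: it assumes nested, JSON-style message dictionaries (a common shape for i18n setups) with made-up sample keys, and it checks only key coverage, not translation quality.

```typescript
// Hypothetical locale check: report keys present in the base (English)
// dictionary but missing from a translation, and vice versa.
// The nested message shape and sample strings are assumptions.

type Messages = { [key: string]: string | Messages };

// Flatten nested messages into dotted keys, e.g. { nav: { home } } -> "nav.home".
function flattenKeys(messages: Messages, prefix = ""): string[] {
  return Object.entries(messages).flatMap(([key, value]) => {
    const path = prefix ? `${prefix}.${key}` : key;
    return typeof value === "string" ? [path] : flattenKeys(value, path);
  });
}

interface ParityReport {
  missing: string[]; // in base, absent from translation
  extra: string[];   // in translation, absent from base
}

function compareLocales(base: Messages, translation: Messages): ParityReport {
  const baseKeys = new Set(flattenKeys(base));
  const translatedKeys = new Set(flattenKeys(translation));
  return {
    missing: Array.from(baseKeys).filter((k) => !translatedKeys.has(k)).sort(),
    extra: Array.from(translatedKeys).filter((k) => !baseKeys.has(k)).sort(),
  };
}

const en: Messages = { nav: { home: "Home", rankings: "Rankings" }, footer: "Updated monthly" };
const ko: Messages = { nav: { home: "홈" }, footer: "매월 업데이트" };

// Reports "nav.rankings" as missing from the Korean dictionary.
console.log(compareLocales(en, ko));
```

Running a check like this for each locale before a deploy flags gaps early, leaving the model-based review to focus on whether the existing strings read naturally.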

Database versioning remains unsolved. I spent hours prompting different approaches, but nothing clicked. I'll probably shell out $100/month for Supabase's versioning feature unless I find something better. Still not convinced I needed a normalized database for this project, but we'll see how it scales.

What This Workflow Reveals

Tool switching isn't inefficiency; it's optimization. Each AI tool has distinct strengths. Claude.AI excels at structured thinking and requirements. CC dominates at implementing within established patterns. Lovable crushes creative design work. The overhead of context switching was minimal compared to the quality gains.

Architecture documents are force multipliers. Those four markdown files turned CC from helpful to indispensable. The specificity of guidance let it make decisions that aligned with my broader technical vision.

Design-to-code integration is still broken. The tools that create beautiful interfaces aren't the tools that implement them well in existing codebases. Until that changes, the "incoming folder + migration" pattern is your best bet.

Greenfield design, then strategic migration. Don't try to force design tools to work within your existing constraints. Let them run free, then use implementation tools to adapt their output to your reality.

The Real Test

Check out the about page for the full technical stack. The site does exactly what I wanted: provides transparent, data-driven rankings of agentic coding tools with clear movement analysis and market insights.

More importantly, the development process revealed something valuable about multi-agent workflows. We're not looking for one tool that does everything. We're orchestrating specialized capabilities across the development lifecycle.

That's the real story here. Not that I built another website, but that the toolchain for complex development work is becoming genuinely collaborative between humans and multiple AI agents, each handling what it does best.

Which, when you think about it, is exactly how human development teams work.

Bottom line: Multi-agent development workflows aren't the future; they're the present. The question isn't whether to use multiple tools, but how to orchestrate them effectively.

Check out AI Power Rankings to see how agentic coding tools actually stack up against each other, with transparent methodology and monthly updates. No hype, just data.