The AI revolution keeps getting postponed. Not because the technology doesn't work—it clearly does—but because it works in ways that make you question your own sanity.

Narayanan and Kapoor's April 2025 paper sparked a huge academic fight by calling AI "normal technology." After eight months of daily use across multiple AI coding tools, I think they're right. Sort of.

AI follows ordinary adoption patterns. Gets constrained by implementation realities. Requires human adaptation. Classic technology trajectory.

But here's what the academics missed: when your "normal" technology randomly decides your YAML means something different each time you run it, you need a lot more human oversight than usual. Plus there's the obvious speed of adoption and the vast sums of money flowing in. This isn't crypto hype. It feels closer to 2002, but at 100x scale.

The Academic Cage Match

The paper dropped right into Silicon Valley's superintelligence fever dream. The response was immediate and angry.

The AI Futures Project published a rebuttal arguing adoption speed proves AI's revolutionary nature. Fair point: 76% of doctors now use ChatGPT for clinical decisions. Legal AI adoption jumped from 19% to 79% in one year. Those aren't gradual curves.

But adoption speed isn't transformation speed. When I started using Claude Code eight months ago, it felt game-changing. Fast debugging, smart suggestions, context that actually worked. Now? I spend a considerable amount of time wrestling with inconsistent behavior. And the biggest frustration is that it almost feels like a gambling streak. Sometimes the code just flows, other times I'm hacking away at stone.

Look past the academic jargon and you see a fight about worldviews. The "normal technology" camp thinks institutional barriers slow things down naturally. The "disruptive" camp says AI sneaks past all that through individual adoption—your doctor using ChatGPT without asking permission first.

After months in the trenches, both sides miss the point.

Technical Reality Check

The numbers don't match the hype. AI accuracy varies by up to 15% even at temperature=0. Experienced developers are 19% slower with AI tools despite feeling faster. 90% of AI computing energy goes to data movement, not actual computation.

Recent arXiv research documents something every developer knows: even at temperature=0—supposedly deterministic settings—large language models show accuracy variations up to 15% across identical runs. Performance gaps between best and worst outputs reach 70% for the same inputs.
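One way to see this kind of variation for yourself is to score the same prompts several times and compare the runs. The sketch below assumes you already have per-run pass/fail results as lists of booleans. The function name `run_spread` and the sample data are hypothetical and are not taken from the cited research.

```python
def run_spread(runs: list[list[bool]]) -> dict[str, float]:
    """Summarize accuracy across repeated runs of the same prompts."""
    if not runs or any(len(run) == 0 for run in runs):
        raise ValueError("need at least one non-empty run")
    # Accuracy of a run = fraction of prompts answered correctly.
    accuracies = [sum(run) / len(run) for run in runs]
    return {
        "min": min(accuracies),
        "max": max(accuracies),
        "spread": max(accuracies) - min(accuracies),
    }

# Hypothetical data: three runs over the same four prompts.
runs = [
    [True, True, False, True],
    [True, False, False, True],
    [True, True, True, True],
]
summary = run_spread(runs)
```

A truly deterministic system would give a spread of zero here. Any nonzero spread on identical inputs is the behavior the research describes.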

None of the frontier models deliver consistent results. GPT-4, Claude, Llama-3: all exhibit this behavior. This isn't a bug you can patch. It's how these systems are architected.

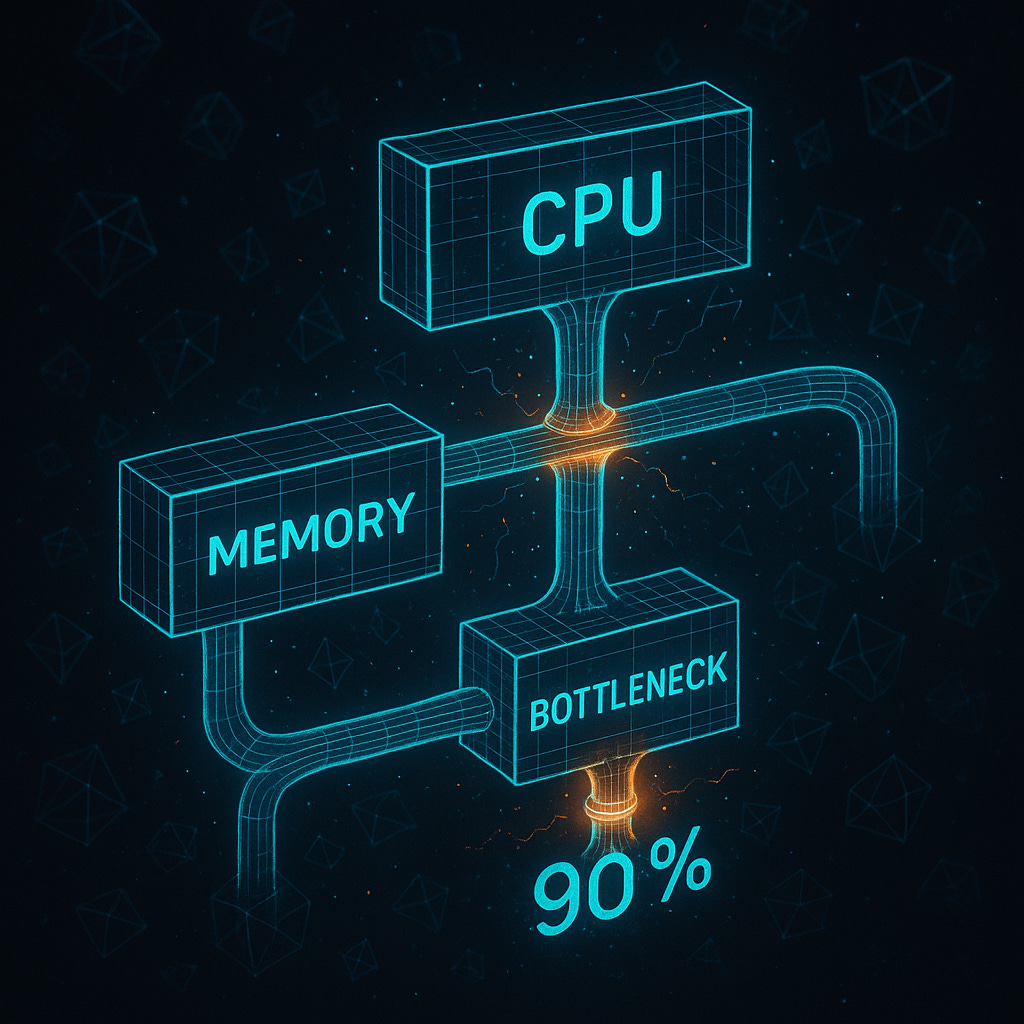

The von Neumann bottleneck creates another wall. Memory and compute units live in different places. AI workloads spend 90% of energy moving data around instead of computing. Larger models make this worse, not better.

The Productivity Mirage

The biggest blow to productivity hype? Actual controlled studies instead of marketing surveys.

METR's randomized controlled trial with experienced developers delivered a gut punch. The study used 16 open-source developers with 5+ years of experience on mature repositories. AI tools caused a 19% slowdown in task completion.

Here's the kicker: participants predicted 24% speedups beforehand and believed they had achieved 20% speedups afterward. They still thought they were 20% faster even while taking longer to complete tasks.
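To get a feel for the size of that perception gap, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 100-minute task and reads both "19% slowdown" and "20% speedup" as percentage changes in completion time. That reading is my assumption, not the study's exact methodology.

```python
def with_ai_minutes(baseline_minutes: float, change_pct: float) -> float:
    """Time with AI; change_pct > 0 means slower, change_pct < 0 means faster."""
    return baseline_minutes * (100 + change_pct) / 100

baseline = 100.0                            # hypothetical task time without AI
measured = with_ai_minutes(baseline, 19)    # the measured 19% slowdown
believed = with_ai_minutes(baseline, -20)   # the 20% speedup participants reported
gap = measured - believed                   # minutes of unnoticed extra time

print(f"measured: {measured:.0f} min, believed: {believed:.0f} min, gap: {gap:.0f} min")
```

Under those assumptions, a developer who thinks the task took 80 minutes actually spent 119.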

Google's DORA report surveyed 39,000 technology professionals. Individuals report 2.1% productivity increases per 25% AI adoption. But system-level delivery throughput decreases 1.5% and stability drops 7.2%.

AI makes individuals feel more productive while degrading overall performance. Only 39% of developers trust AI-generated code.

Experience level determines everything. Novice developers consistently show 35-39% productivity improvements. Experienced developers see 8-16% gains or actual losses. Pattern holds across domains: customer service sees gains for routine tasks, but complex software engineering shows minimal or negative impact.

Then there are the costs. MIT research documents AI-generated code creating 4x more code cloning and technical debt that shows up months later. McDonald's ended its IBM AI drive-thru partnership after the system added 260 Chicken McNuggets to orders.

Human Judgment Survives

A systematic review examining 35 peer-reviewed studies found that 27.7% of users show reduced decision-making abilities due to over-reliance on AI systems. Experts including physicians and financial analysts show surprising susceptibility despite domain expertise.

Automation bias screws people up in sneaky ways. Skill degradation emerges as professionals lose proficiency in delegated tasks. They develop false confidence in AI capabilities while experiencing cognitive laziness when assistance is readily available.

All the research points in the same direction: humans and AI work better together than either works alone.

The Investment Reality Check

Looking at the money tells you everything you need to know: AI investment is a massive bubble that can't last. $100+ billion in global venture funding during 2024 represents 33% of all venture capital. 71% of Q1 2025 venture funding flowed to AI companies.

Single deals dominate. OpenAI's $157 billion valuation. xAI's $50 billion valuation. 19% of all 2024 funding went to billion-dollar rounds.

Here's the problem: no profitable AI model provider exists despite massive revenue growth. OpenAI lost $5 billion in 2024 and expects $8+ billion losses in 2025. Anthropic posted $5.3 billion losses in 2024.

These companies operate as pass-through entities. Funding flows from startups to model providers to cloud providers. Inference costs consume 50-164% of revenues.

Goldman Sachs warns tech giants plan ~$1 trillion in AI infrastructure capex with "little to show for it so far." Morgan Stanley calculates $520 billion in new revenues needed by 2028 to justify current investments.

Gartner positions generative AI in the "Trough of Disillusionment" as of 2025. Less than 30% of AI leaders report CEO satisfaction despite average spending of $1.9 million per organization.

The Problem with Probabilistic Systems

Here's what Narayanan and Kapoor got right: AI is powerful technology following ordinary adoption patterns, constrained by implementation realities and human adaptation needs. But they missed something.

AI systems work differently than anything we've built before. When they fail, they don't just break—they convince you they worked.

I spent three hours last week chasing a bug that shouldn't exist. Not because the code was wrong, but because Claude Code kept reading my agent configuration differently each time I ran it.

I had a simple frontmatter list in my agent configuration:

tools: Read, Grep, Glob, LS, Bash, TodoWrite , WebSearch, WebFetch

See that extra space after TodoWrite? Claude Code would read that list and decide my agents weren't allowed to use any of those tools. The configuration looked fine. Validated fine. But the LLM interpreting it made different decisions about what that space meant.

Sometimes the tools worked. Sometimes they didn't. Depending on how I phrased requests.

Traditional parsers either work or throw errors. LLMs exhibit what I call "probabilistic parsing"—they guess at what you meant and sometimes guess wrong. The dangerous part? They often guess plausibly wrong, so you don't notice immediately.
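For contrast, here is a minimal sketch of what "work or throw errors" looks like for that same tools line. This is not how Claude Code reads agent frontmatter. `parse_tools` and `ALLOWED_TOOLS` are hypothetical names, and the allowed set is simply the tool names from my example line.

```python
ALLOWED_TOOLS = {
    "Read", "Grep", "Glob", "LS", "Bash", "TodoWrite", "WebSearch", "WebFetch",
}

def parse_tools(line: str) -> list[str]:
    """Parse a 'tools: A, B, C' line the same way every time, or raise."""
    key, sep, value = line.partition(":")
    if not sep or key.strip() != "tools":
        raise ValueError(f"expected a 'tools:' line, got {line!r}")
    # Whitespace around names is stripped by rule, not by guesswork.
    names = [name.strip() for name in value.split(",")]
    unknown = [name for name in names if name not in ALLOWED_TOOLS]
    if unknown:
        raise ValueError(f"unknown or empty tool names: {unknown}")
    return names

tools = parse_tools("tools: Read, Grep, Glob, LS, Bash, TodoWrite , WebSearch, WebFetch")
```

The stray space after TodoWrite is handled identically on every run, and a genuine typo such as `Grpe` fails loudly instead of being quietly reinterpreted.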

Traditional software has hard boundaries. Permissions are binary. Operations succeed or fail. AI tools introduced something different: rules that bend under pressure. Cursor users report file access getting denied in one session, then working minutes later with identical credentials.

"It works on my machine" has new meaning when your machine includes an AI assistant that thinks differently each time.

Success Depends on the Human

After eight months of daily use across multiple AI coding tools, I can say with certainty that I benefit greatly from these systems. I can develop without the cold start curve that would have stopped me before—no need for a dev to hold my hand (I literally did this when I was running eng at Tripadvisor — thanks Tom Clarke!). I'm learning more than I thought possible. There's an art to these tools, maybe more so because of their unpredictable nature. I'm learning to paint.

But I'm aware my experience isn't universal.

Why do I see gains when others don't? A few reasons:

Deep technical experience helps me spot AI hallucinations

Understanding of when to trust AI suggestions and when to verify independently

Workflow design that treats AI as unreliable until proven otherwise

Budget and time to experiment with multiple tools

Comfort with uncertainty and random behavior

The 19% productivity decrease that experienced developers show in controlled studies makes sense when you consider the overhead of managing probabilistic systems. Every AI suggestion requires judgment calls. Every generated solution needs verification. Every configuration change might work differently next time.
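A minimal sketch of what "unreliable until proven otherwise" can look like in practice: a generated function is only accepted after it passes checks I wrote myself. The names `passes_checks` and `suggested_normalize` are hypothetical, and a real workflow would run a proper test suite rather than a handful of cases.

```python
from typing import Callable, Iterable

def passes_checks(candidate: Callable[[str], str],
                  cases: Iterable[tuple[str, str]]) -> bool:
    """Return True only if the candidate gives the expected output for every case."""
    for given, expected in cases:
        try:
            if candidate(given) != expected:
                return False
        except Exception:
            # A crash counts as a failed check, not as a reason to trust the code.
            return False
    return True

# Hypothetical AI-suggested helper that should normalize a tool name.
def suggested_normalize(name: str) -> str:
    return name.strip()

cases = [("TodoWrite ", "TodoWrite"), (" Read", "Read")]
accepted = passes_checks(suggested_normalize, cases)
```

Writing the cases is exactly the overhead the studies pick up: the verification is cheap per suggestion, but it never goes away.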

Beginners love the help: AI can do things they can't. But when you already know what you're doing? The overhead of managing these probabilistic systems often costs more than the assistance provides. I spend time debugging the AI's interpretations, which is STILL faster than writing the code myself, but does cut down on the productivity gains. Let's put it this way: when AI gets it right, which it often does, it moves at 10x speed. When it can't figure it out, you're better off going to a human engineer. That's the current state.

But here's what the studies miss: the value isn't always in immediate productivity. AI tools excel at exploration, experimentation, handling unfamiliar domains. They're conversation partners for thinking through complex problems. Research assistants for technologies outside your expertise.

Success with AI tools requires continuous oversight, judgment, course correction. That makes adoption more like managing a talented but unreliable team member than deploying traditional software. Some managers will get tremendous value from that relationship. Others will find the overhead exceeds the benefits. This explains why you see so many former leaders excel at agentic coding. We're doing what we've always done—just with AI agents rather than human teams. And it turns out that's an important skill too.

This stuff will spread, but slowly. Unevenly. With lots of failures along the way. It'll depend on individual capability to manage probabilistic systems—a skill that's harder to develop and less evenly distributed than traditional technical competency.

So yeah. Normal technology. Not magic. Not intelligent. Just tech that requires a human to keep it sane.

The pattern of uneven adoption, gradual integration, and human-dependent success looks familiar because it is familiar. We've just never had "normal" technology that required this much ongoing human judgment to work reliably.

Related reading: What's In My Toolkit - August 2025 for the tools that actually survive daily use, and The Ghost in the Machine: Non-Deterministic Debugging for more on debugging probabilistic systems.
